RAM: the forgotten history

We owe computers not to Charles Babbage, but to the invention of electronically-controlled working memory.

In my 2023 article on the evolution of the calculator, I griped about the way we teach the history of computers. In particular, I felt that we’re giving far too much credit to Charles Babbage. We have known how to make mechanical calculators at least since the 17th century; the concept of programmable machinery predated the English polymath’s writings by a wide margin, too. The remaining bottleneck was mostly technical: past a very modest scale, sprockets and cams made it too cumbersome to shuffle data back and forth within the bowels of a programmable computing machine.

The eventual breakthrough came in the form of electronically-controlled data registers. These circuits allowed computation results to be locked in place and then effortlessly rerouted elsewhere, no matter how complex and sprawling the overall design. In contrast to the musings of Mr. Babbage, the history of this “working memory” has gotten less attention than it deserves. We conflate it with the evolution of bulk data storage and computer I/O — and keep forgetting that RAM constraints were the most significant drag on personal computing until the final years of the 20th century.

The bistable cell

The first practical form of electronic memory was a relay. A relay is essentially a mechanical switch actuated by an electromagnet. Its switching action can be momentary — lasting only as long as the electromagnet is powered — or it can be sustained, latching in the “on” state until a separate “reset” coil is energized.

Latching relays can be used to store bits in a self-evident way; a momentary relay can also be wired to latch by having the relay supply its own power once the contacts are closed. A simple illustration of this principle is shown below:

In this circuit, pressing the “set” button will energize the relay, therefore also closing the parallel relay-actuated switch. The current will continue flowing through this path even after the “set” button is released. To unlatch the circuit, the normally-closed “reset” button must be pressed to interrupt the flow of electricity.

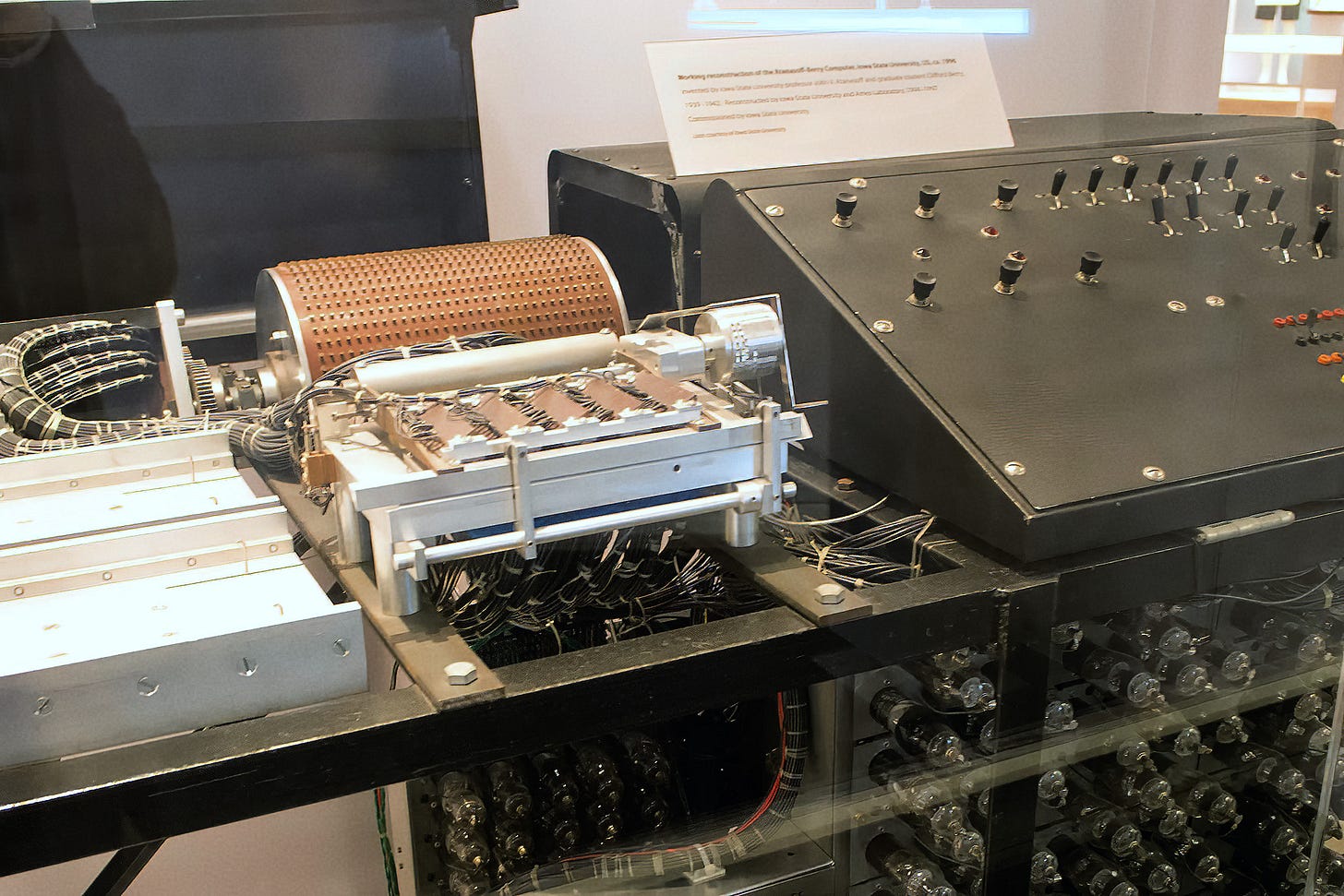

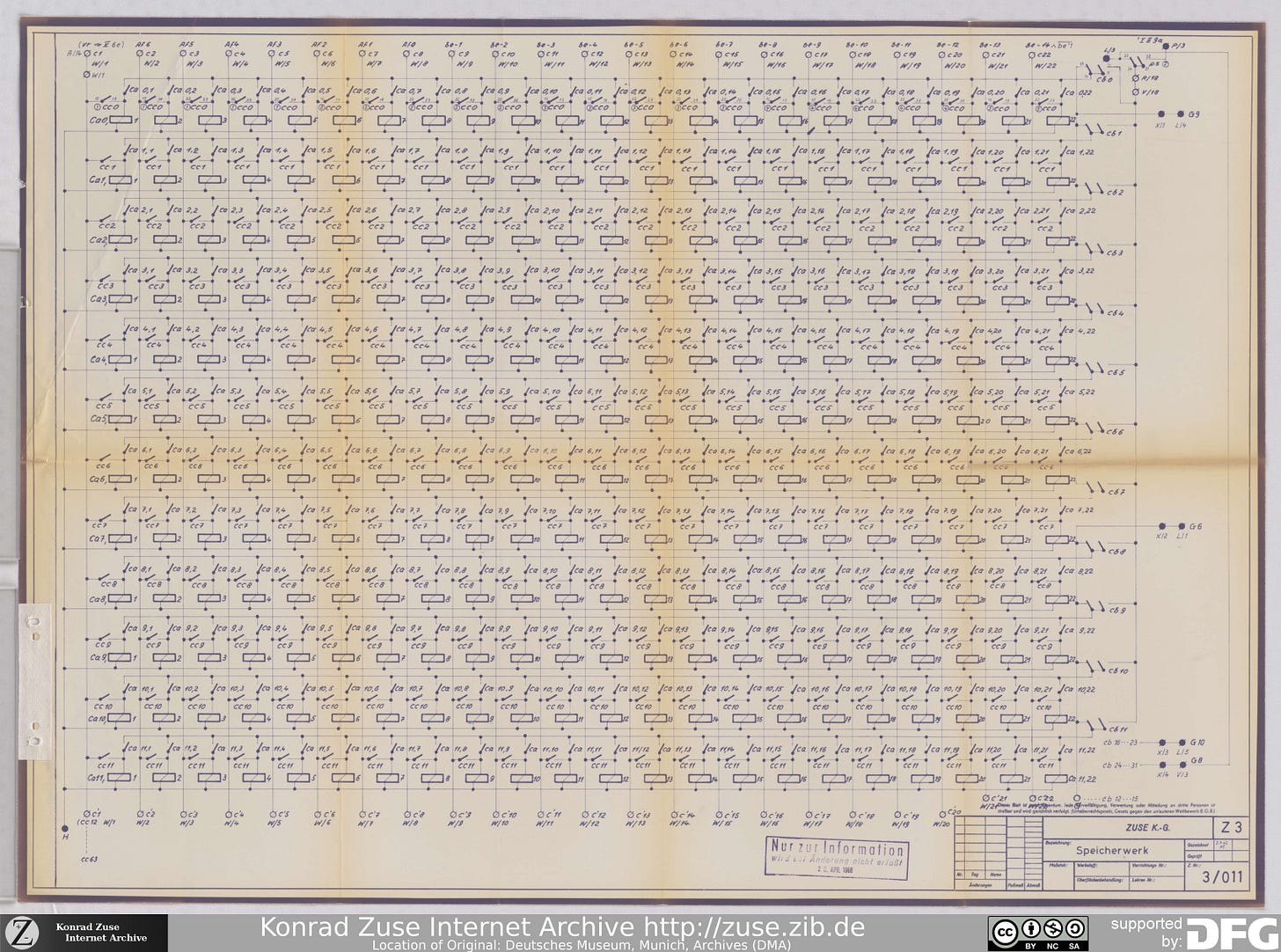

Relay-based main memory featured prominently in what was probably the first working programmable computer, Konrad Zuse’s Z3:

Electromechanical relays have switching speeds measured in tens of hertz; Mr. Zuse understood from the get-go that the technology was a dead end. He took the expedient path in hopes of securing longer-term funding from the Nazi regime; unfortunately for him, the officials were unimpressed, and the funding never came.

Zuse’s counterparts on the Allied side had more luck: they were given the license to pursue more costly and complicated vacuum tube designs. The blueprint for a tube-based memory cell came from William Eccles and Frank Wilfred Jordan — a largely-forgotten duo of British inventors who proposed the following bistable circuit as a replacement for an electromechanical relay:

The circuit latches when an input current flows through the transformer winding on the left. This momentarily makes the G1 grid voltage more positive, upsetting the current balance between the tubes in a self-reinforcing feedback loop.

In modern terms, both of these circuits would be described as set-reset (SR) latches. Their fundamental operation is shared with the cells that make up high-speed, low-power SRAM memories found on the dies of most microcontrollers and microprocessors today. A contemporary textbook example of an SR architecture could be this:

To analyze the circuit, let’s consider what happens if the “reset” line is high. In this scenario, one input of the AND gate is always zero, so the circuit outputs “0” no matter what’s happening on the OR side.

Conversely, if “reset” is low, the AND gate works as a pass-through for the OR. As for the OR itself, if “set” is high, it unconditionally outputs “1”. But if “set” is not asserted, the OR passes through the circuit’s previous output value via the feedback loop on top of the schematic. In effect, at S = R = 0, the device is latched and stores a single bit of data representing what previously appeared on the S and R input lines.
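To make the feedback loop concrete, here is a small Python sketch that steps the gate-level latch one evaluation at a time. It assumes, as the analysis above implies, that the reset line reaches the AND gate through an inverter, so Q = (S OR Q_prev) AND NOT R. The function names are hypothetical, and real gates settle continuously rather than in discrete steps.

```python
def sr_latch_step(s: int, r: int, q_prev: int) -> int:
    """One evaluation of the latch: Q = (S OR Q_prev) AND NOT R."""
    return (s | q_prev) & (1 - r)


def run(sequence, q=0):
    """Feed a list of (S, R) pairs through the latch; return Q after each step."""
    outputs = []
    for s, r in sequence:
        q = sr_latch_step(s, r, q)  # the previous output is fed back in
        outputs.append(q)
    return outputs


if __name__ == "__main__":
    # set, hold, hold, reset, hold
    print(run([(1, 0), (0, 0), (0, 0), (0, 1), (0, 0)]))  # [1, 1, 1, 0, 0]
```

With S = R = 1, this particular arrangement outputs 0: reset wins because the AND gate sits last in the chain. The relay circuit described earlier obeys the same equation, since the coil stays energized while either the set button or the holding contact is closed, but only as long as the normally-closed reset button is left alone.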

We could wrap up here, except for one tiny detail: most vacuum tube computers did not use vacuum tube memory for the bulk of their needs. The reason is clear if we consider that the memory unit in Zuse’s Z3 required about 2,000 relays, compared to 600 for the rest of the machine. The math for the relatively costly tubes was even worse — and each tube needed constant power for the heater filament. In short, until the advent of large-scale integration (LSI) chips in the mid-1970s, main memory usually had to be done some other way.

The merry-go-round

The answer was “dynamic” memories that relied on physical phenomena, such as static electricity or acoustics, to store information in some inexpensive medium. Because the underlying physical process lasted only for a brief while, the data had to be regularly retrieved and refreshed (or retransmitted).

One of the earliest examples of this was the Atanasoff-Berry “computer” (ABC) — in reality, a sophisticated but non-programmable calculator that used a spinning drum with an array of mechanically-switched capacitors:

The charge state of each capacitor conveyed digital information; the gradual self-discharge of the cells necessitated regular refresh. This was, in many respects, the precursor to modern-day DRAM. The DRAM chips in our desktop computers and smartphones still use capacitors; we just make them in bulk on a silicon die and have a way to address them electronically. Although this type of memory is slower and more power-hungry than SRAM, the enduring advantage of DRAM is that it requires far fewer components (and thus less die space) per bit stored.
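A toy model helps show why refresh is unavoidable. The sketch below treats each cell as a leaky capacitor that loses a fixed fraction of its charge per time step, with a sense amplifier that reads anything above half charge as a 1. The leak rate, threshold, and step granularity are made-up illustration values, not parameters of any real chip; a "refresh" here simply reads every cell and rewrites it at full strength.

```python
LEAK_PER_TICK = 0.9  # hypothetical: fraction of charge retained per time step
THRESHOLD = 0.5      # hypothetical: sense-amplifier decision point


def simulate(bits, ticks, refresh_every=None):
    """Return the bits read back after `ticks` steps of leakage.

    If refresh_every is set, every cell is read and rewritten at full
    charge on that interval, mimicking a DRAM refresh cycle.
    """
    charge = [1.0 if b else 0.0 for b in bits]
    for t in range(1, ticks + 1):
        charge = [c * LEAK_PER_TICK for c in charge]  # self-discharge
        if refresh_every and t % refresh_every == 0:
            # Read each cell and restore it to a clean 0 or 1.
            charge = [1.0 if c > THRESHOLD else 0.0 for c in charge]
    return [1 if c > THRESHOLD else 0 for c in charge]


if __name__ == "__main__":
    data = [1, 0, 1, 1]
    print(simulate(data, 10))                   # no refresh: the 1s fade away
    print(simulate(data, 10, refresh_every=5))  # refreshed in time: data survives
```

With the assumed 10% leak per step, a stored 1 drops below the threshold after seven steps, so refreshing every five steps preserves the data while refreshing every eight does not.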

But back to early computing: another fascinating incarnation of the idea was the delay-line memory. These devices encoded data as sound waves pumped into a length of material and then retrieved on the other side. Because sound takes time to propagate, some number of bits could be “stored” in flight in an electro-acoustic loop. At the same time, because the wave moves pretty briskly, the latency of this system was far better than that of a spinning drum.
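The recirculating loop can be modeled as a fixed-length queue: on each clock tick, the bit reaching the receiver is amplified and fed back into the transmitter, optionally replaced with a new value. The `DelayLine` class below is a hypothetical toy with one slot per bit period; it ignores signal reshaping and timing recovery, but it shows the key property that access latency depends on how long you wait for the wanted bit to come around.

```python
from collections import deque


class DelayLine:
    """Toy recirculating delay line: bits march one slot per clock tick."""

    def __init__(self, capacity):
        self.line = deque([0] * capacity)  # bits currently "in flight"
        self.clock = 0

    def tick(self, write=None):
        """Advance one clock: take the bit arriving at the receiver and
        re-emit it (or `write`, if given) at the transmitter."""
        bit = self.line.popleft()
        self.line.append(bit if write is None else write)
        self.clock += 1
        return bit

    def access(self, address, write=None):
        """Wait for slot `address` to reach the receiver, then read it
        (and optionally overwrite it). Returns (old_bit, ticks_waited)."""
        n = len(self.line)
        waited = (address - self.clock) % n  # the bit at clock t is slot t mod n
        for _ in range(waited):
            self.tick()
        return self.tick(write), waited


if __name__ == "__main__":
    dl = DelayLine(8)
    print(dl.access(5, write=1))  # (0, 5): waited five ticks to store a 1
    print(dl.access(5))           # (1, 7): waited seven more for it to return
```

In a line holding N bits, the wait ranges from zero to N - 1 ticks.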

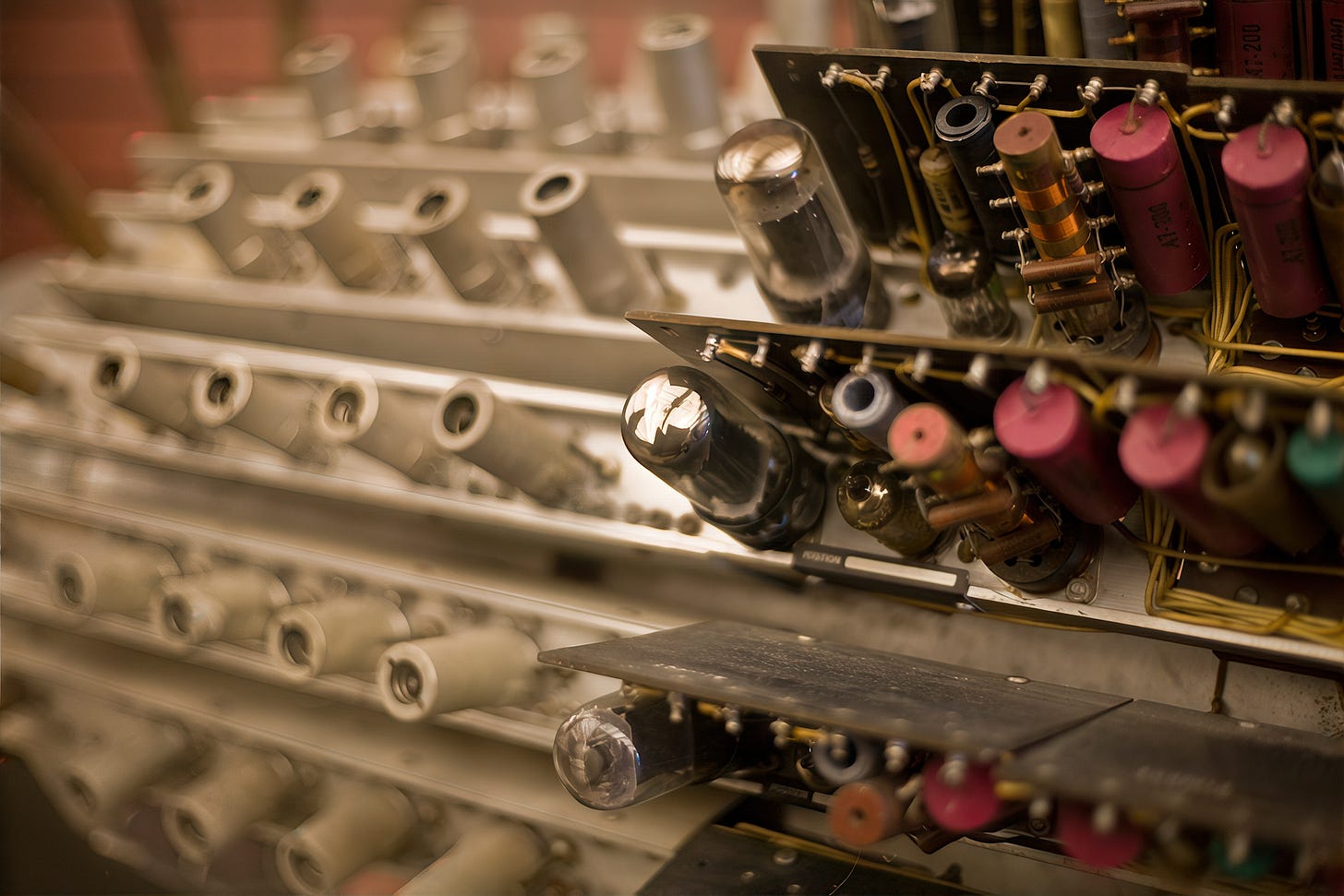

The wackiest example of this genre is the mercury delay line. As the name implies, these devices relied on the toxic, bioaccumulating metal as the medium for sound waves:

Wikipedia will tell you that mercury was chosen because it uniquely matched the acoustic impedance of piezoelectric transducers, but I think that’s a misreading of the original patent (US2629827A). The problem with solid metal was presumably just that the longitudinal speed of sound was much higher than in liquids — so at a given clock speed and column length, you couldn’t store as many bits. Conversely, the issue with less dense and more compressible liquids might have been that they attenuated high frequencies, reducing the maximum transmit rate. All in all, I suspect that mercury simply offered the optimal storage capacity for the era — as long as you didn’t care about safety or weight.
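The capacity argument above boils down to simple arithmetic: the number of bits in flight equals the propagation delay multiplied by the bit rate, and the delay is the column length divided by the speed of sound. The sketch below plugs in approximate textbook figures for longitudinal sound in mercury and in bulk steel (roughly 1,450 m/s and 5,900 m/s), together with a hypothetical 1-meter column and a 1 MHz bit rate. These are illustrative assumptions, not specifications of any historical device.

```python
# Approximate room-temperature longitudinal speeds of sound, in m/s.
SPEED_OF_SOUND = {
    "mercury": 1450.0,
    "steel": 5900.0,
}


def bits_in_flight(length_m, speed_m_s, bit_rate_hz):
    """Number of whole bit periods that fit inside the propagation delay."""
    delay_s = length_m / speed_m_s
    return int(delay_s * bit_rate_hz)


if __name__ == "__main__":
    # Hypothetical 1 m column clocked at 1 MHz.
    for medium, v in SPEED_OF_SOUND.items():
        print(medium, bits_in_flight(1.0, v, 1e6))
```

Under these assumptions, the mercury column holds about 689 bits versus about 169 for steel, roughly a fourfold difference, which fits the hunch that slow propagation was the whole point.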

Thankfully, this “mad scientist” period ended the moment someone thought of coiling the metal to accommodate more data in flight. Meet torsion memory:

The approach relied on acoustic waves traveling through spring wire, except it used torsion (twisting) instead of longitudinal (push-pull) actuation. This allowed the wire to be loosely coiled and held in place with rubber spacers; with longitudinal waves, much of the acoustic energy would be lost at every turn.

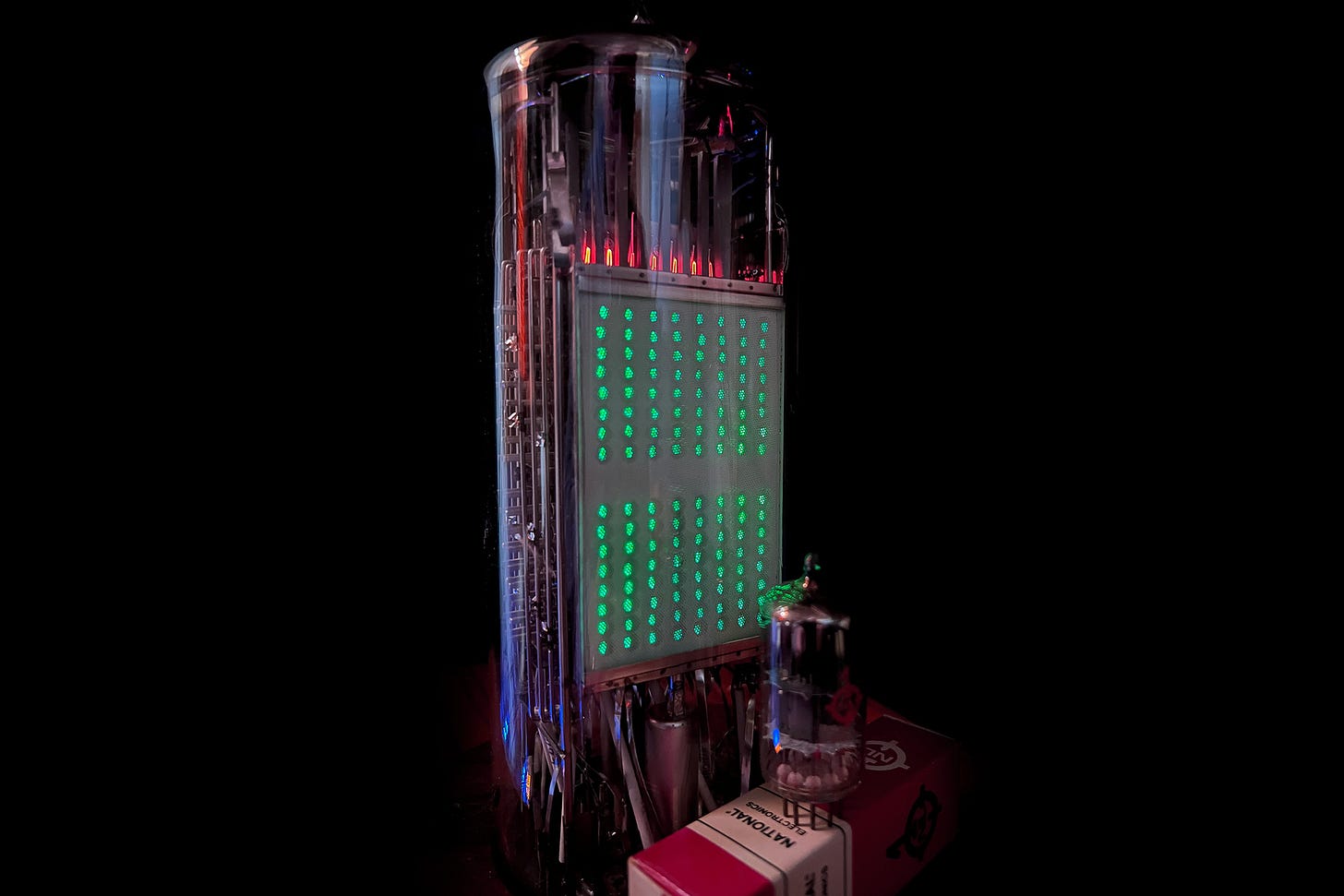

Yet another fascinating class of dynamic memories included the Williams tube and the RCA Selectron tube. Neither had any moving parts; the latter device is pictured below:

The devices operated somewhat like a CRT, selectively firing electrons at a phosphor substrate and storing several hundred bits as a pattern of illuminated dots. Although the stored data was visible to the naked eye, the readout relied on sensing changes to electrostatic fields instead. In essence, electrons were getting knocked around in the phosphor layer, creating a net charge which could be picked up for a while by a nearby electrode.

The magnetic era

Today, magnetic media is typically associated with bulk, at-rest data storage: hard disks, floppies, and LTO tapes. But for about two decades — roughly between 1950 and 1970 — magnetic main memories played a major role in computing, too.

The earliest incarnation of this was the magnetic drum memory, an intermediate stage between the capacitor-based memory of the Atanasoff-Berry computer and the modern hard drive:

In this design, read and write heads hovered above the surface of a fast-spinning ferromagnetic cylinder. The relatively small diameter of the cylinder, coupled with the high number of parallel heads, kept the read times reasonably short. That said, the computers of that era were already running at multi-megahertz speeds — so the latency of the electromechanical solution was far from ideal. The only advantage of the drum memory was its relatively high capacity, difficult to match with a delay line.
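To put the drum's latency in perspective: if the data you need has just passed under the head, you must wait a full revolution, and on average you wait half of one. The sketch below assumes a hypothetical drum spinning at 12,500 RPM and a 1 MHz processor clock; both numbers are chosen for illustration rather than taken from a specific machine.

```python
def drum_latency_s(rpm):
    """Return (worst-case, average) rotational latency in seconds."""
    revolution_s = 60.0 / rpm
    return revolution_s, revolution_s / 2


def latency_in_cycles(latency_s, clock_hz):
    """How many processor clock cycles elapse while waiting."""
    return latency_s * clock_hz


if __name__ == "__main__":
    worst, avg = drum_latency_s(12_500)  # hypothetical drum speed
    print(f"worst {worst * 1e3:.1f} ms, average {avg * 1e3:.1f} ms")
    print(f"~{latency_in_cycles(avg, 1e6):.0f} cycles at an assumed 1 MHz clock")
```

At those assumed figures, the average wait is 2.4 ms, or about 2,400 clock cycles spent idle for a single access.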

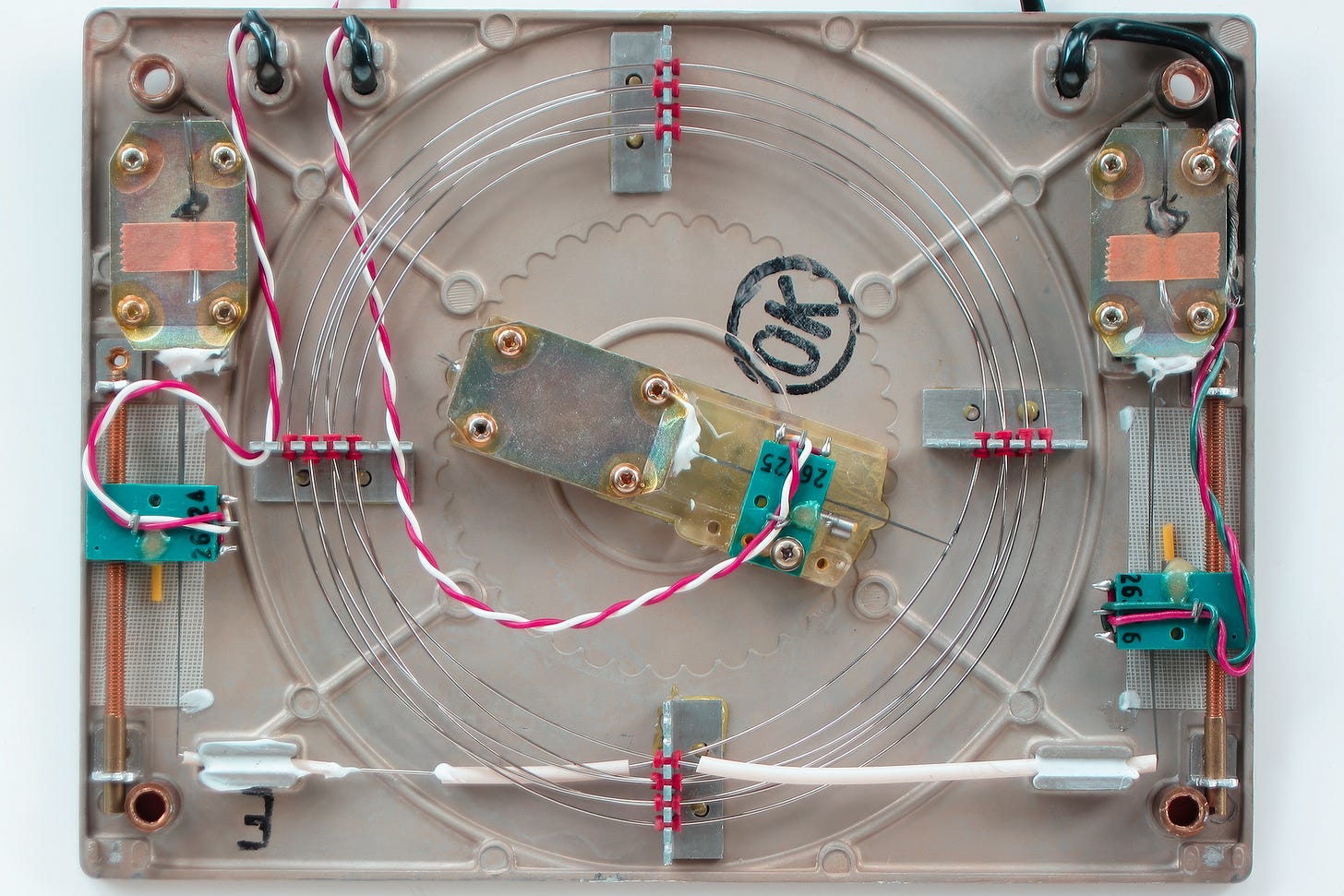

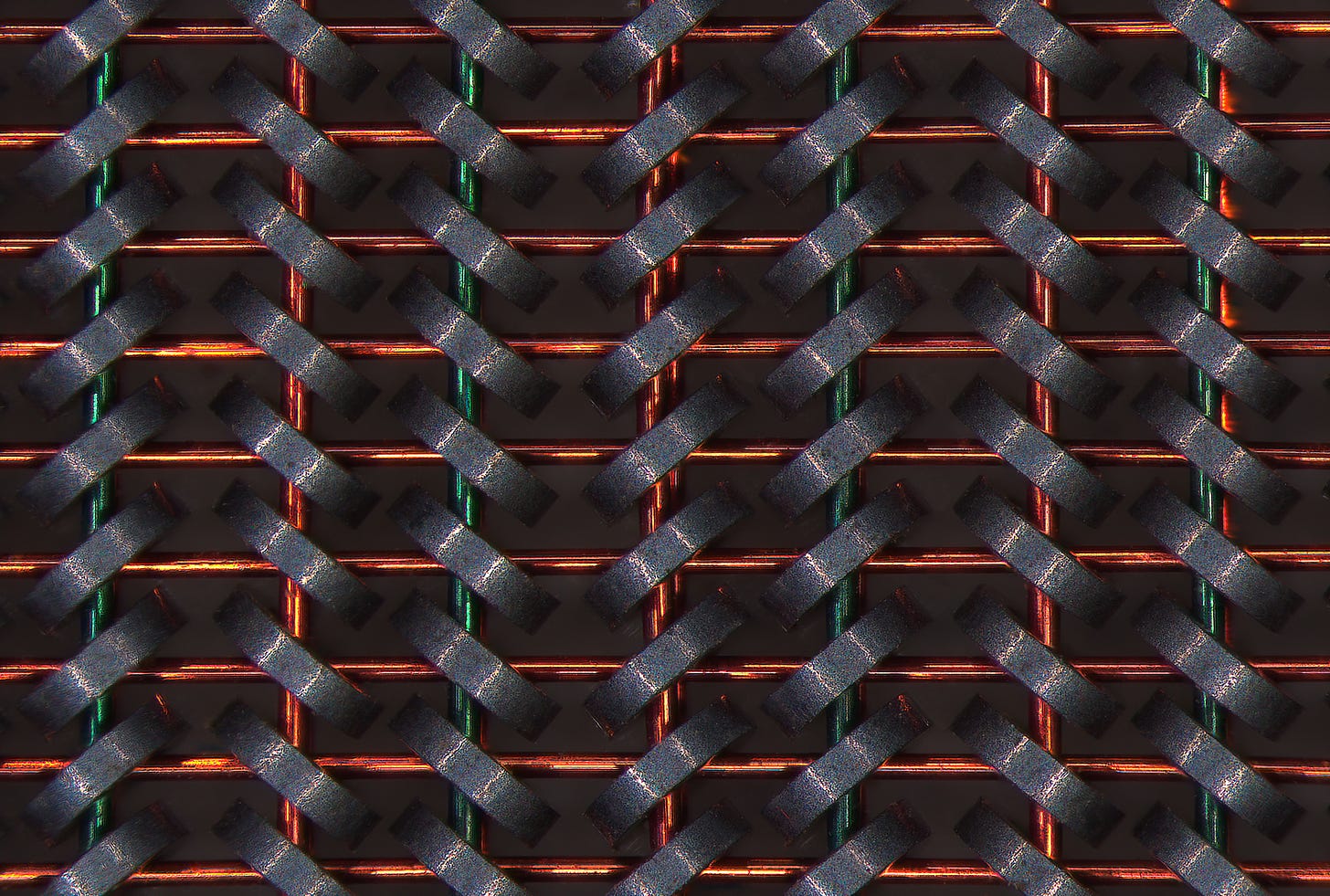

But the pinnacle of the pre-IC era was solid-state magnetic core memory. These devices consisted of an array of microscopic ferrite beads (“cores”) with a pattern of insulated copper wires woven through:

The key property of the cores is that they could be magnetized, but their magnetic polarity flipped only if the magnetizing field exceeded a certain threshold. Below that threshold, they did not respond at all.

To write to a specific cell, a sub-threshold magnetic field would be induced around a specific horizontal wire by supplying a calibrated current through its length. Another sub-threshold field would be created along a vertical wire. At the intersection of the two wires, the intensity of the combined fields would exceed the threshold and “flip” the selected core.
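The coincident-current trick can be illustrated with a grid of cores, each holding one of two polarities. In the hypothetical model below, every drive wire contributes exactly half of the switching threshold, so only the core where the selected row and column cross receives enough field to flip. Field strengths are normalized, and the hysteresis of real ferrite is reduced to a simple threshold test.

```python
THRESHOLD = 1.0  # normalized field needed to flip a core
HALF = 0.5       # each drive wire supplies half of the threshold


def write(cores, x, y, bit):
    """Drive row x and column y with half-select currents.

    cores[r][c] holds +1 or -1 (the two magnetic polarities).
    Returns how many cores saw a sub-threshold field and stayed put.
    """
    direction = 1 if bit else -1
    half_selected = 0
    for r in range(len(cores)):
        for c in range(len(cores[r])):
            field = (HALF if r == x else 0.0) + (HALF if c == y else 0.0)
            if field >= THRESHOLD:
                cores[r][c] = direction  # only the intersection flips
            elif field > 0.0:
                half_selected += 1       # on a driven wire, but below threshold
    return half_selected


if __name__ == "__main__":
    plane = [[-1] * 4 for _ in range(4)]
    print(write(plane, 1, 2, 1))  # 6 half-selected cores left untouched
    print(plane)
```

Writing to one core of a 4 × 4 plane leaves the six other cores on the same row and column half-selected but unchanged.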

The readout process was a bit more involved: it relied on overwriting a cell with a known value and then sensing the current induced in a separate sense wire if the polarity flipped. If no current was detected, the written value must have been the same as what was stored in the cell before. Because a flip during readout erased the stored value, the original bit then had to be written back.
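The read-then-restore cycle can be sketched in a few lines. This is a minimal model, assuming the common convention that the read drives the core toward the polarity representing 0 (here -1) and that any 1 detected this way is immediately rewritten; `read_core` is a hypothetical function that skips the actual current pulses.

```python
def read_core(cores, x, y):
    """Destructively read the core at (x, y), then restore it.

    cores[x][y] holds +1 (a stored 1) or -1 (a stored 0).
    """
    previous = cores[x][y]
    cores[x][y] = -1              # drive the core toward the "0" polarity
    flipped = previous != -1      # a flip induces a pulse on the sense wire
    bit = 1 if flipped else 0
    if bit:
        cores[x][y] = 1           # write-back: the read just erased this 1
    return bit


if __name__ == "__main__":
    plane = [[-1, 1], [1, -1]]
    print(read_core(plane, 0, 1), plane[0][1])  # 1 1: read a 1, then restored it
```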

Magnetic memories were fast and quite tiny; the microscope photo shown above comes from a 32 kB module, about 8x8” in size. And interestingly, the legacy of magnetic RAM still lives on: a company called Everspin Technologies sells magnetoresistive (MRAM) chips for embedded applications in sizes up to 16 MB. The chips behave just like SRAM, except they retain data with no power. At the moment, the tech doesn’t seem to be particularly competitive; but given the meandering path we have taken over the past eight decades, who knows what the future holds?

I write well-researched, original articles about geek culture, computing history, and more. If you like the content, please subscribe. It’s increasingly difficult to stay in touch with readers via social media; my typical post on X is shown to less than 5% of my followers and gets a ~0.2% clickthrough rate.

PS. I'm using "RAM" as a synonym for "immediate working memory". I don't think that the strict definition - "equal access times for all locations" - is very useful nowadays. We wouldn't call SSDs "RAM", even though they don't exhibit seek times.

Anyway... for folks interested in the tail end of this: the first single-chip SRAM was Intel 3101, which also happened to be the company's first product. It stored 64 bits: a fast but tiny memory intended primarily for data registers.

The first commercial DRAM chip came from the same company shortly thereafter: the Intel 1103. It could store 1 kilobit and had a 2 ms refresh cycle. The 1103 was considerably more complex than the 3101 and required two supply voltages, but it started displacing core memories pretty fast.

Some of the 1103 design highlights are discussed here: https://bitsavers.trailing-edge.com/magazines/Electronics/Electronics_V46_N09_19730426_Intel_1103.pdf

And a manual for early Intel memories is here: http://bitsavers.informatik.uni-stuttgart.de/components/intel/_dataBooks/1973_MemoryDesignHandbook_Aug73.pdf
