Random objects: Intel Edison

A glimpse of the missteps of x86 in the embedded world.

In some of the earlier episodes of “the history of things”, we looked at curiosities ranging from Victorian self-defense devices to ultra-miniature vacuum tubes. But in today’s installment, I’d like to talk about an artifact of a much more recent vintage.

Many readers will recall that in the early 2010s, we were in the heyday of the internet-of-things (IoT) craze. It wasn’t just phones or cars that were getting online; tiny networked computers started cropping up in lightbulbs, thermostats, coffee makers, and vegetable juicers.

The man behind the curtain was ARM Holdings: a little-known company that designed a series of low-power 32-bit CPU cores and then started cheaply licensing them to anyone who wanted to make their own chips. Their early CPUs were by no means great, but they were good enough. All of a sudden, you could put Linux and wi-fi inside just about anything, and it only cost you a buck or five.

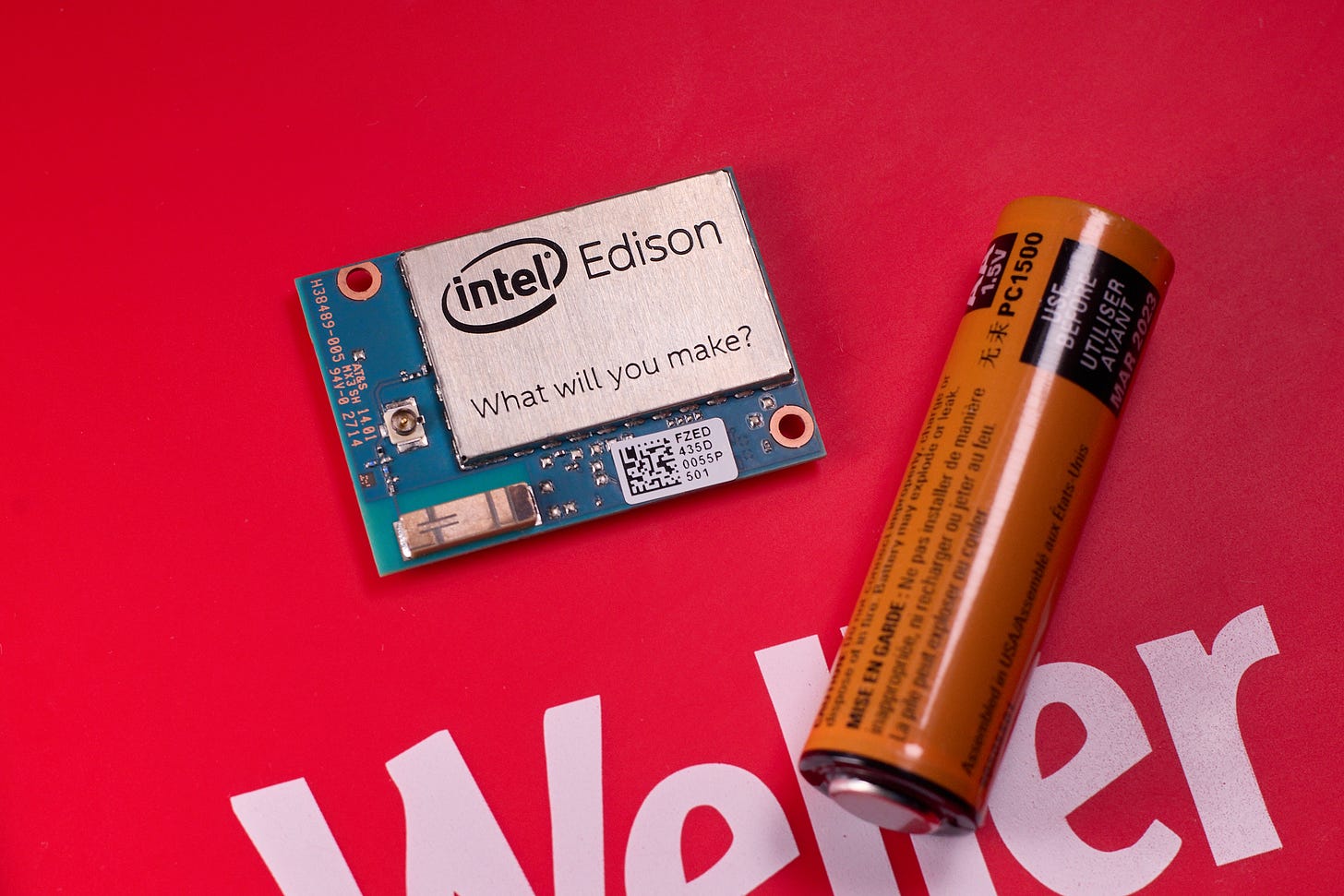

At the time, Intel, the dominant manufacturer of desktop and server processors, was still singularly focused on high-performance devices, including their failed bet on IA-64. But by 2013, they decided they wanted in on the IoT game and released a stripped-down, low-power x86 processor dubbed Intel Quark. The centerpiece of their Quark ecosystem was supposed to be Intel Edison, a wi-fi-enabled dual-core Linux system the size of a postage stamp, retailing for about $50.

The device ultimately shipped with a different microarchitecture, Silvermont, but it was still selling the same idea: that Intel was a worthwhile alternative to ARM in the embedded space. The platform was ostensibly marketed to hobbyists, and this made a lot of sense: many IoT products were coming out of small shops started by enthusiasts, and hobby single-board computers such as Raspberry Pi or BeagleBoard provided a natural on-ramp for ARM devices. But Intel was also pitching it as a “product-ready” module, an idea embodied by the inexplicable “Intel Smart Mug”, showcased during their 2014 CES keynote.

The “product-ready” pitch for Edison wasn’t compelling, especially since it coincided with Espressif releasing a series of wi-fi microcontrollers that retailed for less than one tenth the price. But for hobbyist uses, the module was nothing short of revolutionary: it was much smaller and more power-efficient than any other hobby single-board computer you could buy at the time.

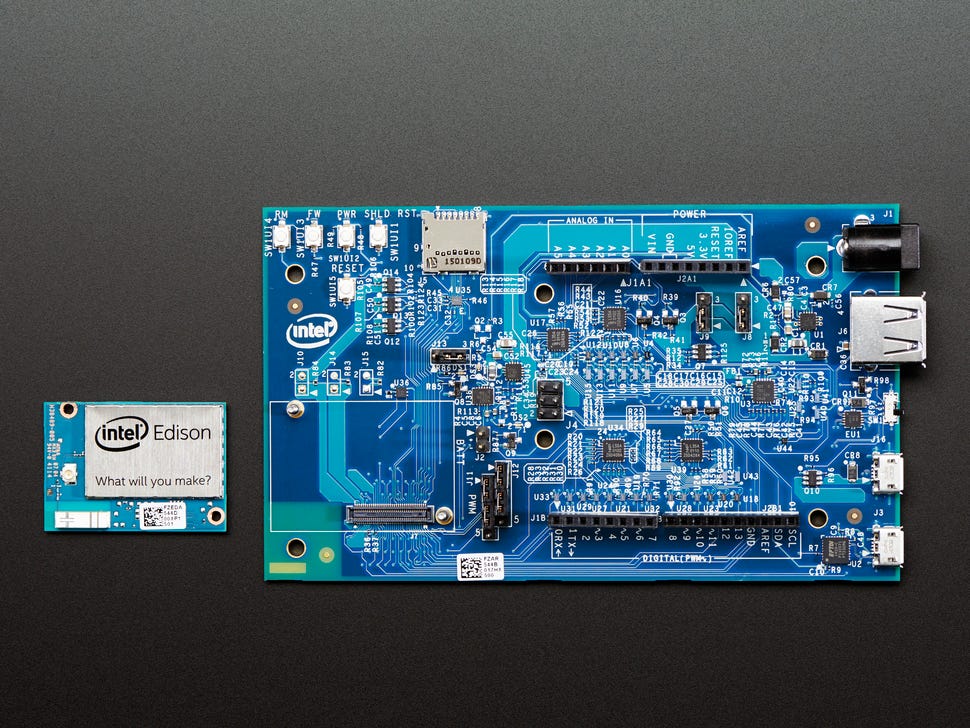

But then, Intel fumbled everything else. To use Edison in hobby applications, you needed a breakout board for the subminiature Hirose DF40 connector in the back. Passive breakout boards were tricky to use because the chip could only tolerate 1.8V on GPIO pins; the primary “active” board marketed by Intel was an $80 “faux Arduino” monstrosity that effectively obliterated all of Edison’s price or size advantages.

It was a death of a thousand cuts on other fronts too. The release-day documentation for Edison was lacking and hard to find on Intel’s own website; the factory-preloaded Linux distro was broken so badly that it didn’t even persist wi-fi settings across reboots. Instead of polishing the out-of-box experience, Intel ended up getting sidetracked by weird corporate partnerships. For example, they pursued support for Mathematica’s Wolfram language, something that I imagine appealed to roughly one user: Stephen Wolfram himself.

Just three years later, Edison was no more. With it died the small dream of having an expressive assembly language on embedded CPUs.

IMHO the biggest misstep from Intel was to give up on Edison. It was not just a SoC and not just a tinker board like RPi or Arduino. It was a SOM with ROM/RAM/BT/Wi-Fi and might have become a new standardized form factor. As such, the use of the Hirose connector did not have to be a showstopper.

After giving up, they potentially missed the phone/tablet market and the IIoT market. And now they may potentially lose the datacenter as well.

It's true that initially Edison was announced as a Quark-based SOM. The final version, with a Silvermont core and a Quark MCU, would have been perfect for IIoT: Linux for the connection to the world and an RTOS on the Quark for measurement and control.

It may have been that the time was not ripe. Yocto was buggy. The kernel support was not yet upstreamed, and Viper OS had no existing market.

How different it is today: PREEMPT_RT just landed for Linux, and Viper has turned into Zephyr.

And the Edison support has mostly landed in the kernel, including ACPI (which is a great improvement over all the platform support needed to get Arm based boards running).

Today the community support for Edison is in quite a good state, building recent Yocto images with the latest Linux kernels. See https://edison-fw.github.io/meta-intel-edison/.

The Edison was a brilliant thing. Today, 10 years later, it would still fill a hole in the market (arguably at a lower price point than the $50 it cost at the time).

There are some inaccuracies in the article. First of all, it's hard to compare an MCU to a full-featured 64-bit microcomputer, which Intel Edison is. The resources it provides cannot be matched by poorly performing MCUs, and it does not consume that much power. So there was (still is?) a niche for it for sure. Second, the Arduino board is more likely meant for DIY prototyping; it is definitely not meant for something real. The SparkFun baseboard is what the article failed to show. Last, but not least, Edison is supported by mainline U-Boot and the Linux kernel (and Yocto), which, let's say 6 years ago, was not the case for the majority of ARM-based boards (all of them required vendor-specific, heavily patched code).