Spotify: droppin' them fake beats

Infinite streams of machine-generated music are here to assault your remaining senses.

A while back, I made a simple prediction: that barring a radical intervention, in a decade or two, most interactions on the internet will be with machines that are pretending to be other people. It’s not that I’m prone to habitual pessimism; it’s that our aggregate capacity for human-to-human interaction is inherently capped. In contrast, the ability to generate human-like text, images, and audio is now almost infinitely scalable with generative AI. From customer support, to marketing, to cybercrime, there are powerful incentives to crank it up to eleven without being entirely upfront with you.

The most popular article on this blog is still my 2022 entry about a machine-generated book I accidentally bought on Amazon. I made the discovery before the release of ChatGPT; since then, machine-generated books, articles, and imagery have swarmed the web. If you want to find real photos on Google Images, the before:2022-01-01 operator is a godsend. The contagion is also spreading to the physical world. For example, off-brand jigsaw puzzles for sale on Amazon now routinely feature misshapen children and animals:

A phenomenon that has gotten much less attention is generative music; machine-generated songs can be created on platforms such as Suno, possibly from nothing more than a single-sentence prompt outlining the desired style and lyrical themes. As with generated images, the technology is impressive; the results are not quite there, but if it’s just playing in the background, you will probably miss the cues.

And so, it was only a matter of time before this band started automatically playing for me on Spotify in the platform’s personalized “release radar” lineup:

“Huh”, I thought to myself. This is some seriously bland, autotuned symphonic metal. And what’s up with that ultra-generic description and the AI-quality cover image?

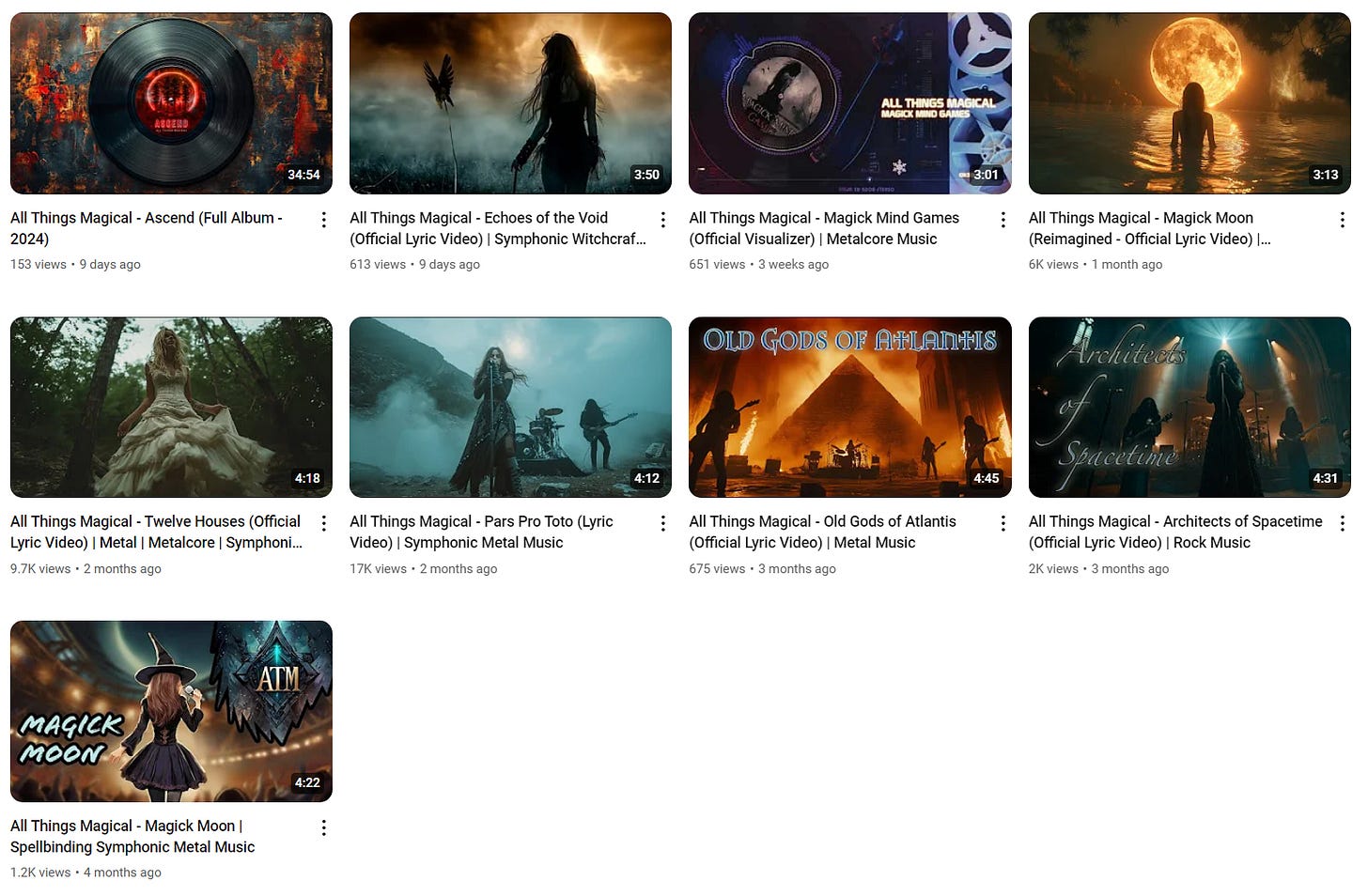

My suspicions aroused, I went to the band’s YouTube profile — 4.4k subscribers! — and discovered a series of videos consisting almost exclusively of stock footage and AI-generated images:

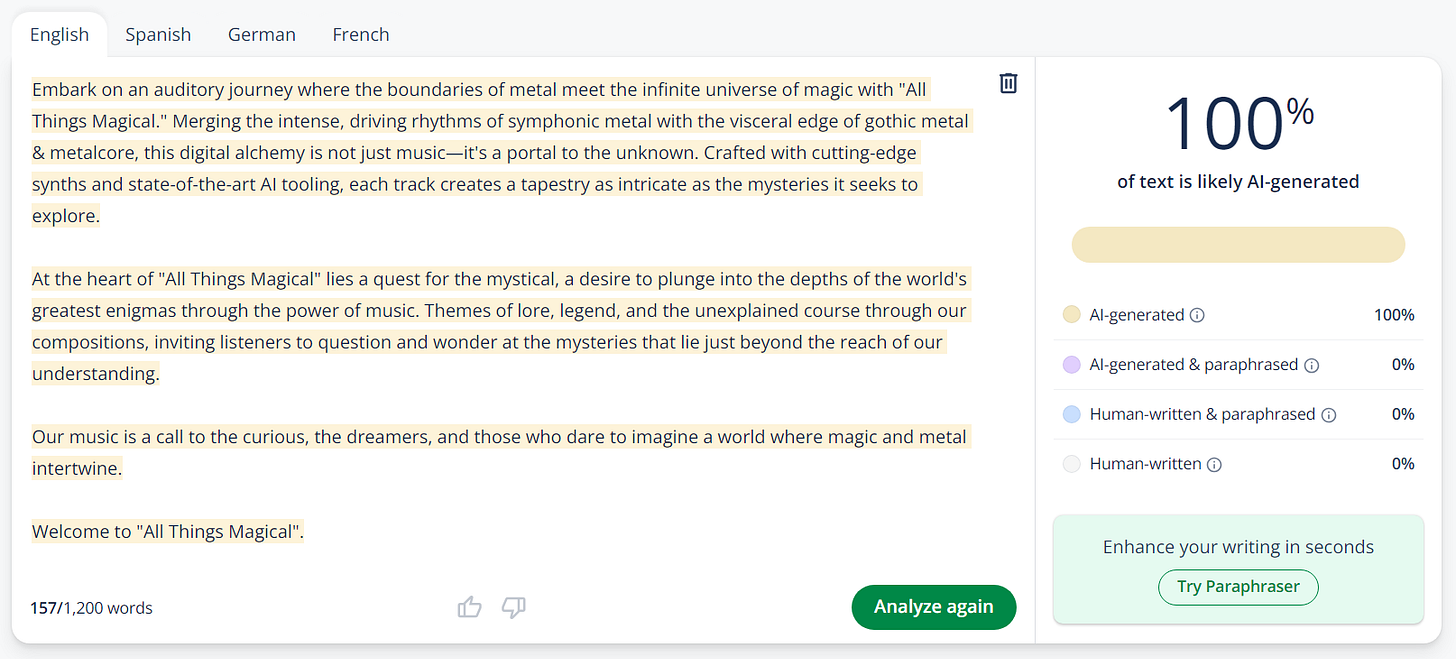

The channel’s description featured this bizarre, ChatGPT-esque passage:

“Disclaimer: Our channel is a space of respect and open-minded exploration into the themes of magic and the occult within the scope of the songtexts. We encourage positive engagement and community spirit in the comments section.”

The vocals sounded inconsistent, the lyrics were comically kitschy, and the appearance of a woman who I presumed was the lead singer changed from clip to clip.

At that point, I posted an exasperated rant on Mastodon; a reader by the name of @moirearty quickly uncovered this remark under one of the early clips:

Credit where credit is due: the author explained what’s going on when asked, and included a passing mention of AI in the below-the-fold summary on Spotify.

I wasn’t looking for any more trouble, but soon thereafter, another recommendation followed — this time, a made-up band with a four-fingered guitarist and no mention of AI anywhere:

It followed a very similar template of bad, inconsistent music combined with evidently auto-generated images and text:

The bottom line is that surreptitious non-human music is here. In the era of automatic playlists and algorithmic feeds, such cheaply generated infinite shovelware can monetize your behavior without meaningful consent, and without giving you anything worthwhile in return.

I should note that I’m not a neo-Luddite: I don’t mind people using modern tools. I was there when the digital photography revolution happened, and I shed no tears for film. But if the bulk of the creative output is done by a black box, I don’t think it’s right to pretend it’s human work. Finally, the societal externalities of minimal-effort generative content can’t be easily wished away.

PS. Although I included enough info to find the YouTube channel of the "band", please don't harass the creator. While I think they should be more forthcoming in labeling the content as synthetic, it's ultimately up to platforms to give us better control.

I'll also address this HN quip about my HN quip:

>>> Despite some HN quips, AI text detectors are pretty dependable

>> I have never tried AI text detectors, but my impression was that they were considered unreliable?

> They are. The author is wrong.

There is some HN lore rooted in public complaints from people who were sacked from their jobs because someone investigated their writing and it consistently failed such automated checks. The complaints fit neatly into several recurring narratives, so I don't think the reporting gets seriously challenged.

I was quite skeptical of the tooling, but having played with it extensively, I believe the apps tend to be unreliable mostly in the sense that there are prompting tricks to evade detection. I was not able to consistently trigger false positives on human writing, regardless of the writer's language proficiency or style. Further, for the handful of stories where the fired employees' writing was public, it was pretty clear to me that they weren't telling the whole story. In essence: the tech, while not perfect, is pretty good.

[Edit: in a comment below, Ruslan gives an example of a human email that triggered the tool for him, so caveat emptor.]

Hey there, I'm the creator behind the "band" you mentioned - I usually call it a music project because I don't want to imply anything that it is not! First off, thanks for taking the time to write about this musical experiment. I got a good smile out of your "comically kitschy" description of the lyrics. What can I say? I like it ;-)

Now, about those changing vocals - you're absolutely right. Consistency is a real challenge with the current state of AI audio tools. While we've made leaps in the visual realm (shoutout to Midjourney and, of course, Stability AI, who were more or less the first to push image generation to the heights it has reached today - at least, imo), audio is still playing catch-up. It's a bit like we're in the era of six-fingered images, but for sound.

Fun aside, I want to address a couple of points you raised, because transparency is important to me. As you mentioned, I do disclose the use of AI in my Spotify profile, and I make sure to check the "AI generated content" box on every single YouTube upload. I'm not trying to hide anything from anyone. In fact, I reply to every comment on Instagram, YouTube, and other platforms, confirming that yes, AI was used to generate the foundations of these songs. But I also reiterate that it does not stop there. I'm not trying to build an AI agent that just spits out music without any human interaction and feeling.

Again, here's the thing: for me, this project isn't about scaling or mass-producing low-effort content. It's a personal form of creative expression. I'll be the first to admit that I can't play a guitar or sing to save my life. But I do know what I like, and I've learned how to use AI tools to bring that vision/feeling to life. My thesis is that people will be (or are already) fed up with low-effort trash AI stuff. But when used creatively, it's an insanely powerful tool.

After the AI generates the basis for a song, I spend hours (for some songs, days) in RX11 and other software, reducing noise and refining the audio (the output quality is oftentimes not even at the "six fingers" stage of image generation AI, if you know what I mean). That's also why I don't put "AI Generated" in the title of the videos or anything like that. In my mind, it would imply that I just hit generate, add the video, and upload it to YouTube - which is simply not true. My goal is to "infuse" (for lack of a better word) each track with genuine emotion, if that makes any sense. It is simply a new way of working with these things. I truly believe that we're on the cusp of some mind-blowing advancements in AI-assisted music creation (AI in general, but here we are talking music), and I'm excited to be a part of that in some way.

So while I appreciate the critical lens through which you viewed my work, I want to assure you and your blog readers that my intentions are good. This is a fun, challenging, and deeply rewarding project for me - a way to push my personal boundaries and a test of what's possible when human creativity and AI join forces. Okay, this sounded more pompous than planned, but I guess you get the point. Also, this does not mean that everybody likes it, or that the song structure isn't "weird" at times due to a glitching AI. Sometimes I can repair it afterwards by gluing the song together differently in FL Studio; sometimes I keep it. Music is very subjective, and while it might sound cheesy to some, others might like it and call it catchy. Just a thought.

In any case, thanks again for sparking this conversation, and for encouraging your readers to engage with my content in a respectful manner - because there is already a lot of hate about it out there (which I can partially understand, given the state of the music industry, but this is another bigger discussion that goes beyond the scope of this comment).

Cheers,

Jay