Several days ago, a VC-funded company called OpenAI released a public preview of ChatGPT. ChatGPT is a cutting-edge language model capable of fluently answering a dizzying range of factual questions, as well as performing certain creative tasks: cobbling together short essays and computer programs, constructing inoffensive poetry, or imitating the speaking style of well-known personalities.

Some pundits see ChatGPT and its ilk as the arrival of the technological singularity: a sign that machines have reached near-human cognitive skills and are about to profoundly reshape societies, or even lead us to our doom.

Although I share some of their fears, I advise caution. The technology feels magical and disruptive, but we felt the same way about ELIZA, the first chatbot, and about the Prolog-based expert systems that followed it. This isn’t to say that ChatGPT is a dud; it’s just that the shortcomings of magical technologies take some time to snap into view.

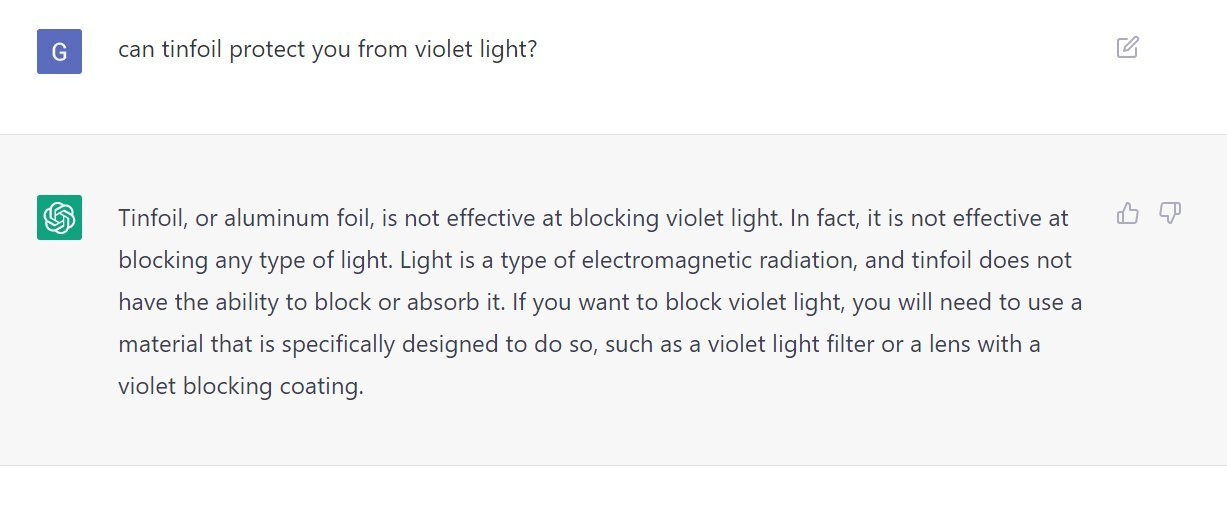

The models are, in essence, statistical predictors of what language tokens are most likely to follow a particular prompt. Given a sufficiently vast data set, their output can be stunning, even for seemingly novel queries. That said, the systems can be thrown off by topics that haven’t been discussed at length on the internet; in such circumstances, they produce human-like answers, but can display an utter lack of common sense.
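To make the “statistical predictor” idea concrete, here is a toy sketch in Python. It is a word-level bigram counter, not how ChatGPT actually works: real LLMs use large neural networks over subword tokens and condition on the whole prompt, not just the previous word. The function names `train_bigram` and `predict_next` are hypothetical, chosen only for illustration. The model returns whichever word most often followed the given word in its training text, and has nothing to say about words it has never seen.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter[str]]:
    """Hypothetical helper: count, for each word, which words follow it."""
    words = text.lower().split()
    follows: defaultdict[str, Counter[str]] = defaultdict(Counter)
    # Pair every word with its immediate successor and tally the pair.
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: dict[str, Counter[str]], word: str) -> str | None:
    """Hypothetical helper: return the most frequent follower of `word`.

    Returns None for words absent from the training text; a toy model has
    no way to generalize beyond the statistics it has collected.
    """
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


model = train_bigram("the cat sat on the mat the cat ate the fish")
print(predict_next(model, "the"))  # "cat" follows "the" twice, more than any other word
print(predict_next(model, "dog"))  # unseen word, so None
```

Fed a vastly larger corpus, even simple counting starts to produce plausible-looking continuations; the `None` for an unseen word is only a crude analogue of the common-sense failures described above, since real models always produce *some* fluent answer.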

Another problem is that the training process selects for responses favored by human raters, so many of ChatGPT’s answers sound authoritative even when there is no substance behind them. Difficult questions may be handled by simply reciting the definitions of the underlying terms; stock phrases such as “in summary”, “depending on specific factors”, or “due to the challenges and limitations involved” crop up a lot as well. The line between useful knowledge and linguistic parlor tricks is blurry at best.

Nevertheless, in a casual conversation, it’s difficult to pinpoint any fundamental and consistent failing that sets ChatGPT apart from “true” human cognition. The big philosophical question is whether we underestimate how much information is available on the internet, and how little actual thinking is needed for a large index to appear human; or whether there really isn’t a whole lot more to our brains to begin with, and you can outsmart humans with a web crawler and a GPU. I suspect it’s the former, but I’m not making bets.

Even if ChatGPT doesn’t take over the world, such technologies will likely replace traditional web search. If so, the trend will probably make the internet less open. There’s little reason to publish novel content for free if instead of being seen by humans, it’s going to be regurgitated without attribution by an ML algorithm.

I also worry that the models will harm us in another way: by making it cheap, at practically unlimited scale, to carry out spear-phishing campaigns, simulate grassroots support for products or brands, or sway public opinion with automated political discourse. We will survive, but the internet as we know it might not.

Light LLM punditry continues here.

I’ve just asked ChatGPT the following question:

> Is there a reason to publish content for free only to have it regurgitated without any attribution by an ML algorithm?

Here is the answer:

> There is no inherent reason to publish content for free only to have it regurgitated without attribution by an ML algorithm. However, some people may choose to do so for a variety of reasons. For example, they may want to share information or ideas with a wide audience, and they may not be concerned with receiving attribution for their work. Additionally, publishing content for free and allowing it to be used by others, including ML algorithms, can help to promote the dissemination of information and ideas, which can ultimately benefit society as a whole.

It’s a very interesting post. More than bots becoming closer to humans in their behavior, the real concern, in my opinion, is the increasing number of people who become emotionally attached to technology. We already hear of teens who commit suicide because their parents temporarily take away their mobile phones or video games, or keep them off social media. We also make movies like “Her”, a very emotional story in which a man and an operating system fall deeply and intimately in love, and it says something when such movies become widely accepted and liked, and, worse, emulated. That’s the real concern. So in a world where hype controls our ability to think, we are already the real threat, more than technology.