There were no ancient computers and it's fine

A conservative take on the history of computing devices.

Last August, in an article about the history of the calculator, I opened with the following quip:

"I find it difficult to talk about the history of the computer. The actual record is dreadfully short: almost nothing of consequence happened before the year 1935. We keep looking for a better story, but we inevitably end up grasping at straws.

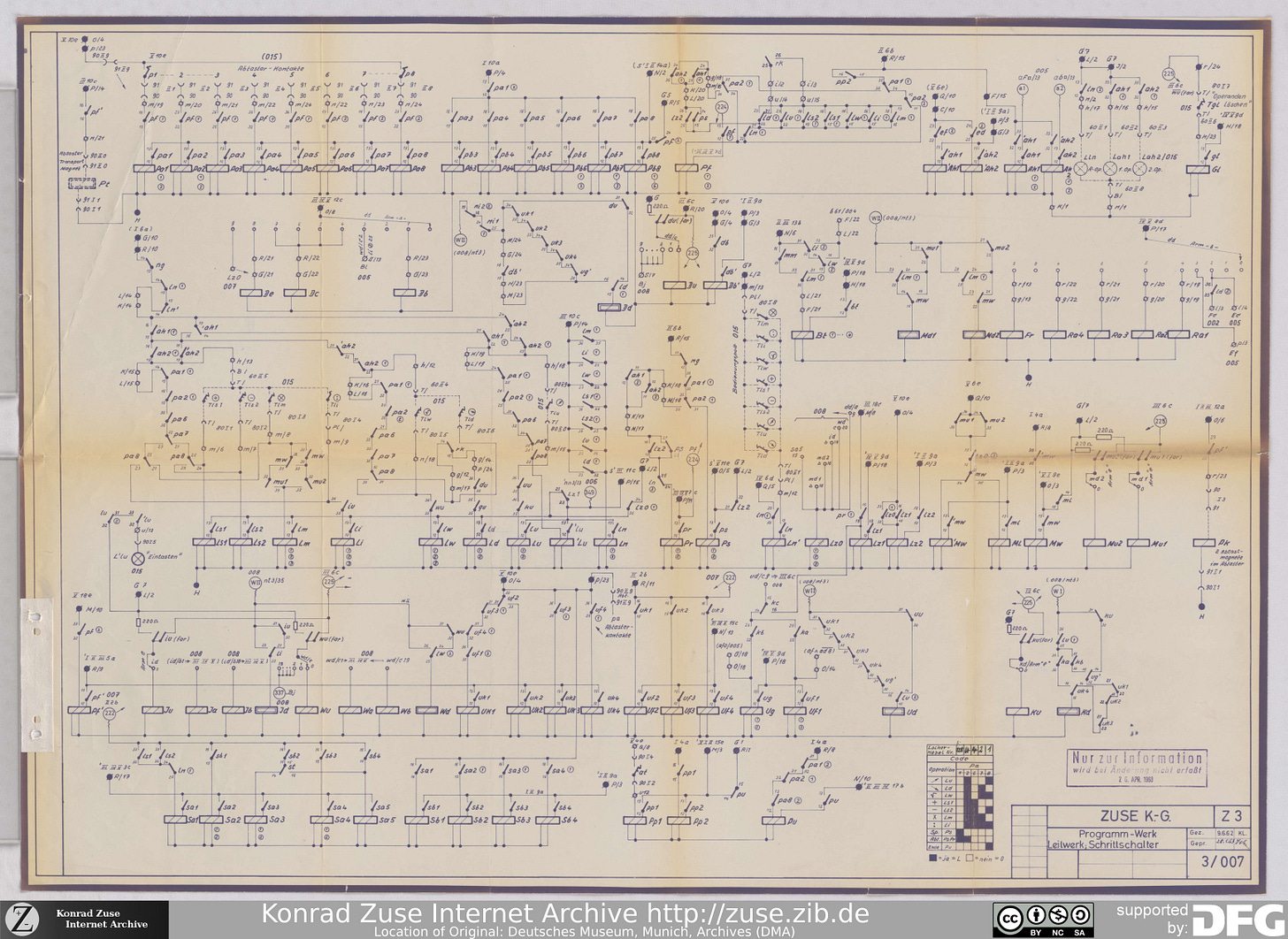

Just look at what we’ve done so far. The “father of the computer” is no longer Konrad Zuse (Z1, 1938) or John Mauchly (ENIAC, 1943). Somehow, we pivoted to Charles Babbage — a 19th century polymath who never constructed such a device, and had no luck inspiring others to try. Not content with this injustice, we also turned “computing” into a meaningless word. On Wikipedia, the timeline of computer hardware includes mechanical clocks, dolls, weaving looms, and a miniature chariot from 910 BCE. It’s historical synthesis run amok."

My point was simple: if a cuckoo clock or a differential gear qualifies as a “proto-computer”, what doesn’t meet the bar? Almost any man-made tool performs some sort of calculation. Let’s take a crowbar: it’s an instrument for multiplying force by a preset amount. Sure, it might not be Turing-complete, but it’s getting there!

I jest, but I wasn’t arguing for semantic purity just for the sake of it. My concern was that when we paint with overly broad strokes, much of the important detail is lost. Consider that Charles Babbage — the seemingly undisputed father of modern computing — had zero actual impact on the development of the computer; it can be quite illuminating to ponder why. Heck, even Alan Turing and John von Neumann — the two intellectual titans who laid the theoretical groundwork for computer science — probably weren’t essential to getting the underlying tech off the ground.

What is a computer, anyway?

Perhaps my beef is with the terminology itself: the word “computer” is imprecise and its meaning has evolved over time. That said, in contemporary usage, there’s no doubt that it means something more than a differential gear, an abacus, or a slide rule.

Yet, absent a clear definition, many historians apply the term to just about any calculating device they see. In this view, we’ve been using computers for millennia. The designs ranged from simple mechanical registers (such as the abacus), to automated lookup tables (slide rule), to clockwork contraptions such as the four-operation calculator pictured above. Move over, Charles Babbage: the general operating principle behind that last device has been known at least since the 1600s — although it wasn’t until the late 19th century that we learned how to mass-produce such mechanisms.

Again, it’s not that I wish to pointlessly debate semantics; my problem with this approach is that it obscures the nature of the revolution that happened after 1935. A better definition of a computer would include not just the words “designed for calculation” but also “programmable.” In other words, the device should consist not only of an arithmetic logic unit (ALU), but also a sequencing mechanism that activates different subsystems — and passes data around — according to a flexible, user-specified plan.
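To make the distinction a bit more concrete, here is a toy sketch in Python. It assumes a made-up instruction set: the SET/ADD/SUB/MUL opcodes and the `alu()` and `run()` helpers are hypothetical and not modeled on any real machine. `alu()` alone is just a calculator; `run()` plays the role of the sequencing mechanism, routing operands to the ALU and results back to registers according to a user-supplied plan. Conditional jumps are deliberately left out to keep it minimal.

```python
from typing import Dict, List, Tuple

# Hypothetical instruction format: (opcode, destination, operand, operand).
Instr = Tuple[str, ...]


def alu(op: str, a: int, b: int) -> int:
    """Arithmetic unit: on its own, this is only a calculator."""
    if op == "ADD":
        return a + b
    if op == "SUB":
        return a - b
    if op == "MUL":
        return a * b
    raise ValueError(f"unknown operation: {op}")


def run(program: List[Instr], regs: Dict[str, int]) -> Dict[str, int]:
    """Sequencer: steps through the plan in order, fetches operands from
    registers, hands them to the ALU, and stores the result."""
    for op, dst, *src in program:
        if op == "SET":  # SET dst, constant
            regs[dst] = int(src[0])
        else:            # OP dst, reg1, reg2
            regs[dst] = alu(op, regs[src[0]], regs[src[1]])
    return regs


# One possible plan: evaluate 3*x*x + 2*x + 1.
poly = [
    ("SET", "a", "3"),
    ("SET", "b", "2"),
    ("SET", "c", "1"),
    ("MUL", "t", "x", "x"),
    ("MUL", "t", "a", "t"),
    ("MUL", "u", "b", "x"),
    ("ADD", "t", "t", "u"),
    ("ADD", "t", "t", "c"),
]

print(run(poly, {"x": 4})["t"])  # 57
```

Swapping in a different list, such as `[("SUB", "d", "x", "y")]`, changes what the machine computes without touching `alu()` or `run()`; that separation between fixed hardware and a replaceable plan is the point of the “programmable” requirement.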

By that standard, all the non-programmable calculating devices — from notched sticks to the Atanasoff-Berry “computer” of 1942 — ought to be disqualified. The first true computers would probably be the creations of Konrad Zuse, followed by Howard Aiken’s Harvard Mark I; both of these designs cropped up in the late 1930s.

Some computer science nerds might also be tempted to add “Turing-complete” to the list of requirements. By that criterion, the ENIAC — constructed by John Mauchly and J. Presper Eckert in 1945 — gets to claim primacy. That said, it’s a wonky metric: many devices that are Turing-complete aren’t practical computers. Conversely, Konrad Zuse’s Z3 — although not 100% Turing-complete in the conventional sense — was unmistakably the real deal.

So… how did computers come to be?

The popular answer to this question is rather hand-wavy. As noted before, you learn about Charles Babbage as the inventor of the programmable computer, and then about Alan Turing and John von Neumann as the duo who teamed up (?) to make Mr. Babbage’s dream come true. So pervasive is the cult of these three personalities that even in the Wikipedia article on Harvard Mark I, Mr. Babbage and Mr. von Neumann are name-dropped before any mention of Mr. Aiken, the actual inventor of the device.

Of course, this story doesn’t quite add up. Charles Babbage was a successful designer of advanced and elegant mechanical calculators, but such devices existed long before his time. So did commercially successful programmable mechanisms, notably the punch-card-operated weaving loom designed by Joseph Marie Jacquard in 1804.

The bottleneck wasn’t conceptual; it was technical. We knew how to do calculation and we knew how to do the sequencing of operations; the challenge was the effortless propagation of data from one portion of the device to another. This was nearly impossible to do with sufficient flexibility, reliability, and scalability in the domain of sprockets, cams, and pawls. It’s the reason why Mr. Babbage’s Analytical Engine proved to be nothing but a pipe dream — and why even today, we don’t see hobbyists with CNC mills cranking out copies of his grand design.

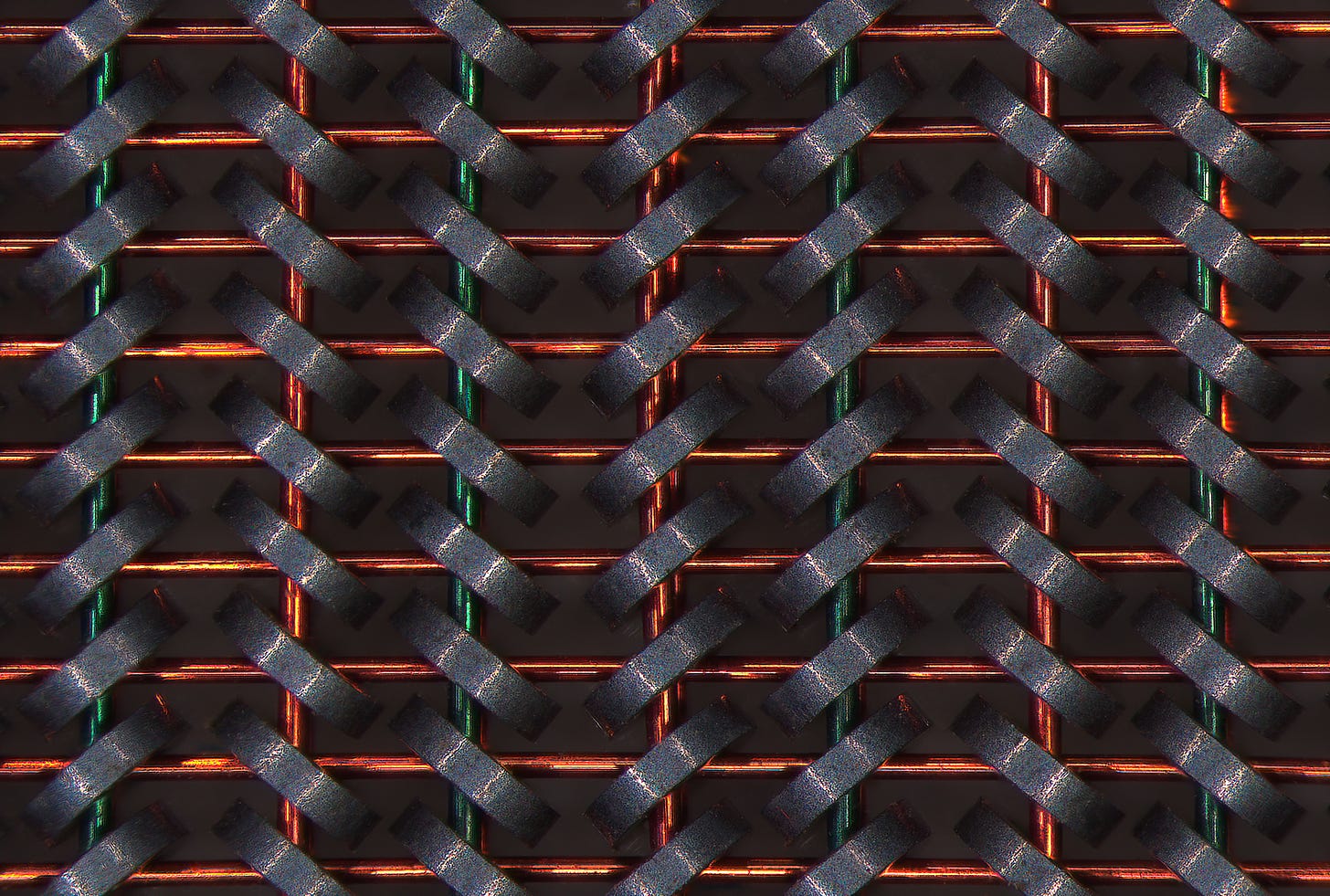

It follows that some of the most important names in early computing might not be Charles Babbage, Alan Turing, or John von Neumann — but the people who came up with early forms of electronic memory and thus made it simple to store and propagate data within increasingly complex calculating devices. In particular, the inventors of the humble yet enduring flip-flop circuit — William Eccles and Frank Wilfred Jordan — deserve far more recognition than they have ever gotten for their work.
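Why a flip-flop counts as memory is easiest to see in a small simulation. The Eccles-Jordan circuit was built from vacuum tubes; the Python sketch below swaps in the textbook logic-level abstraction instead, an SR latch made of two cross-coupled NOR gates, so it illustrates the principle rather than their actual circuit. The `nor()` helper, the `SRLatch` class, and the assumed power-up state are all hypothetical.

```python
def nor(a: int, b: int) -> int:
    """NOR gate on 0/1 values: outputs 1 only when both inputs are 0."""
    return 0 if (a or b) else 1


class SRLatch:
    """Logic-level model of a 1-bit memory cell: two NOR gates whose
    outputs are wired into each other's inputs."""

    def __init__(self) -> None:
        # Assumed power-up state: the cell holds 0.
        self.q, self.q_bar = 0, 1

    def step(self, s: int, r: int) -> int:
        """Apply set/reset inputs and return the stored bit."""
        if s and r:
            raise ValueError("S=R=1 is the latch's disallowed input")
        # Re-evaluate both gates a few times so the feedback loop can settle.
        for _ in range(4):
            q = nor(r, self.q_bar)
            q_bar = nor(s, q)
            if (q, q_bar) == (self.q, self.q_bar):
                break  # outputs stopped changing: stable state reached
            self.q, self.q_bar = q, q_bar
        return self.q


cell = SRLatch()
print(cell.step(1, 0))  # set:   1
print(cell.step(0, 0))  # hold:  1 (the bit is remembered)
print(cell.step(0, 1))  # reset: 0
print(cell.step(0, 0))  # hold:  0
```

The interesting case is S=0, R=0: nothing is driving the cell, yet it keeps reporting whatever was last written, purely because each gate holds the other in place.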

Your point being…?

Well, that’s a good question! Perhaps it’s this: in a world where the era of programmable computers begins with Zuse’s Z1 or Harvard Mark I, there are still plenty of interesting stories to tell. In a world where it all goes back to the wheel and the pointed stick, the actual challenges, and the ingenuity that went into overcoming them, are easy to miss.

Of course, I get it: we want to be inspired. We imagine Mr. Babbage as a misunderstood Victorian scientist in the mold of Nikola Tesla. We lament the fate of Mr. Turing, a WWII hero betrayed by his country. We look up to Ada Lovelace, a role model for women engineers around the globe. These are good stories; we should keep telling them. In comparison, Konrad Zuse’s Nazi-era biopic might not be a shoo-in.

Early- to mid-20th-century automatic telephone exchanges were some of the largest programmable machines, besides computers, ever manufactured. Telephone switching was also one of the first embedded applications of computers (in the 1960s). In the 1930s, Claude Shannon showed how Boolean logic could be used to describe and simplify electrical switching circuits symbolically. This came out of the quest to simplify the telephone network, with its millions upon millions of automatically switched relays, and it turned out to be directly applicable to designing computers. The drive to connect people efficiently and at scale through telegraph and telephone systems, as both an economic and a social force, is a major chapter in the story of where computers came from.
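The core of the switching-circuit idea mentioned above can be sketched in a few lines of Python: contacts wired in series conduct only if all are closed (AND), and contacts in parallel conduct if any is closed (OR). This is a minimal illustration in the modern convention (True means a closed contact), which is not necessarily the notation Shannon himself used, and the two example networks are made up. It checks by brute force that a four-contact network and a three-contact rearrangement derived from the distributive law behave identically, which is the kind of simplification Boolean algebra makes possible.

```python
from itertools import product
from typing import Callable


def series(*contacts: bool) -> bool:
    """Contacts in series: current flows only if every contact is closed."""
    return all(contacts)


def parallel(*contacts: bool) -> bool:
    """Contacts in parallel: current flows if any contact is closed."""
    return any(contacts)


def original(a: bool, b: bool, c: bool) -> bool:
    """Hypothetical network, four contacts: (a then b) alongside (a then c)."""
    return parallel(series(a, b), series(a, c))


def simplified(a: bool, b: bool, c: bool) -> bool:
    """Factored network, three contacts: a in series with (b parallel c)."""
    return series(a, parallel(b, c))


def equivalent(f: Callable[..., bool], g: Callable[..., bool], n: int) -> bool:
    """Compare two networks over every combination of n contact states."""
    return all(f(*bits) == g(*bits) for bits in product([False, True], repeat=n))


print(equivalent(original, simplified, 3))  # True
```

Brute-force checking works here because three contacts have only eight states; the appeal of the algebraic approach is that the rearrangement can be derived symbolically before any relays are wired.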

While not exactly ‘ancient,’ I believe that typesetting machines were examples of early (pre-digital) computers. I actually wrote a paper about this in the American Printing History Association’s journal _Printing History_, issue 21, 2017.

https://johnlabovitz.com/publications/The-electric-typesetter--The-origins-of-computing-in-typography.pdf