In the previous article, I discussed the challenges of interfacing a full-color display to an 8-bit microcontroller. This made me reflect on how some of the technologies I grew up with — and then swiftly cast aside in favor of superior alternatives — are now making a comeback as fashion trends among the youth.

Given the short timescales involved, most of the retro chic stays true to the source material; for example, digital “VHS look” filters popular on social media usually look like the real thing: simulated video head tracking issues, muted contrast, blurry edges, and lots of color bleed.

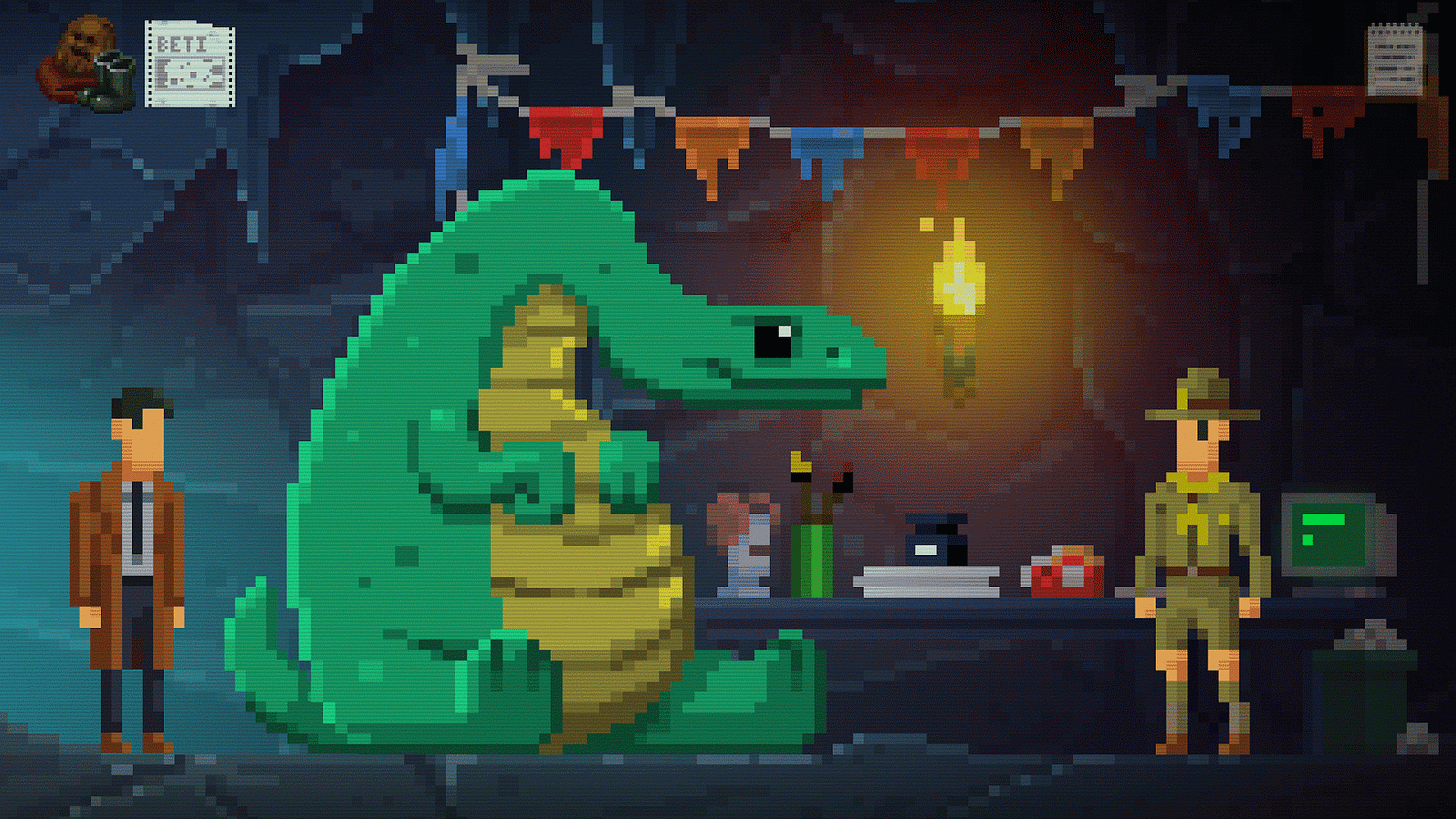

But then, that kind of historical accuracy is decidedly absent from the “8-bit” aesthetics that are enjoying a resurgence in computer game design:

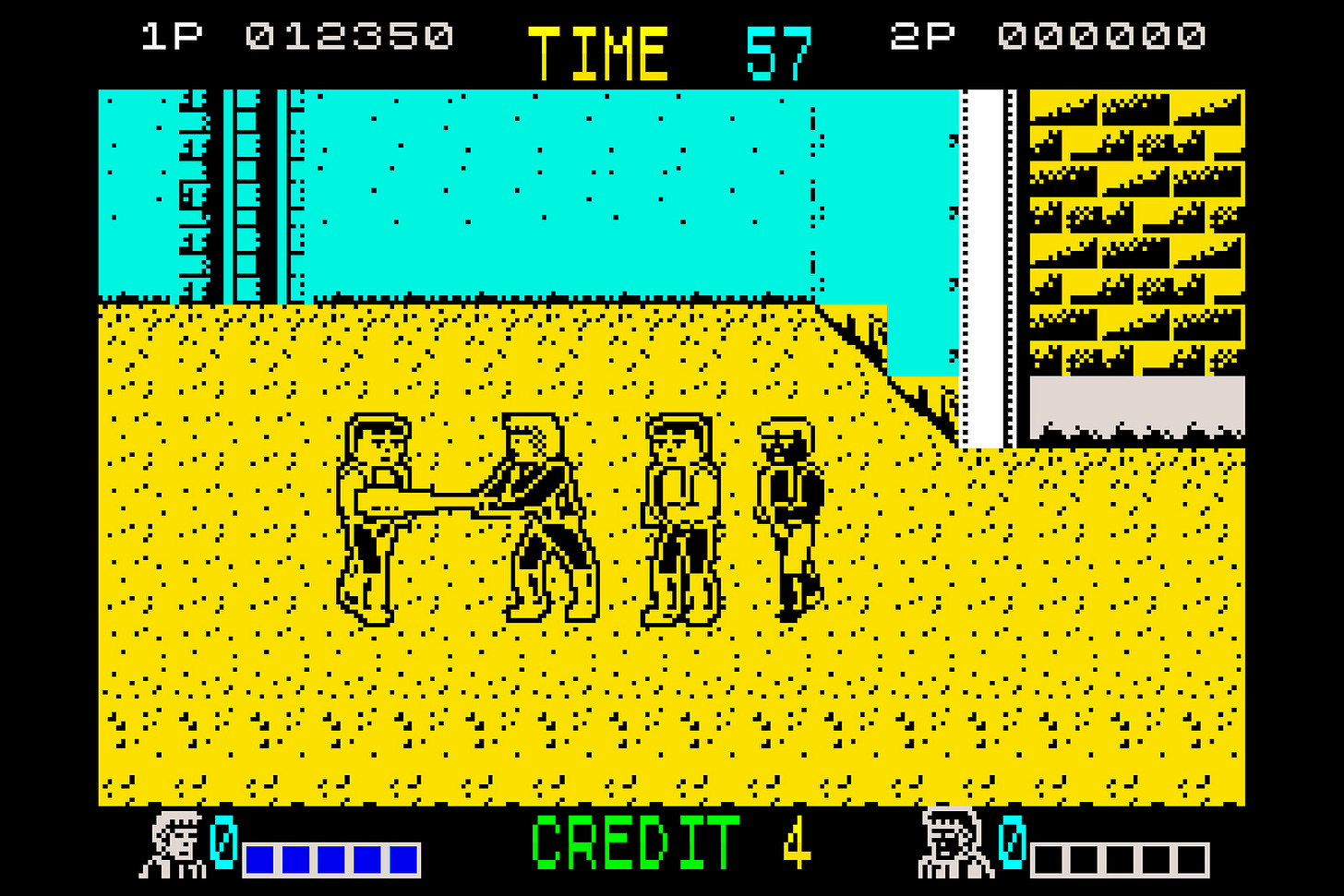

With exaggerated pixel sizes against the backdrop of pleasing color gradients, such visuals — present not only in indie adventure games, but in blockbusters such as Stardew Valley, Terraria, and Noita — look nothing like their namesakes. The games we played in the 1980s looked more like this:

There’s nothing fundamental about 8-bit computers that makes them ill-suited for drawing or animating beautiful pixel art. The problem in the 1980s wasn’t the number of bits that made up a CPU register; it was memory. A monochrome representation of the 256×192 screen on the ZX Spectrum could be stored in 6 kB; the same image in full color (24 bits per pixel) would take up 144 kB, far more than the computer had in the first place.
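
The arithmetic is easy to check. The sketch below assumes that “full color” means 24 bits per pixel, which is what the 144 kB figure implies; `framebuffer_bytes` is a hypothetical helper name used only for illustration.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to store width x height pixels at the given color depth."""
    return width * height * bits_per_pixel // 8


# ZX Spectrum screen: 256x192 pixels
mono = framebuffer_bytes(256, 192, 1)   # 1 bit per pixel: 6144 bytes
full = framebuffer_bytes(256, 192, 24)  # assumed 24-bit color: 147456 bytes

print(mono, mono // 1024, "kB")  # 6144 6 kB
print(full, full // 1024, "kB")  # 147456 144 kB
```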

In this particular instance, the engineers solved the problem by storing a high-resolution monochrome bitmap, and then adding a 32×24 attribute overlay that determined the display color for zeroes and ones across the entire underlying 8×8 pixel block. You could pick from eight hues and two intensity values. Forget gradients: with an effective color depth of 1.125 bits per pixel, it was difficult to cram more than two colors into an animated sprite.
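
For the curious, here is a minimal Python sketch of how one attribute byte maps onto its 8×8 cell, assuming the usual ZX Spectrum layout (bits 0–2 ink, bits 3–5 paper, bit 6 bright, bit 7 flash); the class and function names are hypothetical. It also recomputes the 1.125 bits-per-pixel figure from the 6,144-byte bitmap plus the 768-byte attribute area.

```python
from typing import NamedTuple, Tuple


class Attribute(NamedTuple):
    ink: int      # color index (0-7) for "1" bits in the cell
    paper: int    # color index (0-7) for "0" bits in the cell
    bright: bool  # intensity bit, shared by ink and paper
    flash: bool   # when set, ink and paper swap periodically


def decode_attribute(byte: int) -> Attribute:
    """Split one attribute byte into its fields (assumed standard layout)."""
    return Attribute(
        ink=byte & 0b111,
        paper=(byte >> 3) & 0b111,
        bright=bool(byte & 0x40),
        flash=bool(byte & 0x80),
    )


def pixel_color(bitmap_bit: int, attr: Attribute) -> Tuple[int, bool]:
    """Color index and brightness of a single pixel inside an 8x8 cell."""
    return (attr.ink if bitmap_bit else attr.paper, attr.bright)


BITMAP_BYTES = 256 * 192 // 8  # monochrome bitmap: 6144 bytes
ATTR_BYTES = 32 * 24           # one attribute byte per 8x8 cell: 768 bytes
bits_per_pixel = (BITMAP_BYTES + ATTR_BYTES) * 8 / (256 * 192)

print(bits_per_pixel)  # 1.125
```

Because every pixel in a cell shares one ink and one paper value, any sprite that overlaps a cell with a differently colored background forces both to the same pair of colors, which is the root of the Spectrum's famous “attribute clash.”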

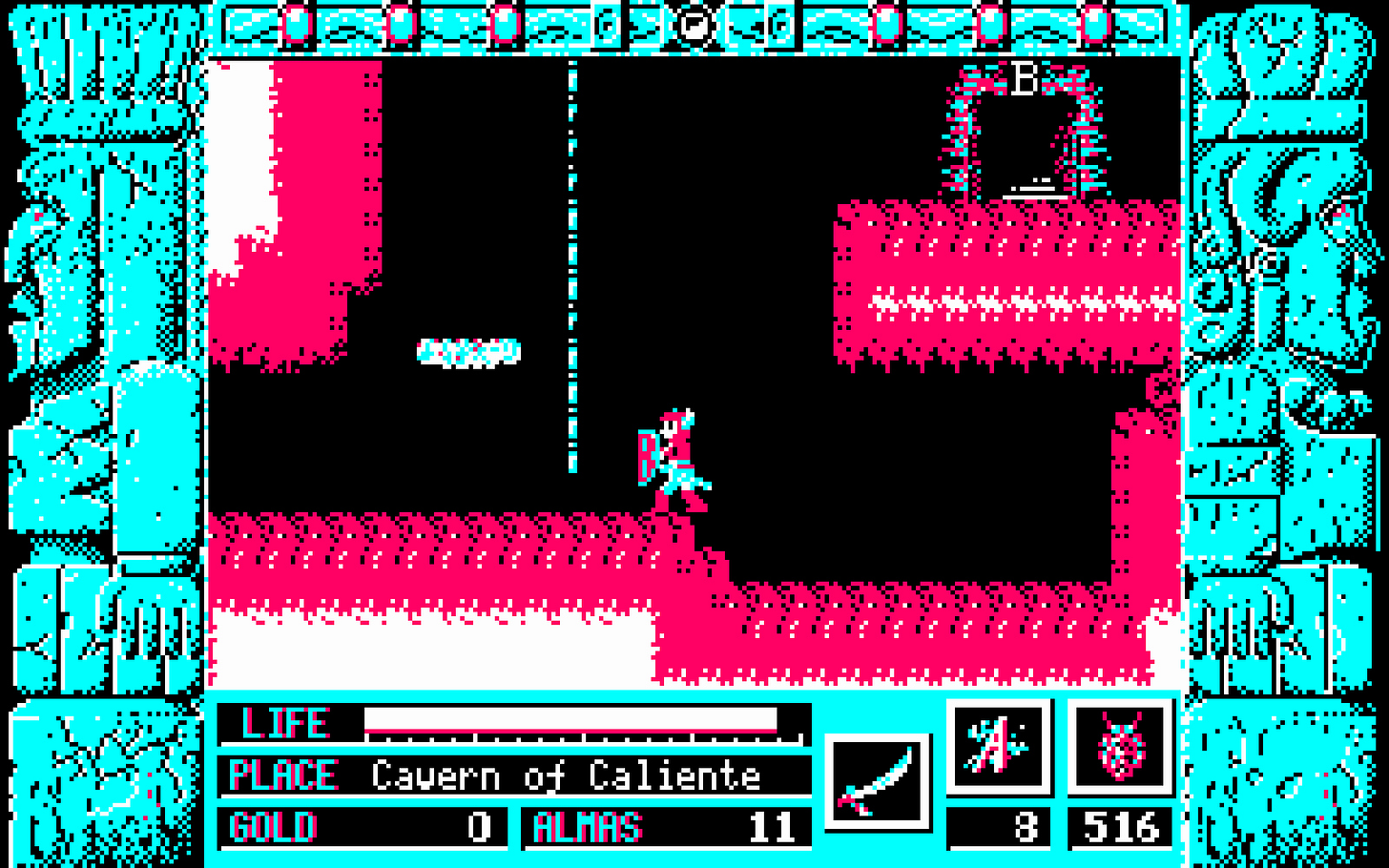

Tellingly, the same memory constraints also plagued early 16-bit systems; for example, the Color Graphics Adapter (CGA) cards used in IBM PC XT and AT computers supported 320×200 graphics at two bits per pixel. With four colors to spare, this screenshot from a CGA game runs the entire gamut:
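
To see why two bits per pixel was the practical ceiling, consider a sketch of pixel addressing in that 320×200 mode. It assumes the standard CGA arrangement: four pixels per byte with the leftmost in the top two bits, and even and odd scanlines stored in two interleaved banks 8 kB apart within the card's 16 kB of video memory. The helper names are hypothetical. A full frame takes 16,000 bytes, so a deeper color mode at this resolution simply would not fit.

```python
from typing import Tuple

WIDTH, HEIGHT = 320, 200
BYTES_PER_ROW = WIDTH * 2 // 8  # 80 bytes: four 2-bit pixels per byte
ODD_BANK = 0x2000               # assumed: odd scanlines start 8 kB into video memory
VRAM_SIZE = 0x4000              # 16 kB of CGA video memory


def pixel_offset(x: int, y: int) -> Tuple[int, int]:
    """Byte offset in video memory and bit shift for pixel (x, y)."""
    offset = (y % 2) * ODD_BANK + (y // 2) * BYTES_PER_ROW + x // 4
    shift = 6 - 2 * (x % 4)  # leftmost pixel of each byte sits in bits 7-6
    return offset, shift


def set_pixel(vram: bytearray, x: int, y: int, color: int) -> None:
    """Write a 2-bit color index (0-3) without disturbing neighboring pixels."""
    offset, shift = pixel_offset(x, y)
    cleared = vram[offset] & ~(0b11 << shift) & 0xFF
    vram[offset] = cleared | ((color & 0b11) << shift)


vram = bytearray(VRAM_SIZE)
set_pixel(vram, 1, 0, 3)
print(hex(vram[0]))  # 0x30
```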

What is now known as the “8-bit look” is actually reminiscent of computer graphics seen in the latter days of 16-bit devices, when memory was more plentiful. Canonical examples include the Amiga (512 kB of RAM), the Super Nintendo (192 kB), or the IBM PS/2 with a VGA card (256 kB of dedicated video memory).
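
The jump is easy to quantify for VGA's 320×200 256-color mode (commonly called mode 13h; this is an illustrative choice, not a claim about any particular game). Each pixel is a one-byte palette index, so a frame needs 64,000 bytes, roughly a quarter of the card's 256 kB. Palette entries are 6 bits per channel, which is enough for the smooth ramps that modern “8-bit” art favors. A hedged sketch, with hypothetical helper names:

```python
from typing import List, Tuple

WIDTH, HEIGHT = 320, 200
FRAME_BYTES = WIDTH * HEIGHT  # one palette index per pixel: 64,000 bytes
VGA_MEMORY = 256 * 1024       # 256 kB of video memory


def dac_to_rgb888(r6: int, g6: int, b6: int) -> Tuple[int, int, int]:
    """Expand a 6-bit-per-channel palette entry (0-63) to 8 bits per channel."""
    r, g, b = ((c << 2) | (c >> 4) for c in (r6, g6, b6))
    return (r, g, b)


def grey_ramp(steps: int = 64) -> List[Tuple[int, int, int]]:
    """A smooth grey gradient occupying `steps` palette slots (steps <= 64)."""
    return [dac_to_rgb888(i, i, i) for i in range(steps)]


palette = grey_ramp()
print(FRAME_BYTES, len(palette), palette[-1])  # 64000 64 (255, 255, 255)
```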

In contrast to memory, the advances in CPU power did relatively little for the visuals in traditional game genres such as side scrollers; that said, the tech eventually permitted new gaming paradigms to flourish. This notably included 2.5D first-person shooters, where the entire active game area had to be recomputed between frames, so you needed fast arithmetic and asynchronous memory transfers (DMA) to pull it off. The first truly successful example of the genre was Wolfenstein 3D, which debuted in 1992 for 80286-based systems with VGA cards.

The rest, as they say, is history.

It's a little bit unfair to compare old CGA-style graphics by how many colors can be used *per pixel*. Old CGA graphics often depended on the blurriness of pixels on a CRT screen, so sometimes several adjacent pixels were combined to produce a color at a specific spot. CGA graphics look abysmal on an LCD, but with the right hardware, everything falls into place. Of course, it's still far from 16-bit graphics, but let's compare them with our eyes as the point of view, not the pixel.

https://www.youtube.com/watch?v=niKblgZupOc (from 4:54)

Yeah, good point. I think those pictures look particularly bad though? It was possible to use custom characters as tiles to draw nicer things:

https://www.c64-wiki.com/wiki/Ultima_III_%E2%80%93_Exodus