Regular readers know that I try not to assume prior knowledge of calculus in my articles on electronics. I think that even among geeks, relatively few folks are fluent in it. Many college calculus classes are a rapid-fire succession of poorly explained formulas that take a long time to memorize and only a moment to forget. Sometimes, the route is unavoidable; but most of the time, EE articles lean on it for no reason at all.

Today, I’d like to take an angry swipe at the derivation of the formula for the root-mean-square voltage (Vrms) that is currently the #1 hit on Google:

I think we can do better than that.

Hol’ up, what’s root-mean-square voltage?

This section assumes familiarity with voltage and resistance. If you need a refresher, review this article first.

Every now and then, we want to figure out the power that’s dissipated when we supply a voltage-based signal to a known resistance. A good example is sizing the amplifier circuitry for driving an audio speaker. Modern solid-state amplifiers generally deal with voltages. Speaker coils have a specified resistance and a power limit expressed in watts.

In the article linked above, we derived the following formula:

P = V² / R

This equation makes sense if the voltage is constant — but what if the signal changes over time?

To answer that question, let’s study a toy example of a 10 Ω load and a supply voltage that changes in three discrete steps of equal duration: 1 V, 10 V, back to 1 V. In this setup, each time slice is essentially its own “steady state” scenario, so we can split the calculations accordingly:

P0 = (1 V)² / 10 Ω = 0.1 W, P1 = (10 V)² / 10 Ω = 10 W, and P2 = (1 V)² / 10 Ω = 0.1 W

With this done, we can compute a simple arithmetic mean to get the average power dissipated by the load over time:

Pavg = (0.1 W + 10 W + 0.1 W) / 3 = 3.4 W
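If you'd rather see this as code, here is a minimal Python sketch of the same slice-by-slice calculation. The names `slice_powers` and `mean` are made up for illustration; the numbers are the toy values from the text.

```python
# Toy example from the text: a 10 ohm load and three equal-duration slices.
R = 10.0                     # load resistance, in ohms
voltages = [1.0, 10.0, 1.0]  # supply voltage in each time slice, in volts

def slice_powers(voltages, resistance):
    """Power dissipated in each slice, using P = V^2 / R."""
    return [v ** 2 / resistance for v in voltages]

def mean(values):
    """Plain arithmetic mean."""
    return sum(values) / len(values)

powers = slice_powers(voltages, R)   # roughly 0.1 W, 10 W, 0.1 W
average_power = mean(powers)         # roughly 3.4 W
print(round(average_power, 6))
```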

The result is correct, but this method of calculating the value is not always convenient. For example, a typical song consists of around 15 million audio samples; we can calculate the power associated with each sample, but then we’d need to redo the entire calculation to figure out the result for a different position of the volume knob. It would be better to find a single, synthetic DC voltage that works out to the same amount of power dissipation as the AC waveform. Equipped with this, we could model the effects of attenuating or amplifying the signal with more ease.

But how do we calculate that equivalent voltage? Is it just an arithmetic mean of the voltages in each time slice? Let’s continue with our three-voltage scenario and see if the numbers work out:

Vavg = (1 V + 10 V + 1 V) / 3 = 4 V, which would give P = (4 V)² / 10 Ω = 1.6 W

The results don’t match. The problem is the V² term in the power equation: the square of a sum is not the same as the sum of squares. To refine the approach, we can just solve the power equation for our toy example symbolically.
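The mismatch is easy to reproduce. The sketch below compares the "average the voltages first" shortcut against the slice-by-slice average for the same toy values; the variable names are illustrative only.

```python
R = 10.0                     # load resistance, in ohms
voltages = [1.0, 10.0, 1.0]  # volts, one per equal-duration slice

# Naive approach: average the voltages, then apply P = V^2 / R once.
mean_voltage = sum(voltages) / len(voltages)
naive_power = mean_voltage ** 2 / R

# Correct approach: apply P = V^2 / R per slice, then average the powers.
true_power = sum(v ** 2 / R for v in voltages) / len(voltages)

print(round(naive_power, 6), round(true_power, 6))
```

The naive figure comes out at about 1.6 W versus about 3.4 W for the real thing, because squaring the mean is not the same as taking the mean of the squares.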

The component equations are P0 = V0² / R, P1 = V1² / R, and P2 = V2² / R, so:

Pavg = (P0 + P1 + P2) / 3 = (V0² / R + V1² / R + V2² / R) / 3

Upon closer inspection, we can restate the result as:

Pavg = [(V0² + V1² + V2²) / 3] · (1 / R)

The first half of the expression is just an arithmetic mean of the squares of voltages, sitting right where the V² part of the constant-voltage formula used to be. Another way to put this is that we’re squaring the original waveform first, and then calculating a standard arithmetic mean of that.

Anyway, let’s call that weird mean x and confirm that the math checks out:

x = (1² + 10² + 1²) / 3 = 102 / 3 = 34, so P = x / R = 34 / 10 = 3.4 W

But what is x in physical terms? It’s not a substitute for voltage in the original power expression; remember that it took the place of the square of voltage (V²). To get the power-equivalent voltage we talked about, we need to take the square root of this value.

And that’s what “root mean square” voltage (Vrms) is: it’s the square root (R) of the arithmetic mean (M) of the squared waveform (S). In this case, Vrms = √34 ≈ 5.831 V. We can model the effect that halving the amplitude of the input signal would have on the dissipated power simply by dividing Vrms by two.
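Here's a small Python sketch of the R-M-S recipe applied to the toy waveform, including the "halve the amplitude" shortcut. The helper name `rms` is my own choice for this example; nothing here is specific to any library.

```python
import math

def rms(samples):
    """Square root (R) of the mean (M) of the squared samples (S)."""
    return math.sqrt(sum(s ** 2 for s in samples) / len(samples))

R = 10.0                     # load resistance, in ohms
voltages = [1.0, 10.0, 1.0]  # volts, one per equal-duration slice

v_rms = rms(voltages)        # sqrt(34), about 5.831 V
power = v_rms ** 2 / R       # about 3.4 W, same as the slice-by-slice average

# Halving every sample halves Vrms, so there is no need to redo the sum.
halved_rms = rms([v / 2 for v in voltages])
halved_power = (v_rms / 2) ** 2 / R   # a quarter of the original power

print(round(v_rms, 3), round(power, 3), round(halved_power, 3))
```

Note that halving the voltage cuts the dissipated power to a quarter, again because of the V² term.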

The more practical case of a square wave that alternates between two voltages, Vlo and Vhi, and spends equal time at each, is analogous to the approach discussed earlier in this section, except that we only need to calculate the mean of two points instead of three:

Vrms = √((Vlo² + Vhi²) / 2)
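As a quick sketch, the two-point version might look like this in Python. The function name `square_wave_rms` is hypothetical, and the code assumes a 50% duty cycle, meaning the signal spends equal time at each level.

```python
import math

def square_wave_rms(v_lo, v_hi):
    """RMS of a square wave that spends equal time at v_lo and v_hi.

    Assumption: 50% duty cycle, so the mean of the squares is a plain
    two-point average.
    """
    return math.sqrt((v_lo ** 2 + v_hi ** 2) / 2)

symmetric = square_wave_rms(-5.0, 5.0)  # swings around 0 V
unipolar = square_wave_rms(0.0, 5.0)    # swings between 0 V and 5 V

print(symmetric, round(unipolar, 3))
```

For the symmetric wave, Vrms is simply the peak voltage; for the unipolar one, it works out to the peak divided by √2.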

But what about signals that change in a continuous way?

Vrms for sine waves

In analog electronics, we often work with sine signals — and that’s where Wikipedia usually hits you with calculus. Can we avoid this?

The answer is yes! As a reminder, to find the RMS of an arbitrary waveform, we need to calculate the square root (R) of the arithmetic mean (M) of the squared waveform (S).

We can start by squaring the waveform. The general equation for a sine wave signal of a given peak amplitude Vp is as follows:

V(t) = Vp · sin(2πft)

Here, f is the frequency and t is time.

Squaring the right-hand side yields:

Vp² · sin²(…)

The only part of the expression that changes over time is the sin²(…) function. Let’s use a new symbol, Sm, to represent its mean. This allows us to write the Vrms expression the following way:

Vrms = √(Vp² · Sm) = Vp · √Sm

Now, all we have to do is find Sm. To find the mean of any periodic waveform, we only need to consider a single cycle; the average of any repeated sequence is the same as the average of the basic repeating unit:
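A tiny Python check of that claim, using a made-up three-sample repeating unit (the values are arbitrary and chosen only so the arithmetic is exact):

```python
def mean(values):
    """Plain arithmetic mean."""
    return sum(values) / len(values)

one_cycle = [1.0, 4.0, 1.0]   # hypothetical repeating unit
five_cycles = one_cycle * 5   # the same unit repeated five times

print(mean(one_cycle), mean(five_cycles))
```

Both means are 2.0: repeating the unit multiplies the sum and the sample count by the same factor, so the ratio doesn't budge.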

The period of sin²(...) can’t be longer than the period of the sine function before squaring; in other words, we can safely limit ourselves to parameters between 0° and 360°, which correspond to a full cycle of a non-squared sine or cosine. But this still means we have an infinity of continuously-changing values to average!

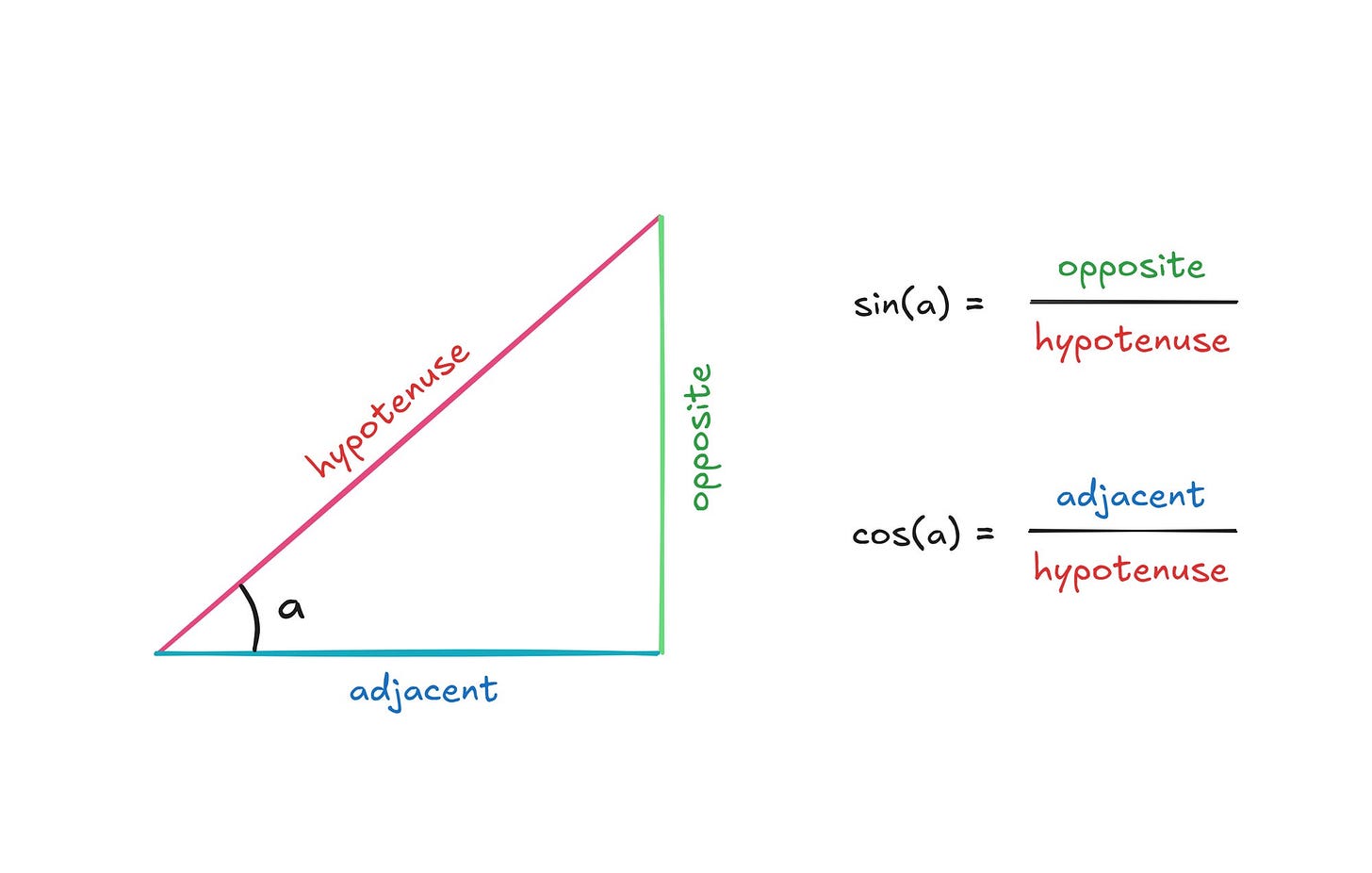

Luckily, trigonometry comes to the rescue. Let’s step away for a moment from the Vrms scenario and consider the general case of an ad-hoc right-angled triangle described by the usual trigonometric functions:

Let’s say that the length of the hypotenuse is 1. Further, let’s say we know the angle a. It should be clear that the height of the vertical edge is equal to 1 · sin(a) and the length of the horizontal edge is 1 · cos(a).

This being a right triangle, we can also describe the relationship between its sides using the Pythagorean theorem:

In our thought experiment, we said that the hypotenuse is 1 and that the remaining sides are given by the trigonometric functions of angle a. If we plug these values in, we get:

sin²(a) + cos²(a) = 1

This universal rule — a relationship between the squares of sine and cosine — is called the Pythagorean identity.
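If you don't trust triangles, a brute-force check is easy to sketch in Python. The helper name `pythagorean_sum` is invented for this example, and the one-degree step is an arbitrary choice.

```python
import math

def pythagorean_sum(angle_deg):
    """Return sin^2(a) + cos^2(a) for an angle given in degrees."""
    a = math.radians(angle_deg)
    return math.sin(a) ** 2 + math.cos(a) ** 2

# Largest deviation from 1 across a full turn, in one-degree steps.
worst_error = max(abs(pythagorean_sum(d) - 1.0) for d in range(360))
print(worst_error < 1e-12)
```

The deviation stays at the level of floating-point rounding, which is as close to "always 1" as a computer can get.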

Now, let’s circle back to the RMS conundrum. To explain the next step, imagine that you only put $5 bills in a piggy bank; it follows that the average value of the bills in the piggy bank is necessarily $5. In other words, the constraint placed on the constituent values carries over to the arithmetic mean.

Moments ago, we established that for every given a, the value of sin²(a) + cos²(a) is equal to 1. It follows that the average of sin²(a) + cos²(a) values across any range of a must also be 1:

As a matter of basic arithmetic, any long fraction like that can be split into two shorter fractions that group the elements some other way, e.g.:

In this instance, it means that we can split out the mean of any number of sin²(a) + cos²(a) expressions into a sum of two independent means of sin²(a) and cos²(a) values:

We previously introduced Sm to denote the arithmetic mean of sin²(…); adopting Cm for cos²(…), we can simplify this new finding to:

Sm + Cm = 1
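The "split the fraction" step can be sanity-checked numerically. The sketch below samples one angle per degree, which is an assumption made for the demo rather than anything the argument depends on, and compares the mean of the sums against the sum of the means.

```python
import math

def mean(values):
    """Plain arithmetic mean."""
    return sum(values) / len(values)

# Assumption: 360 evenly spaced angles, one per degree of a full cycle.
angles = [math.radians(d) for d in range(360)]
sin_sq = [math.sin(a) ** 2 for a in angles]
cos_sq = [math.cos(a) ** 2 for a in angles]

mean_of_sums = mean([s + c for s, c in zip(sin_sq, cos_sq)])
sum_of_means = mean(sin_sq) + mean(cos_sq)   # this is Sm + Cm

print(round(mean_of_sums, 9), round(sum_of_means, 9))
```

Both figures agree with each other and with 1, up to floating-point rounding.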

Earlier on, we asserted that it’s sufficient to calculate the arithmetic mean of each function in the span from 0° to 360°; because the function repeats, the mean over any multiple of a full cycle will be the same as the mean over the basic repeating unit.

Both the sine function and the cosine function complete one cycle; they produce two identical waveforms, just offset in time. For every sin(…) = 0.5, there will be exactly one corresponding point where cos(…) = 0.5:

In fact, we can illustrate this by doing some advanced mathematical surgery on the cosine part:

cos(a) = sin(a + 90°)

Because the waveforms are essentially identical within our chosen analysis window, the full-cycle average of sin(a) is equal to the full-cycle average of cos(a). Squaring each value of sin(a) and cos(a) doesn’t alter this relationship: we end up with new curves, but they still line up and there’s a continued 1:1 correspondence between any two points on each. The period-mean of sin²(a) must be the same as the period-mean of cos²(a). This observation finally allows us to figure out the value of Sm:

Sm = Cm and Sm + Cm = 1, so 2 · Sm = 1 and Sm = ½
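For the skeptics, here's a rough numerical check of the symmetry argument. It samples one point per degree over a full cycle (an arbitrary resolution picked for this sketch) and also confirms that the cosine is just the sine shifted by 90°.

```python
import math

def mean(values):
    """Plain arithmetic mean."""
    return sum(values) / len(values)

degrees = range(360)  # one sample per degree, covering one full cycle

s_m = mean([math.sin(math.radians(d)) ** 2 for d in degrees])
c_m = mean([math.cos(math.radians(d)) ** 2 for d in degrees])

# The cosine is the sine shifted by a quarter of a cycle.
shift_holds = all(
    abs(math.cos(math.radians(d)) - math.sin(math.radians(d + 90))) < 1e-12
    for d in degrees
)

print(round(s_m, 9), round(c_m, 9), shift_holds)
```

Both means land on 0.5 to within rounding error, matching the value we reasoned our way to without any integrals.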

In other words, we’ve established that the mean of the sin²(…) waveform between 0° and 360° is ½. Plugging this into the earlier Vrms equation, we get the universal formula for a 0 V-centered sine wave with a peak amplitude of Vp:

Vrms = √(Vp² · ½) = Vp / √2 ≈ 0.707 · Vp
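To close the loop, this sketch compares the closed-form result against a brute-force RMS computed from samples of one sine cycle. The function names and the 1,000-samples-per-cycle default are assumptions made for illustration.

```python
import math

def sine_rms_sampled(v_peak, samples_per_cycle=1000):
    """Estimate the RMS of one full cycle of a 0 V-centered sine wave
    from evenly spaced samples."""
    samples = [
        v_peak * math.sin(2 * math.pi * n / samples_per_cycle)
        for n in range(samples_per_cycle)
    ]
    return math.sqrt(sum(s ** 2 for s in samples) / len(samples))

def sine_rms_formula(v_peak):
    """Closed-form result: Vrms = Vp / sqrt(2)."""
    return v_peak / math.sqrt(2)

sampled = sine_rms_sampled(10.0)
exact = sine_rms_formula(10.0)   # about 7.071 V for a 10 V peak
print(round(sampled, 6), round(exact, 6))
```

The two numbers agree, so a sine wave with a 10 V peak heats a resistor about as much as a steady 7.07 V would.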

As they say in France, viola!

👉 For an application of RMS to modeling thermal noise and its relationship with statistics, check out this followup article. For a catalog of my other articles about electronics and math, click here.


Beautiful analysis. Could you write a post for when V/I are out of sync? Please keep posting!

I enjoy your writing, but calculus often makes everything easier. Extensive algebra is far more difficult than that. I cannot be the only one who hated his professor's remark "and the rest is SIMPLE algebra."

Calculus is pretty much standard for university bound high school students these days. Outside of those studying humanities, it is a prereq for pretty much anything (physics, economics, finance, engineering, computer science,...). If anything, I used calculus, stats and probability all the time in my twenties, but what I never did is complete all the calculations. We have computers for that. I have an old TI-92 calculator I bought 25 years ago to be able to deal with non-simple math in random places.

I think calculus is pretty essential for clear thinking. It was the first truly elegant thing I learned in school. It is everywhere. I think we should be teaching it to more people. The US would be a better place if more people had a basic command of calculus, and statistics. I have spent most of my life trying to explain facts to important people, and the world would be a much better place if the individuals running companies and public sector agencies could understand the most basic tools used in operations, finance and technology.

If you will excuse me, I need to get off this horse. I need a stool for how high I am sitting.