Sir, there's a cat in your mirror dimension

Pets do the darndest things, especially if you teach them a bit of math.

A while back, we talked about the frequency domain: a clever reinterpretation of everyday signals that translates them into the amplitudes of constituent waveforms. The most common basis for this operation is a set of sine waves running at increasing frequencies, but countless other waveforms can be used to create a number of alternative frequency domains.

In that earlier article, I also noted two important properties of frequency-domain transforms. First, they are reversible: you can recover the original (“time domain” or “spatial domain”) data from its frequency image. Second, the transforms have input-output symmetry: essentially the same mathematical operation, give or take details such as scaling, is used to go both ways. In effect, we have a lever that takes us to a mirror dimension and back. Which of the lever positions is called home is a matter of habit, not math.

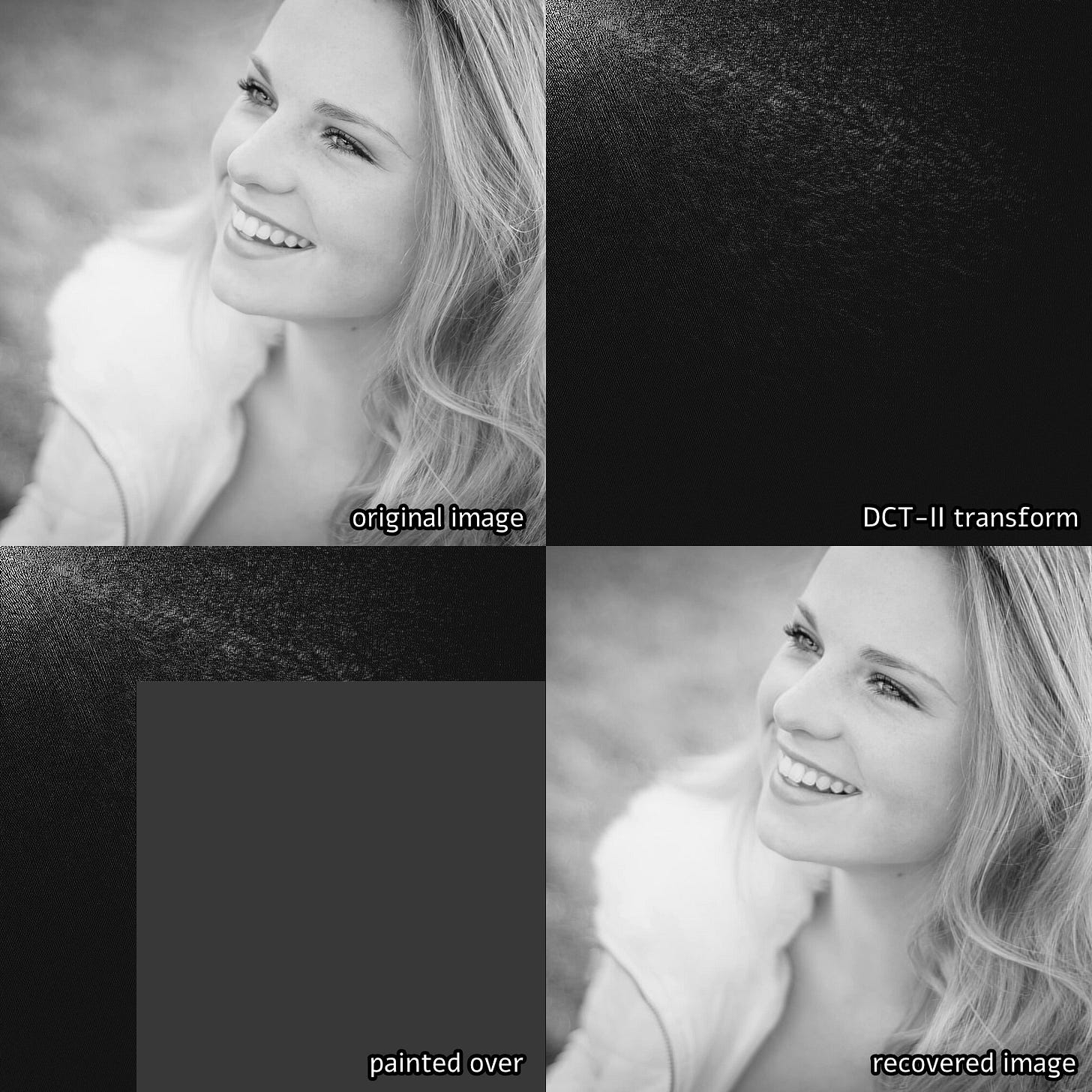
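To make the reversibility claim concrete, here is a minimal Python sketch that uses the discrete cosine transform as a stand-in for any frequency-domain transform. The pixel values and the helper names (`dct_matrix`, `apply`) are made up for illustration. With the orthonormal scaling assumed here, the return trip is the same matrix multiplication with the matrix transposed, so the two lever positions are mirror images of each other.

```python
import math

def dct_matrix(n):
    """Orthonormal DCT-II matrix; row k holds a cosine wave of frequency k."""
    rows = []
    for k in range(n):
        scale = math.sqrt((1 if k == 0 else 2) / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                     for i in range(n)])
    return rows

def apply(matrix, vector):
    """Multiply a matrix by a column vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

# Hypothetical row of eight pixel brightness values.
signal = [52.0, 55.0, 61.0, 66.0, 70.0, 61.0, 64.0, 73.0]

forward = dct_matrix(len(signal))
backward = [list(col) for col in zip(*forward)]  # transpose of the forward matrix

spectrum = apply(forward, signal)      # "home" -> mirror dimension
recovered = apply(backward, spectrum)  # mirror dimension -> "home"

max_error = max(abs(a - b) for a, b in zip(signal, recovered))
print("spectrum:", [round(c, 2) for c in spectrum])
print("largest round-trip error:", max_error)
```

The round-trip error should be nothing more than floating-point dust, and the total energy (sum of squares) is the same on both sides of the lever.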

Of course, in real life, the distinction matters — and it’s particularly important for compression. If you take an image, convert it to the frequency-domain representation, and then reduce the precision of (or outright obliterate!) the high-frequency components, the resulting image still looks perceptually the same — but you now have much less data to transmit or store:
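Here is a one-dimensional sketch of that idea in Python. It is not what JPEG does in detail; it simply transforms a row of made-up pixel values with an orthonormal DCT, zeroes the upper half of the coefficients, and transforms back. The helper names and the keep-half cutoff are assumptions chosen for illustration.

```python
import math

def dct_matrix(n):
    """Orthonormal DCT-II matrix; row k holds a cosine wave of frequency k."""
    rows = []
    for k in range(n):
        scale = math.sqrt((1 if k == 0 else 2) / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                     for i in range(n)])
    return rows

def apply(matrix, vector):
    """Multiply a matrix by a column vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

# Hypothetical row of eight pixel brightness values.
signal = [52.0, 55.0, 61.0, 66.0, 70.0, 61.0, 64.0, 73.0]
n = len(signal)

forward = dct_matrix(n)
backward = [list(col) for col in zip(*forward)]  # inverse = transpose

spectrum = apply(forward, signal)

# Keep the low-frequency half, obliterate the high-frequency half.
keep = n // 2
truncated = spectrum[:keep] + [0.0] * (n - keep)
approx = apply(backward, truncated)

total_energy = sum(c * c for c in spectrum)
dropped_energy = sum(c * c for c in spectrum[keep:])
error_energy = sum((a - b) ** 2 for a, b in zip(signal, approx))

print("original:     ", signal)
print("reconstructed:", [round(v, 1) for v in approx])
print("share of energy discarded:", dropped_energy / total_energy)
```

Because the transform is orthonormal, the squared reconstruction error equals the energy of the discarded coefficients. For smooth data like this, that is a small fraction of the total even though half of the numbers are gone.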

This makes you wonder: if the frequency-domain representation of a typical image looks like diffuse noise, if most of it is perceptually unimportant, and if the transform is just a lever that takes us back and forth between two functionally equivalent dimensions… could we start calling that mirror dimension home and move some stuff in?

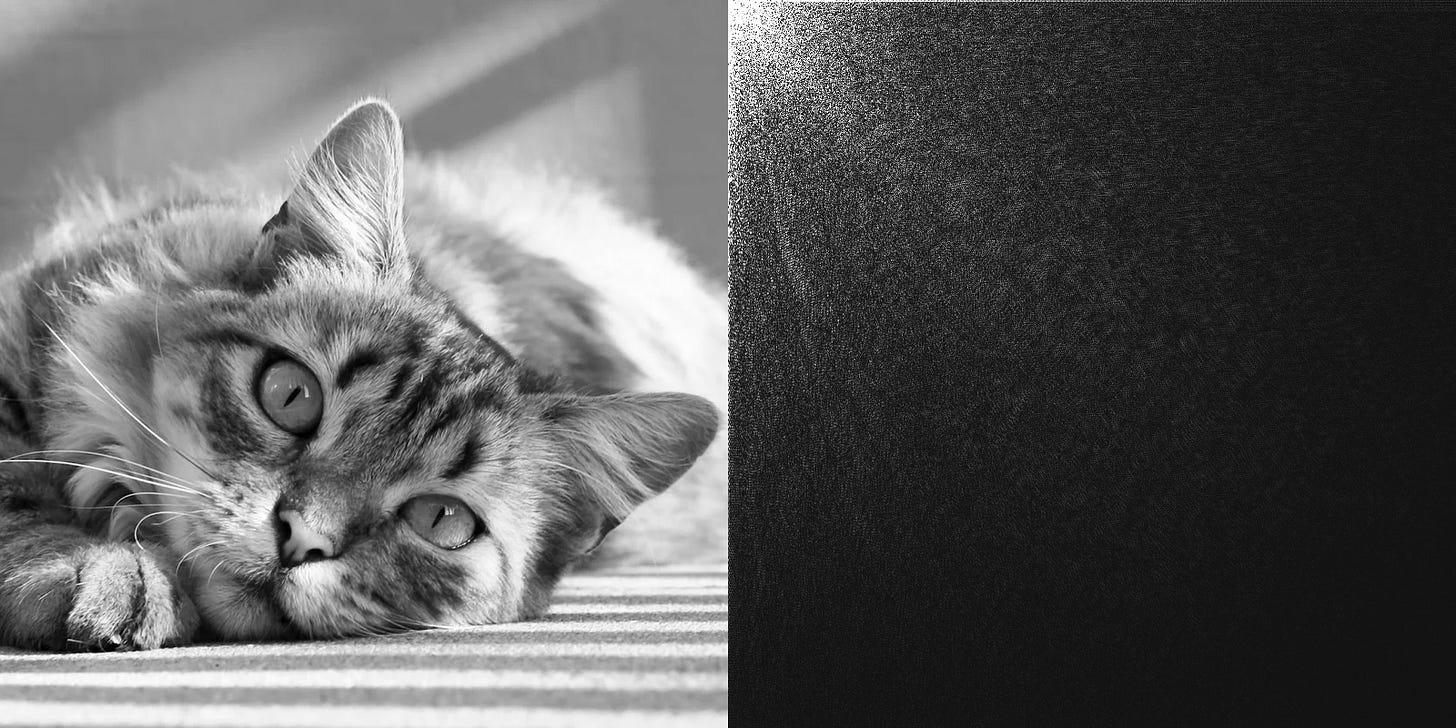

To answer this stoner question, I grabbed a photo of a cat and then calculated its frequency-domain form with the discrete cosine transform (DCT):

Next, I reused the photo of a woman from an earlier example and placed the mirror-dimension “cat noise” pattern over it, dialing down opacity to minimize visible artifacts:

The compositing operation is necessarily lossy, but my theory was that if the composite image is run through DCT to compute its frequency-domain representation, the photo of the woman would decompose into fairly uniform noise, perhaps easy to attenuate with a gentle blur, while the injected “cat noise” would coalesce into a perceptible image of a cat.

But would it?… Yes!
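The reason this works is linearity: the DCT of a blend is the same blend of the DCTs. Below is a toy Python sketch of the experiment on an 8×8 grid. Several things in it are assumptions and not the article's exact method: the host is an artificial smooth gradient, the hidden picture is a small hand-drawn glyph, the helper names are hypothetical, and the pattern is produced with the inverse DCT so that the arithmetic comes out exact (the article uses the forward DCT on both sides). Clipping and rounding to 8-bit pixels are not modeled.

```python
import math

def dct_matrix(n):
    """Orthonormal DCT-II matrix; row k holds a cosine wave of frequency k."""
    rows = []
    for k in range(n):
        scale = math.sqrt((1 if k == 0 else 2) / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                     for i in range(n)])
    return rows

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def dct2(image):
    """Forward 2D DCT of a square image: transform the columns, then the rows."""
    c = dct_matrix(len(image))
    return matmul(matmul(c, image), transpose(c))

def idct2(coeffs):
    """Inverse 2D DCT of a square coefficient array."""
    c = dct_matrix(len(coeffs))
    return matmul(matmul(transpose(c), coeffs), c)

N = 8
# Stand-in for the host photo: a smooth brightness gradient (an assumption).
host = [[100.0 + 6 * r + 4 * c for c in range(N)] for r in range(N)]

# Stand-in for the cat: a tiny glyph drawn directly in the frequency domain.
glyph = [
    "........",
    ".#....#.",
    ".##..##.",
    ".######.",
    ".#.##.#.",
    ".######.",
    "..####..",
    "........",
]
hidden = [[1.0 if ch == "#" else 0.0 for ch in row] for row in glyph]

# Turn the glyph into a spatial "noise" pattern and blend it in at low opacity.
opacity = 0.2
pattern = idct2(hidden)
composite = [[(1 - opacity) * h + opacity * p for h, p in zip(hrow, prow)]
             for hrow, prow in zip(host, pattern)]

# Pull the lever: the glyph reappears in the composite's frequency domain.
revealed = dct2(composite)
for row in revealed[1:]:
    print("".join("#" if v > opacity / 2 else "." for v in row[1:]))
```

The gradient host is deliberately tame: its spectrum sits entirely in the first row and first column, so everything else in `revealed` is the glyph scaled by `opacity`. A real photo leaks some energy into every coefficient, which is the noise that the gentle blur is meant to attenuate.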

If you want to see for yourself, download the composite image and have fun. In MATLAB, you can do the following:

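```matlab
% Load the composite image and display it in grayscale.
woman = imread("woman-with-cat.png");
colormap('gray');
imagesc(woman, [0 255]);
pause(1);

% Take the 2D DCT, then blur gently to attenuate the host photo's noise.
cat = dct2(woman);
imagesc(imgaussfilt(cat, 1), [-4 4]);
```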

Interestingly, the kitty survives resizing of the host image. Upscaling the host tiles the hidden image; downscaling truncates it.

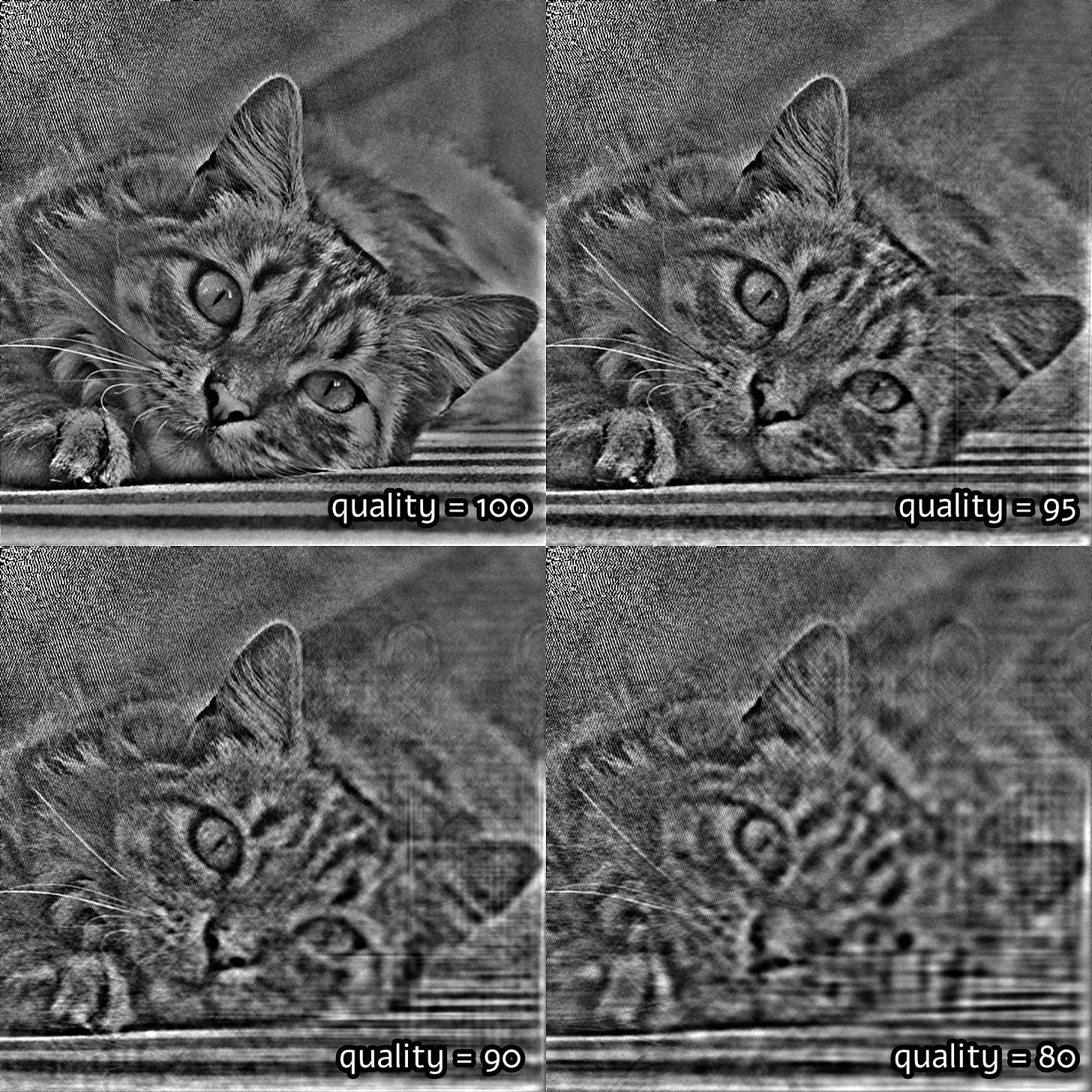
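One way to see where the tiling comes from is to work it out in one dimension. The Python sketch below assumes the upscaler simply doubles every pixel (nearest-neighbor resampling; the article does not say which method was used) and uses an unnormalized DCT-II on a made-up row of samples. Under those assumptions, the lower half of the upscaled spectrum is the original spectrum with mild attenuation, and the upper half is a mirrored, sign-flipped copy of it.

```python
import math

def dct(x):
    """Unnormalized DCT-II of a list of samples."""
    n = len(x)
    return [sum(v * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                for i, v in enumerate(x))
            for k in range(n)]

small = [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0]  # made-up row of pixels
big = [v for v in small for _ in range(2)]         # 2x upscale by pixel doubling

m, n = len(small), len(big)
s, b = dct(small), dct(big)

# Lower half: the original spectrum, attenuated slightly at higher frequencies.
lower = [2 * math.cos(math.pi * k / (2 * n)) * s[k] for k in range(m)]

# Upper half, read backwards from the end: a mirrored, sign-flipped copy.
upper = [-2 * math.sin(math.pi * j / (2 * n)) * s[j] for j in range(1, m)]

print("upscaled spectrum:", [round(v, 2) for v in b])
print("predicted lower:  ", [round(v, 2) for v in lower])
print("predicted mirror: ", [round(v, 2) for v in reversed(upper)])
```

Smoother interpolation would presumably fade the mirrored copy further. Downscaling is messier because neighboring pixels get merged, but to a first approximation it keeps only the low-frequency corner of the spectrum, which is the truncation mentioned above.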

My lingering question was how badly the cat would get mangled by lossy compression; as it turns out, the impact is less than I expected. At higher JPEG quality settings, the image looks quite OK. As the quality setting is lowered, the bottom-right quadrant, which corresponds to higher-frequency components, gets badly quantized:

This visualization offers a fascinating glimpse of just how much information is destroyed by the JPEG algorithm — mostly without us noticing.

There’s plenty of prior art for using audio spectrograms for hidden messages, and some discussion of text steganography piggybacked on top of JPEG DCT coefficients. My point isn’t that the technique is particularly useful or that it has absolutely no precedent. It’s just that the frequency domain and the time domain are coupled together in funny ways.


Bonus content: the deterioration of a "standalone" frequency-domain cat for various JPEG quality settings:

https://vimeo.com/940487310/8a929a5eb5

I had some fun a while back playing with the phase information: https://www.brainonfire.net/blog/2022/04/28/fourier-image-experiments/

I'm still not exactly sure how the API calls I was making relate to the Fourier Transform I learned briefly in school; in particular, I'm a little unclear on how the 2D image is processed. It looks like one dimension is processed first, then the other, so you get anisotropic effects.