Radios, how do they work?

A brief introduction to antennas, superheterodyne receivers, and signal modulation schemes.

Radio communications play a key role in modern electronics, but to a hobbyist, the underlying theory is hard to parse. We get the general idea, of course: we know about frequencies and can probably explain the difference between amplitude modulation and frequency modulation. Yet, most of us find it difficult to articulate what makes a good antenna, or how a receiver can tune in to a specific frequency and ignore everything else.

In today’s article, I’m hoping to provide an introduction to radio that’s free of ham jargon and advanced math. To do so, I’m leaning on the concepts discussed in three earlier articles on this blog:

If you’re rusty on any of the above, I recommend jogging your memory first.

Let’s build an antenna

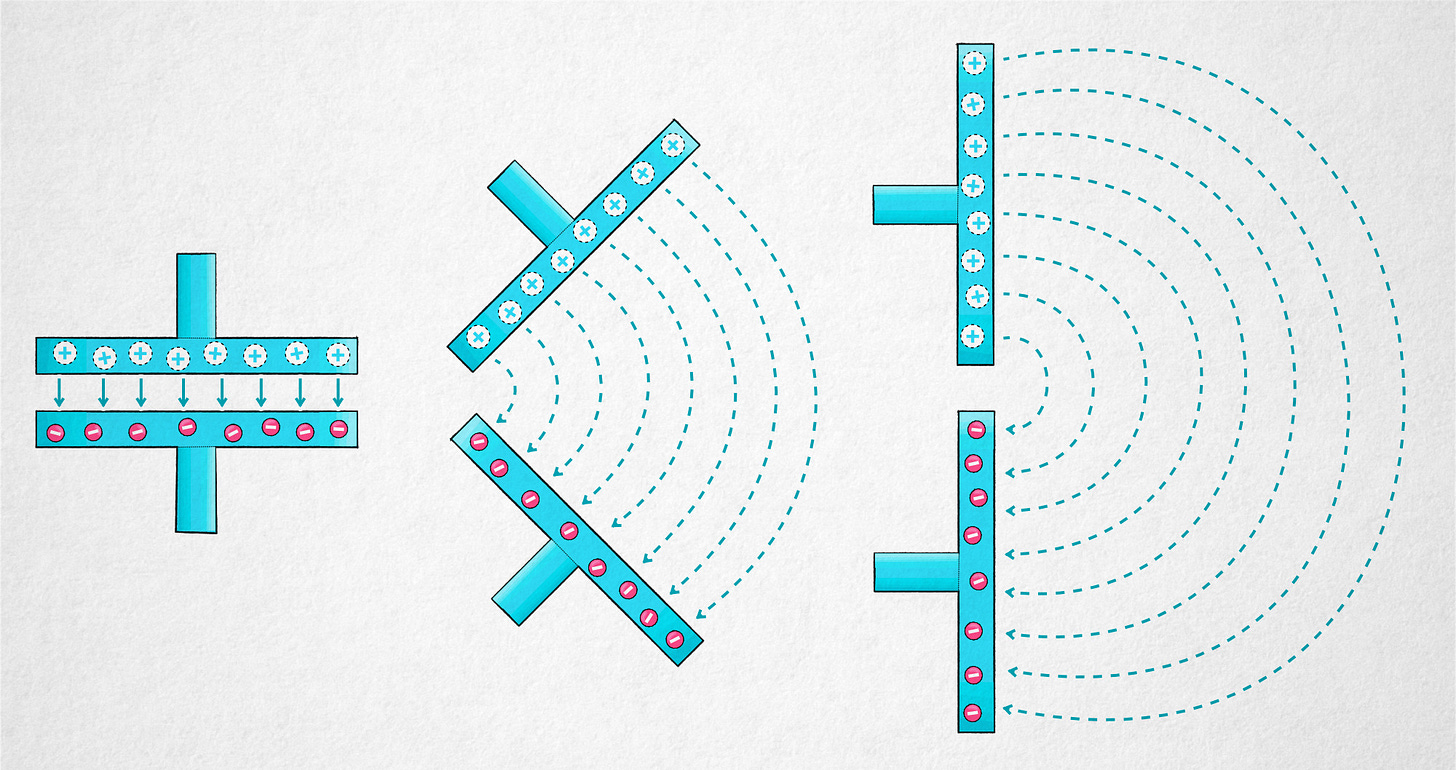

If you’re familiar with the basics of electronics, a simple way to learn about antennas is to imagine a charged capacitor that’s being pulled apart until its internal electric field spills into the surrounding space. This would be a feat of strength, but bear with me for a while:

Electric fields can be visualized by plotting the paths of hypothetical positively-charged particles placed in the vicinity. For our ex-capacitor, we’d be seeing arc-shaped lines that connect the plates — and strictly speaking, extend on both sides all the way to infinity.

An unchanging electric field isn’t very useful: if it’s just sitting there for all eternity, it neither conveys information nor performs meaningful work. If we push a charge against the direction of the field and then let it loose, the particle will fly away – but that’s just a matter of getting back the energy we expended on pushing it into position in the first place.

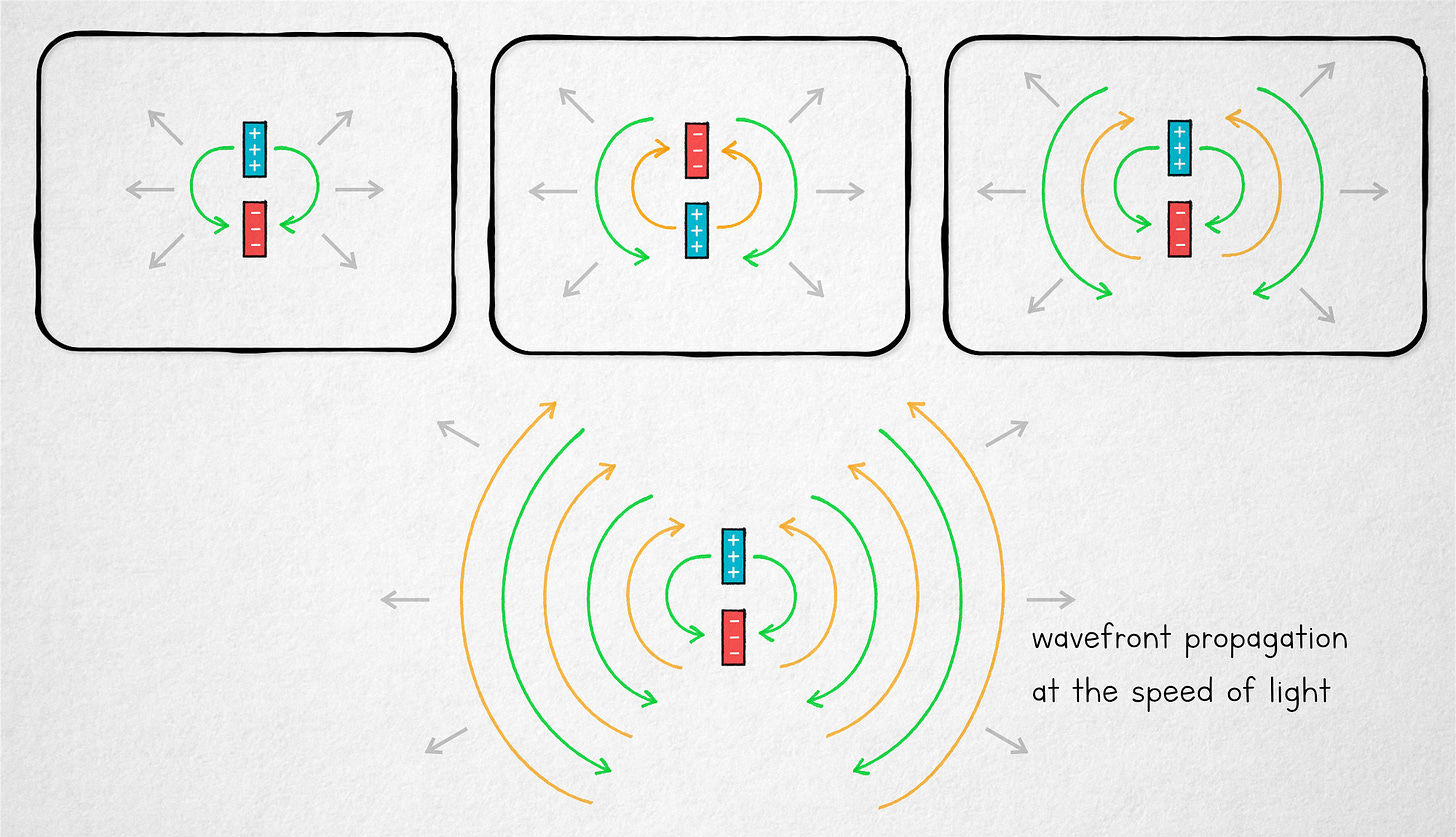

The situation changes if we start moving charges back and forth between the plates. This produces an interesting effect: a ripple-like pattern of alternating fields that are getting away from the ex-capacitor at the speed of light:

The color corresponds to the direction of the field - i.e., the pull that a charged particle would experience if placed in the vicinity: green stands for a “downward” field where the upper half of the antenna is positive and the lower half is negative; yellow is the other way round.

In the case of a static electric field of a charged capacitor, we could always get substantially all the stored energy back just by connecting the plates and allowing the charges to equalize. But in our new scenario, if the field is changing rapidly, then on account of relativity, we can no longer directly recover the energy from the ripples that are getting away at the speed of light. That energy can only be transferred to downstream charges that may begin to move back and forth as they “swim” through the gradients of the incoming field.

It should be fairly evident that the amount of radiated energy increases with the amount of charges shuttled back and forth between the plates — the intensity of each ripple — and with the number of transitions per second (i.e., with the signal’s frequency).

A perfectly uniform waveform is still not useful for communications, but we can encode information by slightly altering the wave’s characteristics over time — for example, tweaking its amplitude. And if we do it this way, then owing to a clever trick we’ll discuss a bit later, simultaneous transmissions on different frequencies can be told apart on the receiving end.

An antenna that actually works

But first, it’s time for a reality check: if we go back to our dismantled capacitor and hook it up to a voltage-based signal source, the setup won’t actually do squat. When we pulled the plates apart, we greatly reduced the device’s capacitance, so we’re essentially looking at an open circuit; a pretty high voltage would be needed to shuffle a decent number of electrons back and forth. Without this motion — i.e., without a healthy current — the relativistic ripples pack very little punch.

The most elegant solution to this problem is known as a half-wavelength (“half-wave”) dipole antenna: two rods along a common axis, driven by a sinusoidal signal fed at the center, each rod exactly ¼ wavelength long. If you’re scratching your head, the conversion from frequency (f, in Hz) to wavelength (λ) is:

$$\lambda = \frac{c}{f}$$

The third value, c, is the speed of light, expressed in your preferred unit of length per second. For example, for f = 200 MHz, λ works out to about 1.5 m (5 ft).
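The conversion is easy to sanity-check in code. The snippet below is a minimal sketch; the function names are made up for illustration, and the rod length is the idealized λ/4 figure from the text, ignoring any real-world corrections.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in vacuum, metres per second


def wavelength_m(frequency_hz: float) -> float:
    """Free-space wavelength for a given frequency: lambda = c / f."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


def dipole_rod_length_m(frequency_hz: float) -> float:
    """Length of each rod of an idealized half-wave dipole (lambda / 4)."""
    return wavelength_m(frequency_hz) / 4


if __name__ == "__main__":
    f = 200e6  # 200 MHz, the example used in the text
    print(f"wavelength: {wavelength_m(f):.2f} m")
    print(f"each rod:   {dipole_rod_length_m(f):.2f} m")
```

For 200 MHz, this gives a wavelength of roughly 1.5 m, so each rod would be a bit under 0.4 m long.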

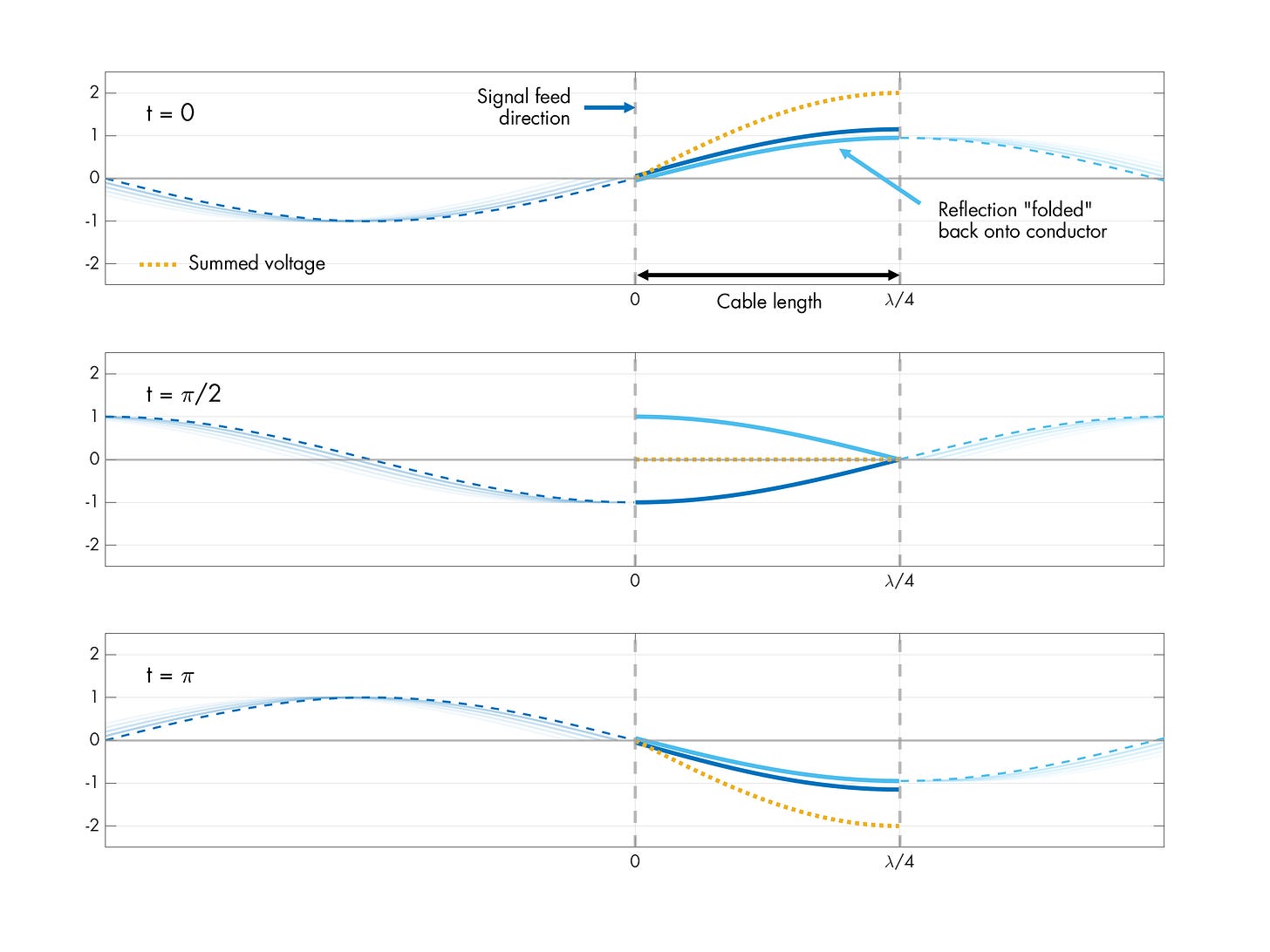

The half-wave dipole has an interesting property: if we take signal propagation delays into account, we can see that every peak of the driving signal reaches the end of the respective λ/4 rod in a way that adds constructively to the reflection of the previous peak that’s coming back from the high-impedance end:

Each reflection is basically just the previous quarter-wave “folded back” onto each rod. (If you’re iffy on signal reflections, I recommend reviewing this article.)

The following animation shows the pattern of superimposed signal and reflections within a single rod. The actual span of the rod is marked by vertical lines. The light blue line is the reflection, which is mirrored back onto the rod. The orange line corresponds to the summed voltage:

I also have a second animation that shows both elements of a dipole antenna in action, this time with some modest reflection losses baked in:

Note that the voltage interference pattern is constructive at the far end but destructive near the feed point. This makes it easy to supply significant alternating currents without needing to overcome any substantial pushback force; the antenna presents itself as a surprisingly low electrical impedance.

All dipoles made for odd multiples of half-wavelength (3/2 λ, 5/2 λ, …) exhibit this resonant behavior. Similar resonance is also present at even multiples (1 λ, 2 λ, …), but the standing wave ends up sitting in the wrong spot — constantly getting in the way of driving the antenna rather than aiding the task.
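The placement of the standing wave can be illustrated with a toy model. The sketch below treats a single rod as a lossless line with an open far end (an assumed voltage reflection coefficient of +1) and sums the forward wave with its reflection as phasors. This is a simplification introduced for illustration, not a full antenna simulation, and the function name is hypothetical.

```python
import cmath
import math


def standing_wave_amplitude(rod_length_wl: float, position_wl: float) -> float:
    """Peak voltage at a point along an open-ended rod for a unit-amplitude drive.

    Both arguments are in wavelengths; position 0 is the feed point.
    Lossless toy model: forward wave plus its reflection from the open end.
    """
    k = 2 * math.pi  # phase shift (radians) per wavelength travelled
    forward = cmath.exp(-1j * k * position_wl)
    # The reflected wave has travelled to the end of the rod and part of the way back.
    reflected = cmath.exp(-1j * k * (2 * rod_length_wl - position_wl))
    return abs(forward + reflected)


if __name__ == "__main__":
    for rod in (0.25, 0.5, 0.75):  # rods of 1/2, 1 and 3/2 wavelength dipoles
        feed = standing_wave_amplitude(rod, 0.0)
        tip = standing_wave_amplitude(rod, rod)
        print(f"rod = {rod:.2f} wl: feed point {feed:.3f}, far end {tip:.3f}")
```

In this model, the λ/4 and 3λ/4 rods show near-zero voltage at the feed point and a maximum at the far end, while the λ/2 rod (a full-wavelength dipole) puts a voltage maximum right at the feed point.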

Other antenna lengths are not perfectly resonant, although they might be close enough. An antenna that’s way too short to resonate properly can be improved with an in-line inductor, which adds some current lag. You might have seen antennas with spring-like sections at the base; the practice is called electrical lengthening. It doesn’t make a stubby antenna perform as well as the real deal, but it helps keep the input impedance in check.

Now that we have a general grasp of half-wave dipoles, let’s have a look at an animation of the actual electric field around a half-wave antenna:

Note the two dead zones along the axis of the antenna; this is due to destructive interference of the electric fields in this axis.

Next, let’s consider what would happen if we placed an identical receiving antenna some distance away from the transmitter. Have a look at receiver A on the right:

It’s easy to see that the red dipole is “swimming” through a coherent pattern of alternating electric fields: the blue region is pulling electrons toward the upper plate, and the yellow region is pushing them down. The antenna experiences back-and-forth currents between its poles at the transmitter’s working frequency. Further, if the antenna’s length is chosen right, there should be constructive interference of the induced currents too, eventually resulting in much higher signal amplitudes.

The illustration also offers an intuitive explanation of something I didn’t mention before: that dipoles longer than ½ wavelength are more directional. If you look at receiver B on the left, it’s clear that even a minor tilt of a long dipole results in the ends being exposed to opposing electric fields, yielding little or no net current flow.

Not all antennas are dipoles, but most operate in a similar way. Monopoles are just a minor riff on the theme, trading one half of the antenna for a connection to the ground. More complex shapes usually crop up as a way to maintain resonance at multiple frequencies or to fine-tune directionality. You might also bump into antenna arrays; these devices exploit patterns of constructive and destructive interference between digitally-controlled signals to flexibly focus on a particular spot.

The ups and downs of signal modulation

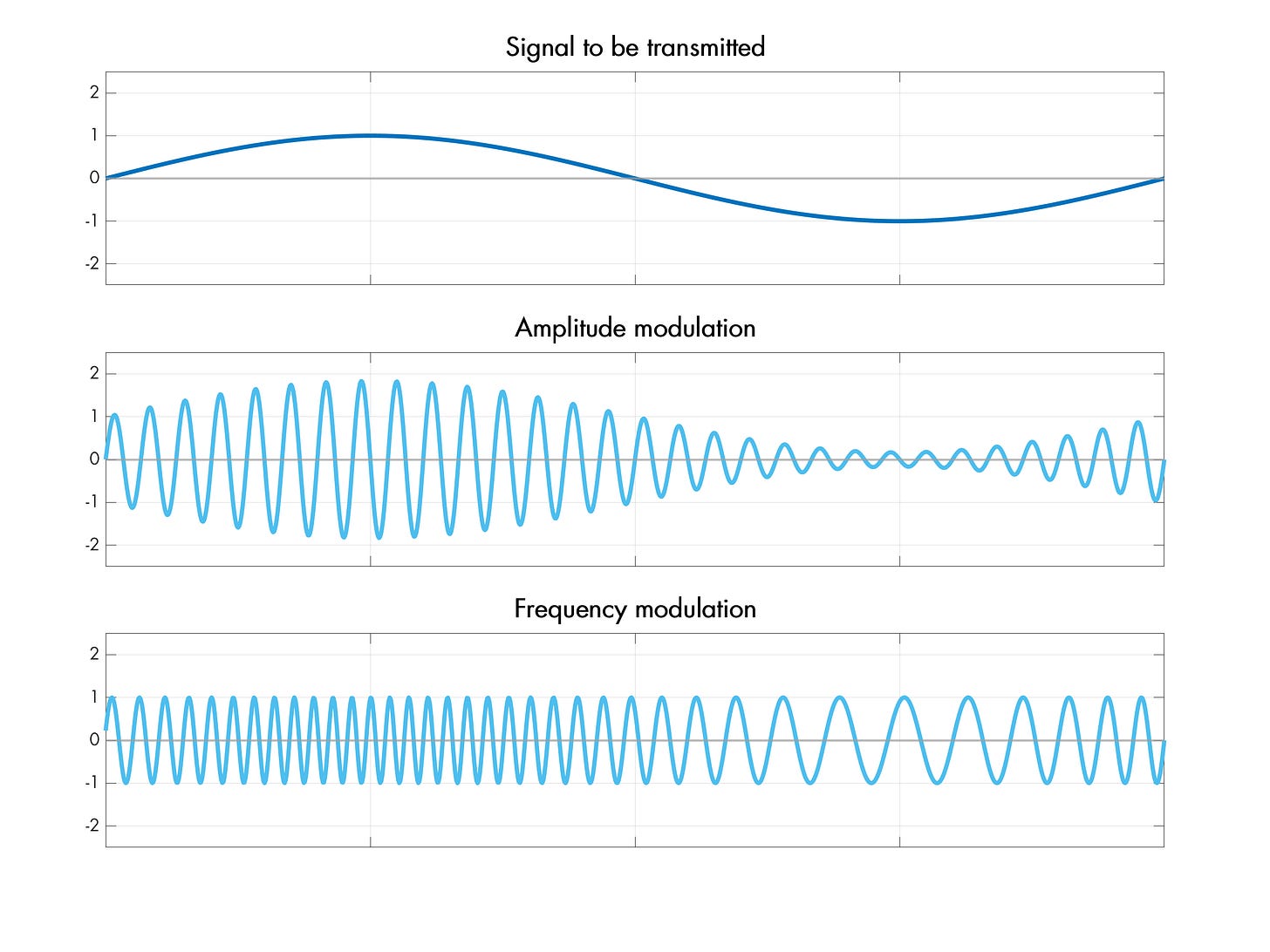

Compared to antenna design, signal modulation is a piece of cake. The two classics are amplitude modulation (AM), which changes the carrier’s amplitude to encode information, and frequency modulation (FM), which shifts the carrier’s frequency up and down:
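For readers who prefer code to plots, here is a rough sketch of how the two waveforms could be generated. The function names, the modulation depth, and the frequency deviation are illustrative assumptions; the message is expected to stay within the -1 to +1 range.

```python
import math


def am_samples(n, sample_rate, carrier_hz, message, depth=0.5):
    """AM: the message scales the amplitude of a fixed-frequency carrier."""
    out = []
    for i in range(n):
        t = i / sample_rate
        out.append((1 + depth * message(t)) * math.sin(2 * math.pi * carrier_hz * t))
    return out


def fm_samples(n, sample_rate, carrier_hz, message, deviation_hz):
    """FM: the message nudges the instantaneous frequency; phase is its running sum."""
    phase = 0.0
    out = []
    for i in range(n):
        out.append(math.sin(phase))
        inst_freq = carrier_hz + deviation_hz * message(i / sample_rate)
        phase += 2 * math.pi * inst_freq / sample_rate
    return out


def tone(t):
    """A 100 Hz test tone standing in for the audio signal."""
    return math.sin(2 * math.pi * 100 * t)


if __name__ == "__main__":
    am = am_samples(1000, 100_000, 10_000, tone)
    fm = fm_samples(1000, 100_000, 10_000, tone, deviation_hz=2_000)
    print(f"AM peak: {max(abs(s) for s in am):.2f}, FM peak: {max(abs(s) for s in fm):.2f}")
```

The AM output swells and shrinks with the message, while the FM output keeps a constant amplitude and only its zero crossings bunch up or spread out.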

On the more advanced end, there’s phase modulation (PM) and quadrature amplitude modulation (QAM), the latter of which robustly conveys information via the relative amplitude of two signals with phases offset by 90°.
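To make the QAM idea a bit more concrete, the following sketch encodes a pair of values (I and Q) as the amplitudes of a cosine and a sine at the same frequency, and then recovers them by mixing with matching references. It assumes an idealized receiver whose reference is perfectly phase-locked to the transmitter and a capture window spanning a whole number of carrier cycles; all names are hypothetical.

```python
import math


def qam_modulate(i_amp, q_amp, carrier_hz, sample_rate, n):
    """Sum of two carriers 90 degrees apart, each with its own amplitude."""
    w = 2 * math.pi * carrier_hz / sample_rate
    return [i_amp * math.cos(w * k) - q_amp * math.sin(w * k) for k in range(n)]


def qam_demodulate(samples, carrier_hz, sample_rate):
    """Recover both amplitudes by mixing with cos / sin references and averaging."""
    w = 2 * math.pi * carrier_hz / sample_rate
    n = len(samples)
    i_est = 2 / n * sum(s * math.cos(w * k) for k, s in enumerate(samples))
    q_est = -2 / n * sum(s * math.sin(w * k) for k, s in enumerate(samples))
    return i_est, q_est


if __name__ == "__main__":
    # 480 samples at 48 kHz cover exactly 10 cycles of a 1 kHz carrier.
    symbol = qam_modulate(0.75, -0.25, 1_000, 48_000, 480)
    print(qam_demodulate(symbol, 1_000, 48_000))
```

The two components don’t interfere with each other because the product of a sine and a cosine of the same frequency averages out to zero.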

In any case, once the carrier signal is isolated, demodulation is typically pretty easy to figure out. For AM, the process can be as simple as rectifying the amplified sine wave with a diode, and then running it through a lowpass filter to obtain the audio-frequency envelope. Other modulations are a bit more involved — FM and PM benefit from phase-locked loops to detect shifts — but most of it isn’t rocket surgery.
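The diode-and-filter approach to AM can be sketched in a few lines. The code below models the diode as an ideal half-wave rectifier and the lowpass filter as a simple one-pole smoother; the sample rate, carrier frequency, and cutoff are arbitrary values picked for the demonstration, not figures from any real receiver.

```python
import math


def envelope_detect(samples, sample_rate, cutoff_hz):
    """Half-wave rectify the signal, then smooth it with a one-pole lowpass filter."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    level = 0.0
    out = []
    for s in samples:
        rectified = max(s, 0.0)               # ideal diode: keep positive half-cycles only
        level += alpha * (rectified - level)  # RC-style smoothing
        out.append(level)
    return out


def correlation(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def demo():
    """Modulate a 50 kHz carrier with a 100 Hz tone, detect it, compare with the original."""
    sample_rate, carrier_hz, audio_hz, n = 1_000_000, 50_000, 100, 50_000
    audio = [math.sin(2 * math.pi * audio_hz * k / sample_rate) for k in range(n)]
    am = [(1 + 0.5 * a) * math.sin(2 * math.pi * carrier_hz * k / sample_rate)
          for k, a in enumerate(audio)]
    recovered = envelope_detect(am, sample_rate, cutoff_hz=1_000)
    return correlation(audio, recovered)


if __name__ == "__main__":
    print(f"correlation between audio and detector output: {demo():.3f}")
```

The detector output tracks the original tone closely; what’s left over is a small DC offset and a bit of carrier-frequency ripple that a better filter would remove.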

Still, there are two finer points to bring up about modulation. First, the rate of change of the carrier signal must be much lower than its running frequency. If the modulation is too rapid, you end up obliterating the carrier wave and turning it into wideband noise. The only reason why resonant antennas and conventional radio tuning circuits work at all is that almost nothing changes cycle-to-cycle — so in the local view, you’re dealing with a nearly-perfect, constant-frequency sine.

The other point is that counterintuitively, all modulation is frequency modulation. Intuitively, AM might feel like a clever zero-bandwidth hack: after all, we’re just changing the amplitude of a fixed-frequency sine wave, so what’s stopping us from putting any number of AM transmissions a fraction of a hertz apart?

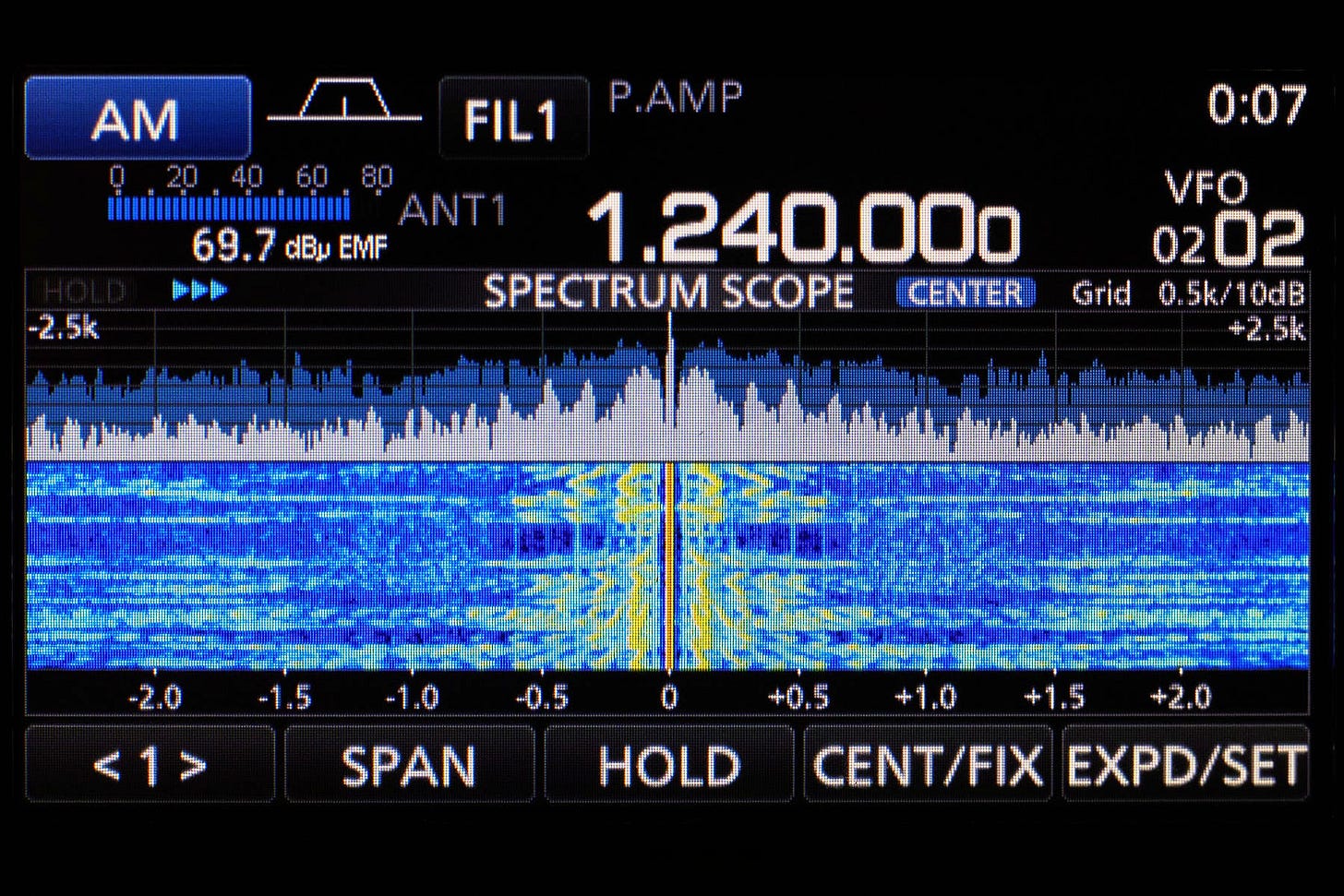

Well, no dice: recall from the discussion of the Fourier transform that any deviation from a steady sine introduces momentary artifacts in the frequency domain. The scale of the artifacts is proportional to the rate of change; AM is not special and takes up frequency bandwidth too. To illustrate, here’s a capture of a local AM station; we see audio modulation artifacts spanning multiple kHz on both sides of the carrier frequency:

We’ll have a proof of this in a moment. But broadly speaking, all types of modulation boil down to taking a low-frequency signal band — such as audio — and transposing it in one way or another to a similarly-sized slice of the spectrum in the vicinity of some chosen center frequency. The difference is the construction method, not the result.

At this point, some readers might object: the Fourier transform surely isn’t the only way to think about the frequency spectrum; just because we see halos on an FFT plot, it doesn’t mean they’re really real. In an epistemological sense, this might be right. But as it happens, radio receivers work by doing something that walks and quacks a lot like Fourier…

Inside a superheterodyne receiver

The basic operation of almost every radio receiver boils down to mixing (multiplying) the amplified antenna signal with a sine wave of a chosen frequency. As foreshadowed just moments ago, this is eerily similar to how Fourier-adjacent transforms deconstruct complex signals into individual frequency components.

From the discussion of the discrete cosine transform (DCT) in the earlier article, you might remember that if a matching frequency is present in the input signal, the multiplication yields a waveform with a DC bias proportional to the magnitude of that frequency component. For all other input frequencies, the resulting waveforms average out to zero, if analyzed on a sufficiently long timescale.
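This is easy to confirm numerically. The sketch below multiplies a test tone by a reference cosine and averages the product over one second; the function name and the sample rate are arbitrary choices made for illustration.

```python
import math


def mixer_dc_bias(input_hz, reference_hz, amplitude=1.0, sample_rate=8000, seconds=1.0):
    """Average of (input tone x reference tone) over the analysis window."""
    n = int(sample_rate * seconds)
    total = 0.0
    for k in range(n):
        t = k / sample_rate
        signal = amplitude * math.cos(2 * math.pi * input_hz * t)
        reference = math.cos(2 * math.pi * reference_hz * t)
        total += signal * reference
    return total / n


if __name__ == "__main__":
    print(f"matching frequency: {mixer_dc_bias(440, 440):+.4f}")
    print(f"10 Hz mismatch:     {mixer_dc_bias(450, 440):+.4f}")
    print(f"matching, 3x input: {mixer_dc_bias(440, 440, amplitude=3.0):+.4f}")
```

With a matching reference, the average settles at half the input amplitude; with a mismatched one, it comes out at essentially zero.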

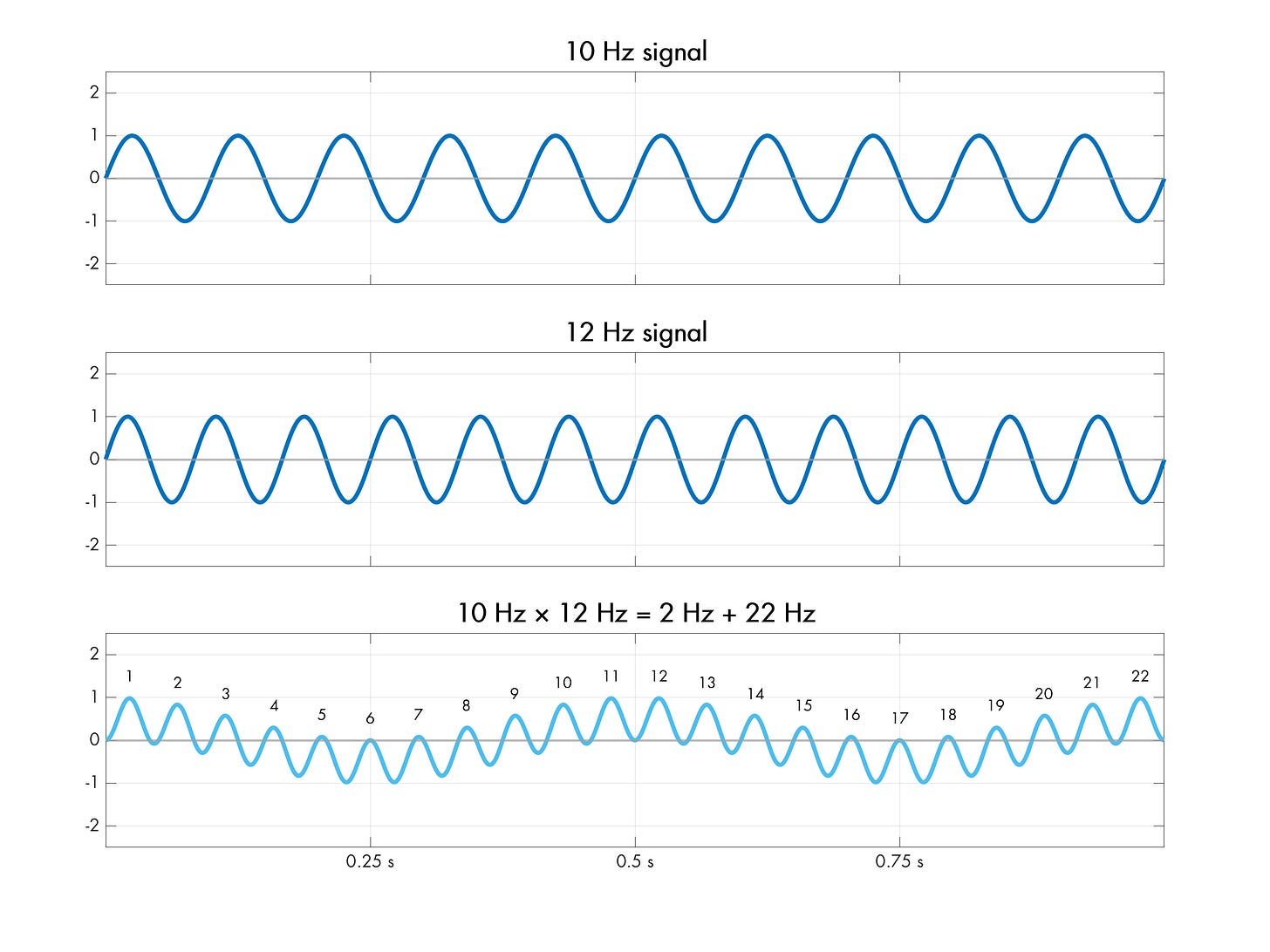

In the aforementioned article, we informally noted that the resulting composite waveforms have shorter periods if the original frequencies are far apart, and longer periods if the frequencies are close. Now, a more precise mathematical model is in order. As it turns out, for scalar multiplication, the low-frequency cycle is always |f1 - f2|, superimposed on top of a (less interesting) high-frequency component f1 + f2:

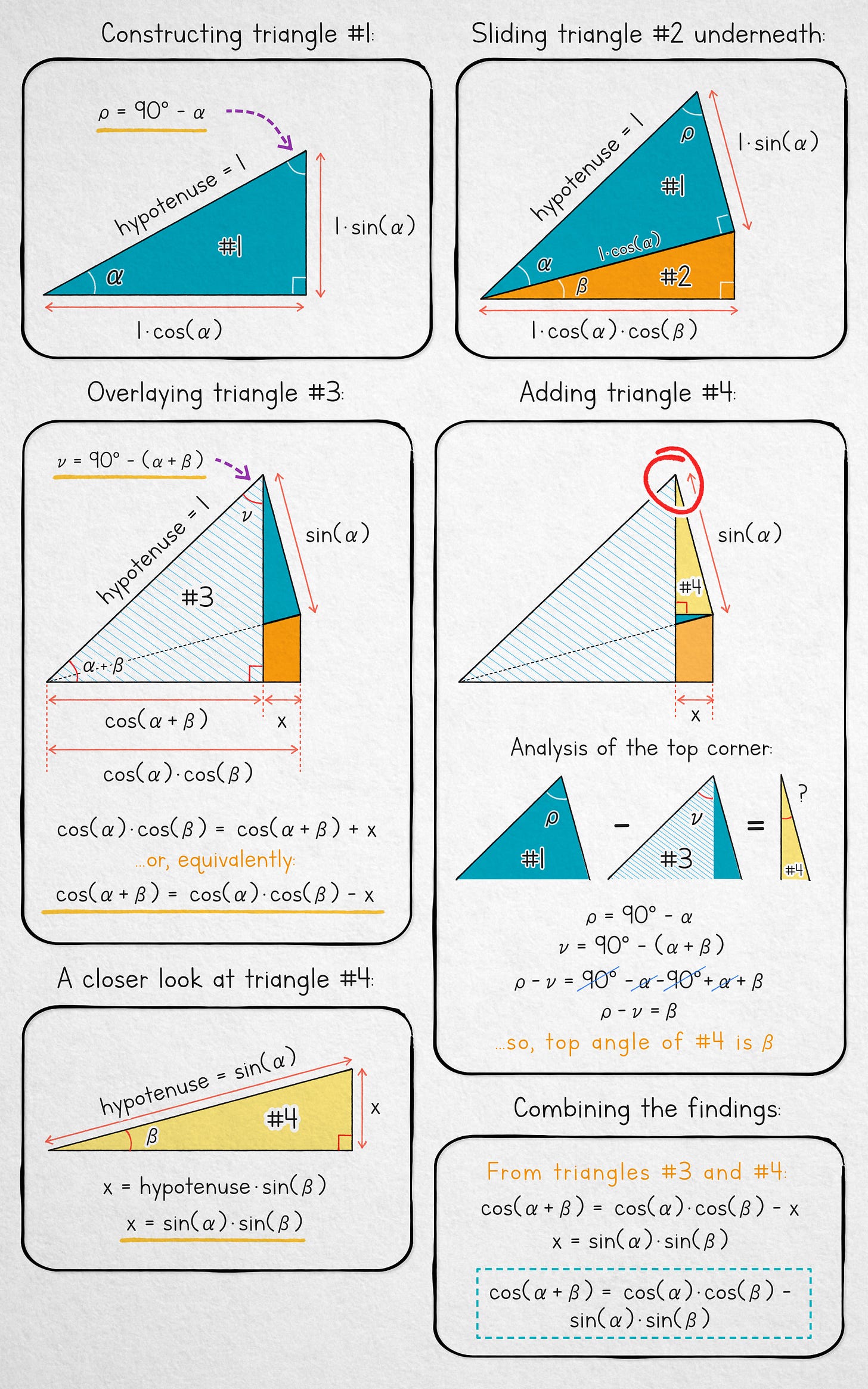

This behavior might seem puzzling, but it arises organically from the properties of sine waves. At its root is the semi-well-known angle sum identity, given by the following formula:

$$\cos(\alpha + \beta) = \cos(\alpha)\cos(\beta) - \sin(\alpha)\sin(\beta)$$

If the formula looks alien to you, we can establish the equality using a pretty cute visual proof:

It’s probably best to zoom in and just walk through the picture, but if you need additional hints, the next couple of paragraphs contain some explanatory text. If the image was enough, skip ahead to paragraph starting with the “fast forward” pictogram (⏩).

Proof narrative: we start with two given angles, α and β. Panel 1 (top left): we construct a right-angle triangle (#1), which has an angle of α and a hypotenuse equal to 1. Next, we calculate the length of the adjacent of that triangle using basic trigonometry — 1 · cos(α). Panel 2 (top right): we slide a second right-angle triangle underneath #1. The new triangle (#2) has an angle of β and a hypotenuse of the same length as the adjacent of triangle #1: cos(α); this makes the adjacent of #2 equal to cos(α) · cos(β).

Panel 3 (middle left): we add another right triangle #3, overlaying it on top of the other two. The new triangle inherits a hypotenuse of 1 from triangle #1, and has a combined angle of α + β as a consequence of being partly inscribed inside #1 and #2. It follows that its adjacent is 1 · cos(α + β).

The bottom edge of the combined figure has a length equal to the known adjacent of #2: cos(α) · cos(β). Alternatively, the length can be expressed as the adjacent of #3 – cos(α + β) – plus some unknown segment, x.

We solve for that mystery segment by looking at a new helper triangle (#4). Panel 4 (middle right): we find the top angle of that triangle, which works out to β because it’s the difference between angle ρ calculated in panel #1 and angle υ marked in panel #3. Panels 5 and 6 (bottom): a quick analysis shows that x is equal to sin(α) · sin(β).

⏩ Putting it all together, we obtain the following angle sum identity:

$$\cos(\alpha + \beta) = \cos(\alpha)\cos(\beta) - \sin(\alpha)\sin(\beta)$$

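The identity for cos(α + β) can be trivially extended to cos(α - β), because subtraction is the same as adding a negative number:

$$\cos(\alpha - \beta) = \cos(\alpha)\cos(-\beta) - \sin(\alpha)\sin(-\beta) = \cos(\alpha)\cos(\beta) + \sin(\alpha)\sin(\beta)$$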

Now that we have these two formulas, let’s see what happens if we sum cos(α - β) with cos(α + β):

$$\cos(\alpha - \beta) + \cos(\alpha + \beta) = 2\cos(\alpha)\cos(\beta)$$

Next, divide both sides by two and flip the expression around; this nets us a formula that equates the product of (i.e., the mix of) two sine frequencies to the sum of two independent cosines running at |f1 - f2| and f1 + f2:

$$\cos(\alpha)\cos(\beta) = \frac{1}{2}\left[\cos(\alpha - \beta) + \cos(\alpha + \beta)\right]$$

In other words, it describes the exact relationship we’ve been looking at.
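If the algebra feels too abstract, the product-to-sum relationship can also be spot-checked numerically. The sketch below compares the mixed signal to the sum of two half-amplitude cosines at the difference and sum frequencies; the frequencies and the sampling grid are arbitrary picks.

```python
import math


def mixed(f1, f2, t):
    """Product of two cosines: what an ideal mixer outputs."""
    return math.cos(2 * math.pi * f1 * t) * math.cos(2 * math.pi * f2 * t)


def sum_of_tones(f1, f2, t):
    """Two half-amplitude cosines at the difference and sum frequencies."""
    return 0.5 * (math.cos(2 * math.pi * (f1 - f2) * t)
                  + math.cos(2 * math.pi * (f1 + f2) * t))


def worst_mismatch(f1, f2, steps=10_000, duration=0.01):
    """Largest difference between the two expressions over a sampling grid."""
    return max(abs(mixed(f1, f2, i * duration / steps)
                   - sum_of_tones(f1, f2, i * duration / steps))
               for i in range(steps))


if __name__ == "__main__":
    print(f"largest mismatch: {worst_mismatch(1000.0, 900.0):.2e}")
```

The mismatch stays at the level of floating-point rounding error, as one would expect from an exact identity.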

We don’t even need to believe in trigonometry. A closely-related phenomenon has been known to musicians for ages: when you simultaneously play two very similar tones, you end up with an unexpected, slowly-pulsating “beat frequency”. Here’s a demonstration of a 5 Hz beat produced by combining 400 Hz and 405 Hz:
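The beat can be reproduced on paper, too. The sketch below relies on a standard sum-to-product identity (not derived in this article) to rewrite the sum of two tones as a single tone at the average frequency, multiplied by a slow envelope. The function names are made up for illustration.

```python
import math


def two_tones(t, f1=400.0, f2=405.0):
    """Two tones played at the same time (summed, not multiplied)."""
    return math.cos(2 * math.pi * f1 * t) + math.cos(2 * math.pi * f2 * t)


def beat_form(t, f1=400.0, f2=405.0):
    """The same signal: a tone at the mean frequency times a slowly varying envelope."""
    return 2 * math.cos(math.pi * (f1 + f2) * t) * math.cos(math.pi * (f1 - f2) * t)


if __name__ == "__main__":
    for t in (0.0, 0.1, 0.2):
        print(f"t = {t:.1f} s: {two_tones(t):+.3f} vs {beat_form(t):+.3f}")
```

The envelope term runs at 2.5 Hz, but loudness depends on its magnitude rather than its sign, so the sound swells twice per envelope cycle: silence at 0.1 s, full volume again at 0.2 s, for a perceived 5 Hz beat.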

The behavior is also a serendipitous way to formalize the earlier observation about AM: this scheme essentially takes a signal running at a carrier frequency a and varies its amplitude by multiplying the carrier by some slower-running sine at a frequency b. From the formula we derived earlier on, the result of this multiplication is necessarily indistinguishable from the superposition of two symmetrical sinusoidal transmissions offset from a by ± b, so AM signals take up bandwidth just the same as any other modulation scheme.
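The sidebands show up readily in a numerical experiment. The sketch below builds an AM signal and measures the amplitude of individual frequency components by mixing with reference tones. It assumes the common form of AM where the modulating tone is added to a constant of 1 before multiplication, which leaves the carrier in place alongside the two sidebands; the specific frequencies and function names are arbitrary.

```python
import math


def am_signal(carrier_hz, audio_hz, depth, sample_rate, n):
    """Carrier whose amplitude is varied by a slower cosine."""
    return [(1 + depth * math.cos(2 * math.pi * audio_hz * k / sample_rate))
            * math.cos(2 * math.pi * carrier_hz * k / sample_rate)
            for k in range(n)]


def tone_amplitude(samples, sample_rate, freq_hz):
    """Amplitude of a single frequency component, found by mixing with cos and sin."""
    w = 2 * math.pi * freq_hz / sample_rate
    re = sum(s * math.cos(w * k) for k, s in enumerate(samples))
    im = sum(s * math.sin(w * k) for k, s in enumerate(samples))
    return 2 * math.hypot(re, im) / len(samples)


if __name__ == "__main__":
    signal = am_signal(1000, 50, 0.5, 8000, 8000)  # one second of samples
    for f in (900, 950, 1000, 1050, 1100):
        print(f"{f:5d} Hz: {tone_amplitude(signal, 8000, f):.3f}")
```

The only frequencies with any content are the 1000 Hz carrier and the two sidebands at 950 Hz and 1050 Hz, each sideband at a quarter of the carrier’s amplitude for a modulation depth of 0.5.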

In any case, back to radio: it follows that if one wanted to receive transmissions centered around 10 MHz, a straightforward approach would be to mix the input RF signal with a 10 MHz sine. According to our formulas, this should put the 10.00 MHz signal at DC, downconvert 10.01 MHz to a 10 kHz beat (with an extra 20.01 MHz component), turn 10.02 MHz into 20 kHz (+ 20.02 MHz), and so forth. With the mixing done, the next step would be to apply a lowpass filter to the output, keeping only the low frequencies that are a part of the modulation scheme — and getting rid of everything else, including the unwanted f1 + f2 components that popped up around 20 MHz.

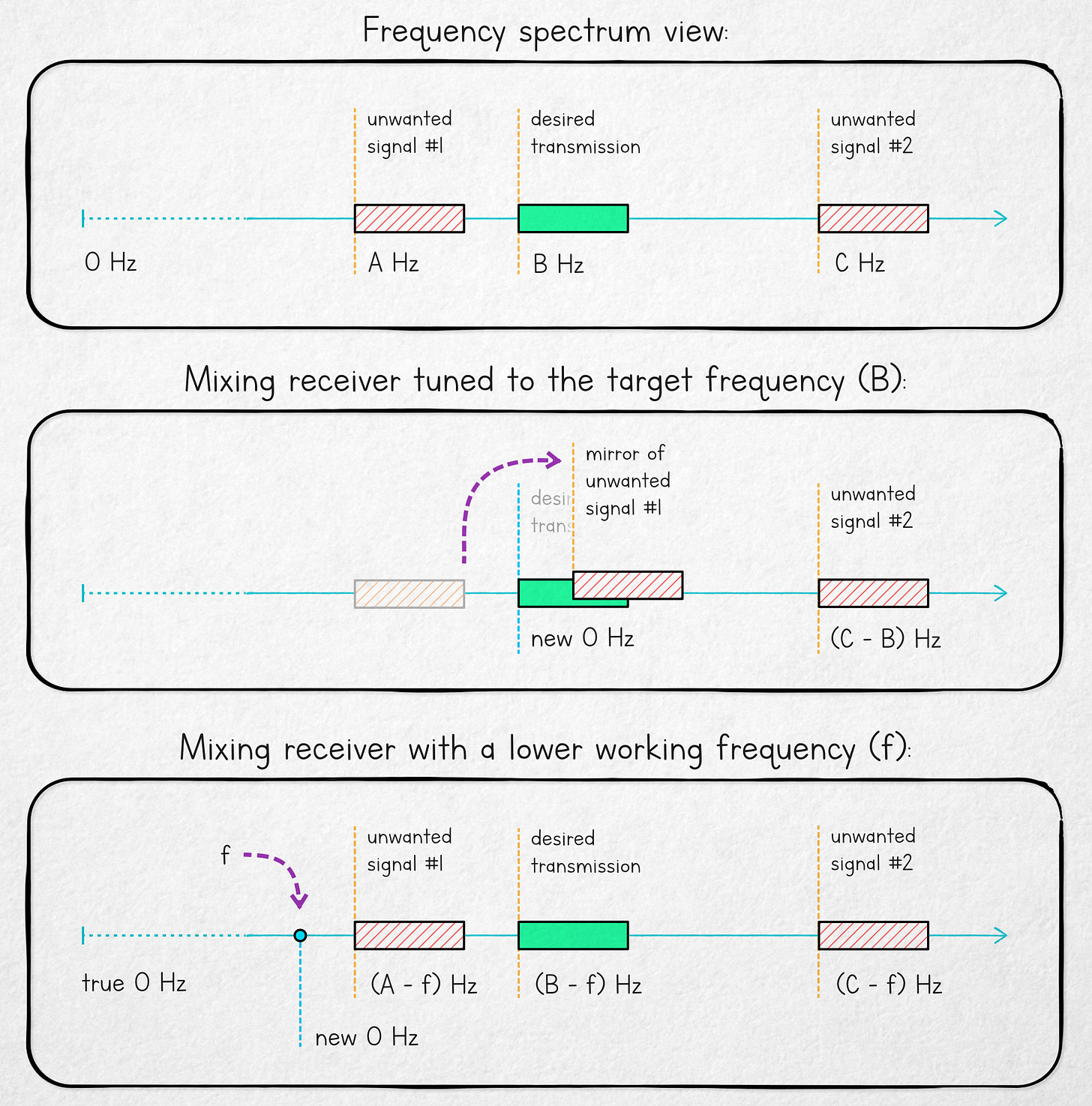

The folly of this method is the absolute value in the |f1 - f2| formula, a consequence of cos(x) being the same as cos(-x). This causes unwanted input transmissions directly below 10 MHz to produce beats that are indistinguishable from the desirable signals directly above 10 MHz; for example, a component at 9.99 MHz will produce an image in the same place as a 10.01 MHz signal, both ending up at 10 kHz.
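The mirroring problem boils down to simple arithmetic. Here’s a tiny sketch, with a made-up helper name and frequencies expressed as whole hertz to sidestep rounding issues:

```python
def mixer_products(signal_hz: int, oscillator_hz: int) -> tuple[int, int]:
    """Return the (difference, sum) frequencies produced by an ideal mixer."""
    return abs(signal_hz - oscillator_hz), signal_hz + oscillator_hz


if __name__ == "__main__":
    oscillator = 10_000_000
    for signal in (10_010_000, 10_020_000, 9_990_000):
        low, high = mixer_products(signal, oscillator)
        print(f"{signal / 1e6:.2f} MHz -> {low / 1e3:.0f} kHz and {high / 1e6:.2f} MHz")
```

Both 10.01 MHz and 9.99 MHz land on the same 10 kHz difference frequency; once that happens, no amount of downstream filtering can separate them.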

To avoid this mirroring, superheterodyne receivers mix the RF input with a frequency f that’s lower than the signal of interest, shifting the transmission to some reasonably low, constant intermediate frequency (IF) — and then using comparatively simple bandpass filters to pluck out the relevant bits. A visualization of the benefits of using this approach is shown below:

In this design — devised by Edwin Armstrong around 1919 and dubbed superheterodyne — the fundamental mirroring behavior is still present, but the point of symmetry (f) can be controlled and placed far away. With this trick up our sleeve, accidental mirror images of unrelated transmissions become easier to manage — for example, by designing the antenna to have a narrow frequency response and not pick up more distant frequencies at all, or by putting a coarse filter in front of the mixer. The behavior of superheterodynes is sometimes taken into account for radio spectrum allocation purposes, too.
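To get a feel for how far away the mirror image ends up, consider the sketch below. It follows the arrangement described above, with the local oscillator placed below the signal of interest. The 455 kHz intermediate frequency is an assumption picked for the example, and the function name is hypothetical.

```python
def superhet_plan(signal_hz: int, if_hz: int) -> dict:
    """Oscillator and image frequencies when the oscillator sits below the signal."""
    oscillator_hz = signal_hz - if_hz   # mixing shifts the wanted signal down to the IF
    image_hz = oscillator_hz - if_hz    # mirror image: same distance on the other side
    return {"oscillator_hz": oscillator_hz, "image_hz": image_hz}


if __name__ == "__main__":
    plan = superhet_plan(signal_hz=10_000_000, if_hz=455_000)
    print(f"oscillator: {plan['oscillator_hz'] / 1e6:.3f} MHz")
    print(f"image:      {plan['image_hz'] / 1e6:.3f} MHz")
```

In this example, the image sits at 9.09 MHz, a full 910 kHz away from the 10 MHz signal we care about. That’s far enough for a coarse filter ahead of the mixer to deal with it.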


I landed on this post via a link to your other post on snowblowers from HN.

What you mentioned here--"AM might feel like a clever zero-bandwidth hack: after all, we’re just changing the amplitude of a fixed-frequency sine wave"--is one of the things that was hard for me to wrap my head around when I first studied radio frequency in high school.

I had tried asking on [stackexchange](https://electronics.stackexchange.com/questions/438512/why-do-ook-transmissions-have-bandwidth) a while back, and basically got the same answer, but less clearly stated. Moreover, most of the books I read at the time didn't mention this question at all, so I was glad to see you clarify the explanation here.

Nice explanation! I wrote an article on a similar topic that also tells the story of Armstrong.

https://open.substack.com/pub/viksnewsletter/p/how-a-superheterodyne-transceiver?r=222kot&utm_medium=ios