Unreal numbers

Reals are real weird.

A while ago, I posted an article about the 19th and early 20th century quest to derive mathematics from the principles of formal logic. We kicked off with Peano arithmetic, which built natural numbers from two ad-hoc constructs: an element representing zero and a recursive successor function S(…).

Later on, we leaned on set theory to encode the underlying structure of these symbols. This netted us a hierarchy of set-theoretic natural numbers known as ordinals. It also led to an interesting insight: if we allowed the existence of infinite sets, then the set of all natural numbers (ℕ) itself had the structure of an ordinal. In the article, we labeled this infinite number ω and demonstrated that it could be manipulated using the same arithmetic rules as finite numbers, but that it sometimes behaved in wacky ways. For example, we established that ω + 1 ≠ 1 + ω.

We also touched on various methods of reasoning about the magnitude of ordinals and showed that they diverge from each other in the realm of infinities. In particular, we talked about Georg Cantor’s notion of cardinality, which put many distinct infinite ordinals in the same size class, but indicated that there’s a fundamental difference in scale between the set of natural numbers and the set of reals (ℝ).

If you haven’t read the article but are intrigued, I strongly encourage you to give it a go (link). I think it’s an excellent and accessible introduction; if you need an endorsement, the brain trust over at HN called it “watered down” and “slop”.

If you followed the article, there might be one thing that’s bugging you: we carefully defined natural numbers from first principles, but then pulled reals out of a hat. This is a gap worth addressing, because as it turns out, real numbers are profoundly weird.

Natural numbers (ℕ)

As a quick recap, in the earlier article, we defined the relationship between labels such as “1”, “2”, or “3” by choosing an object that represented zero and then recursively applying a successor function:

In Peano arithmetic, the label “0” and the successor function had no explicit structure. In the set-theoretic approach we switched to later, we defined them with more precision. That said, this distinction isn’t relevant right now.

The important point is that in both models, we could lean on the successor function to define the behavior of the “+” operator using the following two rules:

This ruleset allows us to solve problems such as 2 + 2 without any prior assumptions about what “2” or “+” might mean. First, because “2” is defined as S(1), 2 + 2 is the same as 2 + S(1). This form allows us to apply rule #2, restating 2 +2 as S(2 + 1):

After this, we can apply the same reasoning again to expand the nested 2 + 1 sum:

We now have a doubly-nested sum involving zero, so we can apply rule #1, get rid of the sum, and “unwind” the successor functions to arrive at the result:

Again, if you’re interested in a more detailed walkthrough, including C code that explains the process in programmer-friendly terms, check out the article linked earlier on.

What we haven’t covered before is that we can use a similar approach to recursively define multiplication for natural numbers:

In effect, without having to explicitly define subtraction, the we’re saying that a · b can be rewritten as a · (b-1) + a, and that this expansion should be continued until we get to a · 0. At that point, the multiplication part works out to zero, so we just unwind the stack and gather all the “+ a” terms. This will come in handy in a while.

Integers (ℤ)

A major hurdle on our path toward a complete system of arithmetic is that natural numbers can’t represent negative values. This means that if we attempt to define subtraction, many results will not have an in-system representation, throwing a wrench in the works.

The usual way to conjure negative numbers from ℕ is to define a new hierarchy of integer numbers, each consisting of an ordered pair of naturals: (a, b). The goal is to use the pair to represent a difference between the underlying terms; that is, integer +5 could be encoded as (5, 0) while integer -5 would become (0, 5).

You might be wondering if we just pulled the concept of an “ordered pair” out of thin air. Yes and no! It’s new here, but in set theory, these pairs map to normal sets, except we structure the mapping so that (a, b) differs from (b, a). This can be done in a number of ways, but a common approach devised by Kazimierz Kuratowski is:

Coming back to the earlier trick, the elements of each pair are natural numbers, so we already know how to add them. This lets us define the addition rules for pair-based integers as:

Because each integer pair represents a difference between two natural numbers, multiplication of these pairs follow the conceptual pattern of (a - b) · (c - d) = ac - ad - bc + bd. Splitting out the positive and negative terms to construct the resulting integer, we get:

These rules work, sort of. For example, adding integer +5 and -5 nets us:

The result (5, 5) seems to be saying “zero”, but is not what we’d choose as the canonical representation of that value; we would have preferred (0, 0). More generally, we’d say that any two pairs (a, b) and (c, d) represent the same integer if a - b = c - d. Yet, our system doesn’t take this property into account.

Because we still haven’t defined subtraction, we must first shuffle the terms around to express the a - b = c - d criterion in terms of addition:

With this pair equivalence relationship defined, we assign labels such as “+5” not to a specific pair, but to an entire equivalence class: a collection of ordered pairs that satisfy the same criteria. In this model, integers look the following way:

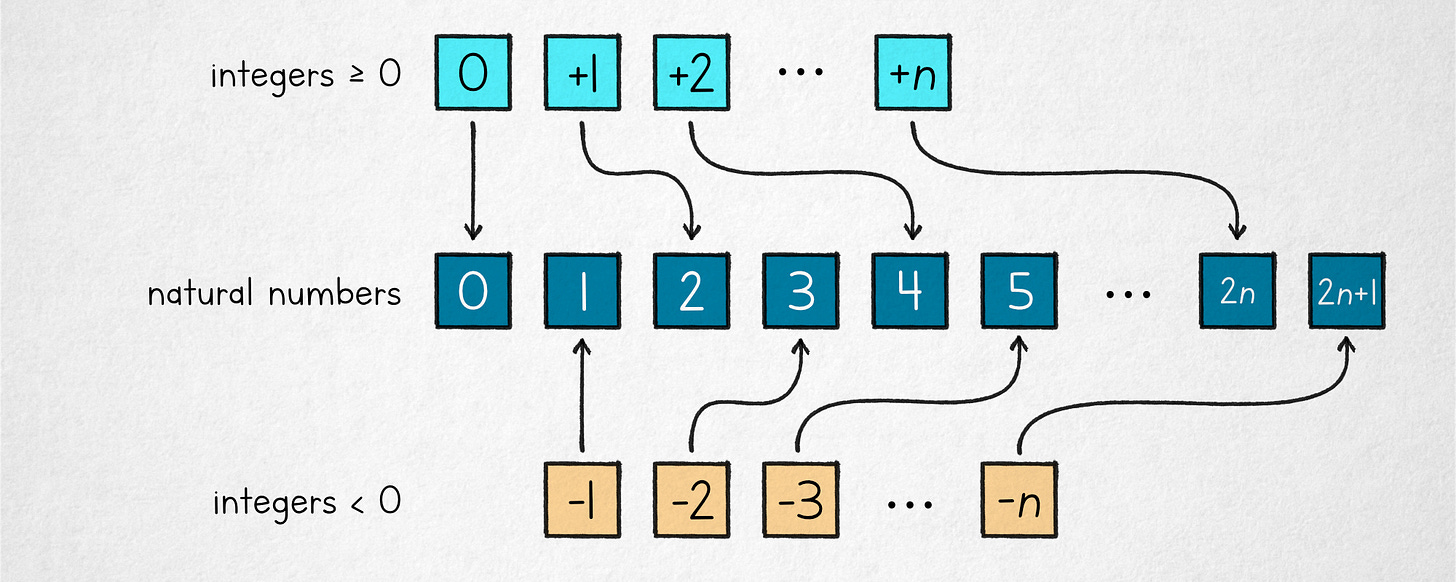

We’re mostly done with integers, but before we wrap up, let’s ponder if the set of integers is “larger” than the set of natural numbers. By some metrics, you could argue it is. That said, as hinted in the earlier article, every integer can be mapped to a natural number without the risk of running out of naturals:

Because this mildly mind-bending approach is conceptually possible, we say that the sets have the same cardinality.

Rationals (ℚ)

Rational numbers represent values that can be expressed as a ratio of two integers: a / b. In the previous section, we defined integers using an ordered pair that effectively encoded subtraction: a - b. So, here’s the cool part: nothing stops us from taking two integers and fashioning them into a new type of a pair that encodes division. Each of these pairs forms a single rational number.

In this model, we want pairs (a, b) and (c, d) to be equivalent if a / b = c / d. We don’t have division defined yet, but we know how to multiply integers, so we can restate this equivalence rule as:

This nets us the following taxonomy:

The multiplication rule for two pairs representing rational numbers boils down the multiplication of the underlying integers:

The addition of these pairs is equally straightforward and follows the normal a/b + c/d = (ad + cb) / bd pattern:

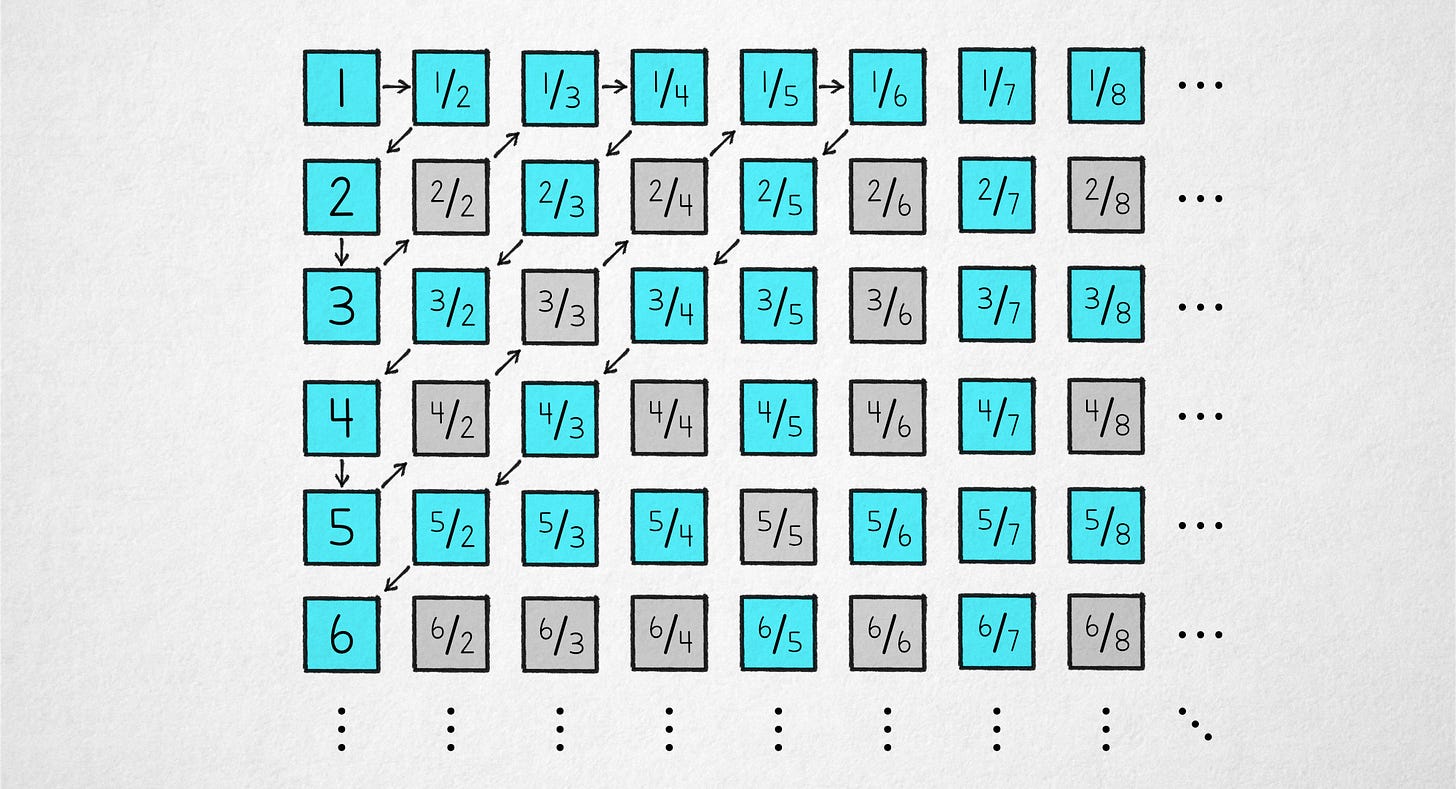

What’s the “size” of ℚ? Well, again, depends on how we look at it, but we can show that the cardinality of is not greater than ℕ. One visual approach is to construct a two-dimensional array of fractions in the form of x/y:

It should be evident that because x and y coordinates cycle through every possible natural number, the array contains all positive rational fractions. Some of the tiles are redundant (e.g., 2 is the same as 4/2), but this is not important for the proof.

With the rationals laid out, we can traverse this grid in a way that lets us assign every tile to an integer without leaving any gaps and without ever running out of members of ℕ. The start of one such traversal pattern is indicated by arrows in the figure. We start in the top left corner, move one tile to the right, take a a sharp turn to and start moving diagonally until we hit the vertical edge, move one tile down, and then follow a diagonal pattern back. Rinse, repeat. By analogy to what we’ve done for integers, the result doesn’t change if we toss negative rationals into the mix.

Computable numbers

Not every number can be expressed as an integer fraction. The two examples of irrational numbers that every reader should be familiar with are √2, which can be expressed in polynomial terms, and π, which cannot.

Although these numbers can’t be represented as simple fractions, they can be explained in terms of an algorithm you need to follow to approximate them to an arbitrary degree. For example, the sum of the following terms starting at n = 0 will slowly but surely converge to π as the count of the summed elements grows:

Within the bounds of the precision of floating-point numbers, you can observe the behavior by running the following C code (demo link):

#include <stdio.h> #include <stdint.h> int main() { double sum = 0; for (uint64_t n = 0, pos = 0; n <= 16384; n++) { sum += 8.0 / ((4*n + 1) * (4*n + 3)); if (n >> pos) { printf("[%5ld] %.05f\n", n, sum); pos++; } } }

At first blush, it would appear that any well-specified irrational number of our choice can be expressed as a finite algorithm. This leads to a concept that should appeal to any geek: computable numbers. These are numbers that can be approximated to an arbitrary precision in finite time by a theoretical model of a computer known as a Turing machine. In effect, the number is the algorithm (or the equivalence class of algorithms).

Interestingly, the cardinality of the set of computable numbers is still the same as the cardinality of ℕ. An intuitive explanation is that there are only as many computable numbers as there are Turing machines that generate them. The ruleset of every Turing machine can be encoded as a finite natural number — you could just use a representation of an ASCII string — so we’re still in the realm of countable infinities.

Reals (ℝ)

Of course, we don’t teach about computable numbers in school. Instead, the most common “upgrade” from ℚ are reals: an idealized continuum on which, to put in a hand-wavy way, every number exists whether we know how to algorithmically approximate it or not.

Of course, my phrasing is severely deficient. It’s not a free-for-all: 🥔 (a potato) is not a real number, and to avoid a variety of complications, neither is √-1. The set ℝ extends ℚ, but it does so only in the immediate vicinity of rationals. Pick any real and I can find a rational fraction that’s arbitrarily close.

To describe the underlying structure of real numbers, we can turn to Dedekind cuts. Informally, the idea is that we can unambiguously identify each real number by associating it with the set of all rationals that come before it. To describe real number x, we could take the set of rational numbers and partition it into an ordered pair of sets (A, B), such that set A contains every rational less than x and set B contains every rational equal or greater than x.

At first blush, this description may seem circular: to build the representation of a real number, we need to know where to make the cut. That said, the point isn’t that Dedekind’s method lets you find the exact spot for π. It’s that the universe of real numbers is built by taking every possible Dedekind cut of ℚ. The numbers are the cuts.

To be fair, in some cases, the partition can be well-defined even when dealing with irrationals. For example, to build the cut (A, B) representing √2, we can say that set A consists of every rational q such that q² < 2 (plus q < 0). We can work with that, but again: the existence of a real number doesn’t depend on us knowing where to make the cut.

Once we have numbers expressed in terms of Dedekind cuts, we can define arithmetic operations, too. For example, to add real (A, B) to (C, D), we construct a new number (E, F) such that for every rational a selected from A and every rational c selected from C, the sum a + c is placed in E. In the same vein, for every b in B and d in D, the sum b + d goes into F.

I’ll spare you the abstract set notation, but as a practical example, if (A, B) represents a cut at 2 and (C, D) represents a cut at 3, we know that every a selected from A will be less than 2 and every c chosen from C will be less than 3. Therefore, every a + c value placed in E will be always less than 5. In the same vein, every b + d that goes into F will be greater or equal than 5. That’s the same as the cut representing the real number 5.

We now have continuum that contains numbers that are allowed to exist regardless of whether they can be described by an effective, finite procedure. As an unexpected consequence, the cardinality of ℝ is higher than ℕ.

We explored this property in the earlier article, but to briefly recap the argument, imagine an arbitrary, infinite mapping that assigns every integer to a real:

For every real number in the mapping, I underlined a successive decimal position. Equipped with this, we can imagine a new real that could be built by looking at each of the underlined digits and then placing a different digit in the corresponding position of the newly-constructed value.

By construction, this new real necessarily differs by at least one digit from every existing entry in the mapping. This means we still have a value — or really, an infinite supply of values — left over after assigning every natural number to a real. It would appear that there is a fundamentally higher “number” of reals than naturals — an uncountable infinity.

So what?

Well… from the earlier discussion, recall that the cardinality of computable numbers was the same as the cardinality of ℕ. The cardinality of ℝ — the “magnitude” of the set of reals — is fundamentally greater than that. In other words, we could assert that most reals are not computable.

But what would be an example of an uncomputable number? That’s a good question. Most obviously, we could be talking about numbers that encode the solution to the halting problem. It would lead to a paradox to have a computer program that allows us to decide, in the general case, whether a given computer program halts. So, if a procedure to approximate a particular real necessarily requires solving the halting problem, we couldn’t have a computer that does that.

If you’re interested in a more thorough exploration of the idea, check out my earlier article on busy beavers and the limits of algorithmic knowledge. But to cut to the chase, there are those who believe that the universe is functionally a computer — that is, that its rules are deterministic and can be simulated by a Turing machine. If so, that would imply that uncomputable numbers can’t be zeroed in on by any physical process, including human thought. They would be truly out of reach… and again, this would apply to almost every member of ℝ.

Cue the Twilight Zone theme music — and see you in a bit.

Good article. The real numbers are so weird as to raise the question: Is reality rational but not real?

Once again a marvelous article.

Thank you man. You make learning these issues so intuitive.